Having your temperature taken has become a regular feature of day-to-day life during the COVID-19 pandemic. Everywhere from doctors’ offices to bars, testing for high body temperatures is a precaution many public establishments now take when people enter the premises. Here’s the origin story of the tool that’s had us on edge since the crisis began.

In the early 17th century, during the Scientific Revolution, when the frontiers of discovery were marked by new ways to quantify natural phenomena, Galileo Galilei was forging new, innovative and empirically based methods in astronomy, physics and engineering. He also got humanity started toward a lesser-known but crucial advance: the ability to measure heat.

During this era, a flurry of measuring devices and units of measurement were invented, eventually forging the standard units we have in place today. Galileo is credited with the invention of the thermoscope, a device for gauging heat. But it’s not the same as a thermometer. It couldn’t measure—meter—temperature because it had no scale.

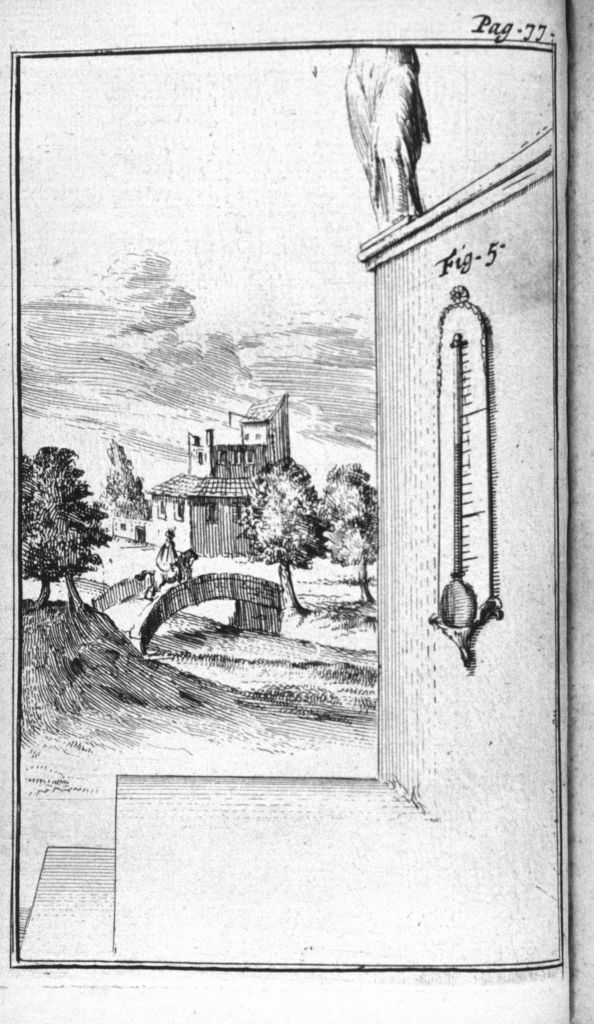

Around 1612, with a name so nice he used it twice, Venetian scholar Santorio Santorio made crucial conceptual advances to the thermoscope. He’s been credited with adding a scale—an advancement about as fundamental as the invention of the device itself. The early thermoscopes basically consisted of a vertically oriented glass tube with a bulb at the top and a base suspended in a pool of liquid such as water, which ran up a length of the column. As the temperature of the air in the bulb increased, its expansion changed the height of the liquid in the column. Santorio’s writings indicate that he set the maximum by heating the thermoscope’s bulb with a candle flame, and he set the minimum by contacting it with melting snow.

He may even have been the first to apply the thermometer to the field of medicine, as a device for objectively comparing body temperatures. To take a measurement, the patient would either hold the bulb with their hand or breathe on it.

In the 1650s, another breakthrough occurred when Ferdinando II de’ Medici, Grand Duke of Tuscany, made key design changes to the old thermoscope. De’ Medici is cited as the first to create a sealed design, unaffected by air pressure. His thermoscope consisted of a vertical glass tube filled with “spirit of wine” (distilled wine), in which glass bubbles of varying levels of air pressure rose and fell with changes in temperature. He was so into measuring heat that in 1657 he started a private academy, the Accademia del Cimento, where investigators explored various forms and shapes for their thermoscopes, including ornate-looking designs with spiraling cylindrical columns. Owing to the improvements in both the form and function of the instruments, demand for them grew steadily over the final 50 years of the 17th century, when they became known as “Florentine thermoscopes.”

Yet even with this improved functionality, accurate temperature measurement had quite a ways to go. There was still no accepted standard for calibration. The ways in which people tried to find a reference point were ridiculously arbitrary; they used standards as wide-ranging as the melting point of butter, the internal temperature of animals, the cellar temperature of the Paris observatory, the warmest or coldest day of the year in various cities, and “glowing coals in the kitchen fire.” No two thermometers registered the same temperature. It was a mess.

Enter Danish astronomer Olaus Rømer, who heralded an innovation that would change thermometry forever. In 1701 he had the idea to calibrate a scale relative to something much more accessible: the freezing and boiling points of water. Similar to the way we measure minutes within an hour, the range could be divided between these points into 60 degrees. Although this is what he could have done, and it would have been great, he didn’t quite get there. Awkwardly, since he had originally used frozen brine as the lower-end calibration point, his measurement of the freezing point of water occurred at 7.5 degrees, not zero. Known today as the Rømer scale, it bears historical importance, but is not formally in use.
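For readers who like to see the arithmetic, here is a minimal Python sketch of how a reading on the Rømer scale lines up with the modern Celsius scale. It assumes nothing beyond the two fixed points described above (water freezing at 7.5 degrees and boiling at 60), and the function name is invented for illustration.

```python
# Fixed points of the Rømer scale, as described in the text.
ROMER_FREEZING = 7.5   # reading at which water freezes
ROMER_BOILING = 60.0   # reading at which water boils


def romer_to_celsius(romer: float) -> float:
    """Linearly map a Rømer reading onto 0 (freezing) to 100 (boiling)."""
    span = ROMER_BOILING - ROMER_FREEZING  # 52.5 Rømer degrees between the two points
    return (romer - ROMER_FREEZING) * 100.0 / span


print(romer_to_celsius(ROMER_FREEZING))  # 0.0
print(romer_to_celsius(ROMER_BOILING))   # 100.0
```

The awkward 7.5 shows up as an offset that has to be subtracted before any scaling can happen, which is exactly the inconvenience a zero at the freezing point would have avoided.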

As interest in thermoscopes continued to grow throughout Europe, a young merchant discovered that the instruments were becoming an increasingly popular trading commodity. He also found them to be utterly fascinating. His name was Daniel Gabriel Fahrenheit. Although you’re probably not surprised to hear his name come up here, his story is a rather remarkable one.

Fahrenheit was born in 1686 in Danzig, Poland (now Gdańsk) into a successful mercantile family. In 1701, when Fahrenheit was only 15, both his father and mother met an unusual fate: they died from eating poisonous mushrooms. Along with his siblings, he was taken in by new guardians and appointed to a merchant’s apprenticeship. Young Daniel, however, didn’t care much for the profession. He was more interested in science and glassblowing (you can see where this is going). Studying, creating and designing thermometers and barometers became his calling. But in his relentless pursuit of these activities, he accrued debt that he was unable to cover.

Although Fahrenheit was entitled to an inheritance from his parents, he could not yet use it to pay the debt. Instead, his new guardians were held responsible for it. Their solution: appoint him as a seafaring laborer for the Dutch East India Company so that he could earn the money to repay them. Fahrenheit escaped his fate by fleeing the country. He needed to wait out the years until he was 24, when he would be entitled to his inheritance and able to make good on his financial obligations. So he wandered through Germany, Denmark and Sweden for 12 years while continuing to pursue his love of science.

Eventually, his path crossed with Rømer’s in Amsterdam. Their collaboration spawned the first quicksilver (mercury) thermometer, which afforded greater accuracy and precision than its predecessors. Thanks to the improved mercury-based design, Fahrenheit was finally able to make multiple thermometers that gave consistent readings.

With the rising demand for thermometers, Fahrenheit was in the perfect position to develop his eponymous scale. He based it on Rømer’s but calibrated the zero point to the freezing temperature of a brine solution made of an equal mixture of water, salt and ice—substances accessible to all. He found that the surface of a solution of equal parts water and ice froze at 32 degrees, which is now the commonly known “freezing point” in the Fahrenheit scale. With two more increments of 32—that is, 96 degrees—the scale matched what Fahrenheit measured as the temperature of the human body, as calibrated by placement of the thermometer under his armpit. It all fit nicely together, and so the gauge caught on, eventually becoming temperature’s first standard scale.
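The spacing of those reference points is easy to check. The short Python sketch below lists the three points named above and, as an assumption for illustration only, treats Fahrenheit's original degrees as identical to modern ones so that the standard Fahrenheit-to-Celsius conversion can be applied. The constant and function names are made up for this example.

```python
# Fahrenheit's three reference points, as described in the text.
BRINE_FREEZING = 0     # mixture of water, salt and ice
WATER_FREEZING = 32    # mixture of water and ice
BODY_TEMPERATURE = 96  # thermometer held under the armpit


def fahrenheit_to_celsius(f: float) -> float:
    """Modern conversion: 32 °F is 0 °C, and 9 Fahrenheit degrees span 5 Celsius degrees."""
    return (f - 32) * 5 / 9


# Body temperature sits exactly three 32-degree steps above the brine zero.
print((BODY_TEMPERATURE - BRINE_FREEZING) // WATER_FREEZING)  # 3

# Assumption: original degrees equal modern ones, which is only approximately true.
print(round(fahrenheit_to_celsius(BODY_TEMPERATURE), 1))  # 35.6
```

Under that assumption, his body-temperature mark lands a little below the figure of roughly 37 degrees Celsius usually quoted today.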

With 32 a seemingly arbitrary number for the base of the thermometer, it might not be entirely surprising that his choice has served as fodder for conspiracy theorists. There were rumors that Fahrenheit was an active Freemason and based his scale’s starting point on the “32 degrees of enlightenment,” which accord with some rites of Freemasonry. However, no official records of his membership in the Freemasons exist.

When one considers the sensibility actually put forth by Fahrenheit to mark the scale in 32-degree increments between the freezing of the brine mixture and his (almost accurate) measurement of human body temperature, the gauge doesn’t seem so arbitrary. Its oddness was highlighted only after the world began to adopt the metric system.

The convenience of the metric scale and its application to a variety of measurements—distance, volume, mass, electricity—meant that more and more colonies adopted it as a standard for trade. Because it integrated with the universal numeric system in units of ten, commonly known as “base ten,” it was intuitive and made calculations easier. By the mid-20th century, the metric system dominated the globe.

Upon the worldwide adoption of the metric scale, the Fahrenheit system was succeeded by the scale invented by Swedish astronomer Anders Celsius in 1742. He made the calibration process more accurate by simply using the freezing and boiling points of water at sea level—no more salt mixture requiring its own measurements, à la Fahrenheit. On his original scale, however, 100 degrees was the freezing point. Rather than Celsius, it was Jean-Pierre Christin, a French physicist, mathematician, astronomer and musician, who, around the same time as Celsius’ innovation, conceived of a similar arrangement but with 100 as the boiling point—the current Celsius scale.
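The difference between the two arrangements amounts to flipping the scale end for end. Here is a minimal Python sketch, assuming only the fixed points given above (100 at freezing on Celsius's original scale, 100 at boiling on the current one); the function name is hypothetical.

```python
def original_to_modern_celsius(reading: float) -> float:
    """Flip Celsius's original scale (100 at freezing, 0 at boiling) to the current one."""
    return 100.0 - reading


print(original_to_modern_celsius(100.0))  # 0.0, water freezes
print(original_to_modern_celsius(0.0))    # 100.0, water boils
```

Because the size of a degree is the same in both versions, no scaling is needed, only the reversal.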

Adapted with permission from Out Cold: A Chilling Descent Into the Macabre, Controversial, Lifesaving History of Hypothermia by Phil Jaekl, available now from PublicAffairs.
