Frances Haugen is in the back of a Paris taxi, waving a piece of sushi in the air.

The cab is on the way to a Hilton hotel, where this November afternoon she is due to meet with the French digital economy minister. The Eiffel Tower appears briefly through the window, piercing a late-fall haze. Haugen is wolfing down lunch on the go, while recalling an episode from her childhood. The teacher of her gifted and talented class used to play a game where she would read to the other children the first letter of a word from the dictionary and its definition. Haugen and her classmates would compete, in teams, to guess the word. “At some point, my classmates convinced the teacher that it was unfair to put me on either team, because whichever team had me was going to win and so I should have to compete against the whole class,” she says.

Did she win? “I did win,” she says with a level of satisfaction that quickly fades to indignation. “And so imagine! That makes kids hate you!” She pops an edamame into her mouth with a flourish. “I look back and I’m like, That was a bad idea.”

She tells the story not to draw attention to her precociousness—although it does do that—but to share the lesson it taught her. “This shows you how badly some educators understand psychology,” she says. While some have described the Facebook whistle-blower as an activist, Haugen says she sees herself as an educator. To her mind, an important part of her mission is driving home a message in a way that resonates with people, a skill she has spent years honing.

It is the penultimate day of a grueling three-week tour of Europe, during which Haugen has cast herself in the role of educator in front of the U.K. and E.U. Parliaments, regulators and one tech conference crowd. Haugen says she wanted to cross the Atlantic to offer her advice to lawmakers putting the final touches on new regulations that take aim at the outsize influence of large social media companies.

The new U.K. and E.U. laws have the potential to force Facebook and its competitors to open up their algorithms to public scrutiny, and face large fines if they fail to address problematic impacts of their platforms. European lawmakers and regulators “have been on this journey a little longer” than their U.S. counterparts, Haugen says diplomatically. “My goal was to support lawmakers as they think through these issues.”

Beginning in late summer, Haugen, 37, disclosed tens of thousands of pages of internal Facebook documents to Congress and the Securities and Exchange Commission (SEC). The documents were the basis of a series of articles in the Wall Street Journal that sparked a reckoning in September over what the company knew about how it contributed to harms ranging from its impact on teens’ mental health and the extent of misinformation on its platforms to human traffickers’ open use of its services. The documents paint a picture of a company that is often aware of the harms to which it contributes, but is either unwilling or unable to act against them. Haugen’s disclosures set Facebook stock on a downward trajectory, formed the basis for eight new whistle-blower complaints to the SEC and have prompted lawmakers around the world to intensify their calls for regulation of the company.

Facebook has rejected Haugen’s claims that it puts profits before safety, and says it spends $5 billion per year on keeping its platforms safe. “As a company, we have every commercial and moral incentive to give the maximum number of people as much of a positive experience as possible on our apps,” a spokesperson said in a statement.

Although many insiders have blown the whistle on Facebook before, nobody has left the company with the breadth of material that Haugen shared. And among legions of critics in politics, academia and media, no single person has been as effective as Haugen in bringing public attention to Facebook’s negative impacts. When Haugen decided to blow the whistle against Facebook late last year, the company employed more than 58,000 people. Many had access to the documents that she would eventually pass to authorities. Why did it take so long for somebody to do what she did?

One answer is that blowing the whistle against a multibillion-dollar tech company requires a particular combination of skills, personality traits and circumstances. In Haugen’s case, it took one near-death experience, a lost friend, several crushed hopes, a cryptocurrency bet that came good and months in counsel with a priest who also happens to be her mother. Haugen’s atypical personality, glittering academic background, strong moral convictions, robust support networks and self-confidence also helped. Hers is the story of how all these factors came together—some by chance, some by design—to create a watershed moment in corporate responsibility, human communication and democracy.

When debate coach Scott Wunn first met a 16-year-old Haugen at Iowa City West High School, she had already been on the team for two years, after finishing junior high a year early. He was an English teacher who had been headhunted to be the debate team’s new coach. The school took this kind of extracurricular activity seriously, and so did the young girl with the blond hair. In their first exchange, Wunn remembers Haugen grilling him about whether he would take coaching as seriously as his other duties.

“I could tell from that moment she was very serious about debate,” says Wunn, who is now the executive director of the National Speech and Debate Association. “When we ran tournaments, she was the student who stayed the latest, who made sure that all of the students on the team were organized. Everything that you can imagine, Frances would do.”

Haugen specialized in a form of debate that specifically asked students to weigh the morality of every issue, and by her senior year, she had become one of the top 25 debaters in the country in her field. “Frances was a math whiz, and she loved political science,” Wunn says. In competitive debate, you don’t get to decide which side of the issue you argue for. But Haugen had a strong moral compass, and when she was put in a position where she had to argue for something she disagreed with, she didn’t fall back on “flash in the pan” theatrics, her former coach remembers. Instead, she would dig deeper to find evidence for an argument she could make that wouldn’t compromise her values. “Her moral convictions were strong enough, even at that age, that she wouldn’t try to manipulate the evidence such that it would go against her morality,” Wunn says.

When Haugen got to college, she realized she needed to master another form of communication. “Because my parents were both professors, I was used to having dinner-table conversations where, like, someone would have read an interesting article that day, and would basically do a five-minute presentation,” she says. “And so I got to college, and I had no idea how to make small talk.”

Today, Haugen is talkative and relaxed. She’s in a good mood because she got to “sleep in” until 8:30 a.m.—later than most other days on her European tour, she says. At one point, she asks if I’ve seen the TV series Archer and momentarily breaks into a song from the animated sitcom.

After graduating from Olin College of Engineering—where, beyond the art of conversation, she studied the science of computer engineering—Haugen moved to Silicon Valley. During a stint at Google, she helped write the code for Secret Agent Cupid, the precursor to popular dating app Hinge. She took time off to undertake an M.B.A. at Harvard, a rarity for software engineers in Silicon Valley and something she would later credit with helping her diagnose some of the organizational flaws within Facebook. But in 2014, while back at Google, Haugen’s trajectory was knocked off course.

Haugen has celiac disease, a condition that means her immune system attacks her own tissues if she eats gluten. (Hence the sushi.) She “did not take it seriously enough” in her 20s, she says. After she made repeated trips to the hospital, doctors eventually realized she had a blood clot in her leg that had been there for anywhere between 18 months and two years. Her leg turned purple, and she ended up in the hospital for over a month. There she had an allergic reaction to a drug and nearly bled to death. She suffered nerve damage in her hands and feet, a condition known as neuropathy, which still affects her today.

“I think it really changes your priorities when you’ve almost died,” Haugen says. “Everything that I had defined myself [by] before, I basically lost.” She was used to being the wunderkind who could achieve anything. Now, she needed help cooking her meals. “My recovery made me feel much more powerful, because I rebuilt my body,” she says. “I think the part that informed my journey was: You have to accept when you whistle-blow like this that you could lose everything. You could lose your money, you could lose your freedom, you could alienate everyone who cares about you. There’s all these things that could happen to you. Once you overcome your fear of death, anything is possible. I think it gave me the freedom to say: Do I want to follow my conscience?”

Once Haugen was out of the hospital, she moved back into her apartment but struggled with daily tasks. She hired a friend to assist her part time. “I became really close friends with him because he was so committed to my getting better,” she says. But over the course of six months, in the run-up to the 2016 U.S. presidential election, she says, “I just lost him” to online misinformation. He seemed to believe conspiracy theories, like the idea that George Soros runs the world economy. “At some point, I realized I couldn’t reach him,” she says.

Soon Haugen was physically recovering, and she began to consider re-entering the workforce. She spent stints at Yelp and Pinterest as a successful product manager working on algorithms. Then, in 2018, a Facebook recruiter contacted her. She told him that she would take the job only if she could work on tackling misinformation in Facebook’s “integrity” operation, the arm of the company focused on keeping the platform and its users safe. “I took that job because losing my friend was just incredibly painful, and I didn’t want anyone else to feel that pain,” she says.

Her optimism that she could make a change from inside lasted about two months. Haugen’s first assignment involved helping manage a project to tackle misinformation in places where the company didn’t have any third-party fact-checkers. Everybody on her team was a new hire, and she didn’t have the data scientists she needed. “I went to the engineering manager, and I said, ‘This is the inappropriate team to work on this,’” she recalls. “He said, ‘You shouldn’t be so negative.’” The pattern repeated itself, she says. “I raised a lot of concerns in the first three months, and my concerns were always discounted by my manager and other people who had been at the company for longer.”

Before long, her entire team was shifted away from working on international misinformation in some of Facebook’s most vulnerable markets to working on the 2020 U.S. election, she says. The documents Haugen would later disclose to authorities showed that in 2020, Facebook spent 3.2 million hours tackling misinformation, although just 13% of that time was spent on content from outside the U.S., the Journal reported. Facebook’s spokesperson said in a statement that the company has “dedicated teams with expertise in human rights, hate speech and misinformation” working in at-risk countries. “We dedicate resources to these countries, including those without fact-checking programs, and have been since before, during and after the 2020 U.S. elections, and this work continues today.”

Haugen said that her time working on misinformation in foreign countries made her deeply concerned about the impact of Facebook abroad. “I became concerned with India even in the first two weeks I was in the company,” she says. Many people who were accessing the Internet for the first time in places like India, Haugen realized after reading research on the topic, did not even consider the possibility that something they had read online might be false or misleading. “From that moment on, I was like, Oh, there is a huge sleeping dragon at Facebook,” she says. “We are advancing the Internet to other countries far faster than it happened in, say, the U.S.,” she says, noting that people in the U.S. have had time to build up a “cultural muscle” of skepticism toward online content. “And I worry about the gap [until] that information immune system forms.”

In February 2020, Haugen sent a text message to her parents asking if she could come and live with them in Iowa when the pandemic hit. Her mother Alice Haugen recalls wondering what pandemic she was talking about, but agreed. “She had made a spreadsheet with a simple exponential growth model that tried to guess when San Francisco would be shut down,” Alice says. A little later, Frances asked if she could send some food ahead of her. Soon, large Costco boxes started arriving at the house. “She was trying to bring in six months of food for five people, because she was afraid that the supply lines might break down,” Alice says. “Our living room became a small grocery store.”

After quarantining for 10 days upon arrival, the younger Haugen settled into lockdown life with her parents, continuing her work for Facebook remotely. “We shared meals, and every day we would have conversations,” Alice says. She recalled her daughter voicing specific concerns about Facebook’s impact in Ethiopia, where ethnic violence was playing out on—and in some cases being amplified by—Facebook’s platforms. On Nov. 9, Facebook said it had been investing in safety measures in Ethiopia for more than two years, including activating algorithms to down-rank potentially inflammatory content in several languages in response to escalating violence there. Haugen acknowledges the work, saying she wants to give “credit where credit is due,” but claims the social network was too late to intervene with safety measures in Ethiopia and other parts of the world. “The idea that they don’t even turn those knobs on until people are getting shot is completely unacceptable,” she says. “The reality right now is that Facebook is not willing to invest the level of resources that would allow it to intervene sooner.”

A Facebook spokesperson defended the prioritization system in its statement, saying that the company has long-term strategies to “mitigate the impacts of harmful offline events in the countries we deem most at risk … while still protecting freedom of expression and other human rights principles.”

What Haugen saw was happening in nations like Ethiopia and India would clarify her opinions about “engagement-based ranking”—the system within Facebook more commonly known as “the algorithm”—that chooses which posts, out of thousands of options, to rank at the top of users’ feeds. Haugen’s central argument is that human nature means this system is doomed to amplify the worst in us. “One of the things that has been well documented in psychology research is that the more times a human is exposed to something, the more they like it, and the more they believe it’s true,” she says. “One of the most dangerous things about engagement-based ranking is that it is much easier to inspire someone to hate than it is to compassion or empathy. Given that you have a system that hyperamplifies the most extreme content, you’re going to see people who get exposed over and over again to the idea that [for example] it’s O.K. to be violent to Muslims. And that destabilizes societies.”

In the run-up to the 2020 U.S. election, according to media reports, some initiatives proposed by Facebook’s integrity teams to tackle misinformation and other problems were killed or watered down by executives on the policy side of the company, who are responsible both for setting the platform’s rules and lobbying governments on Facebook’s behalf. Facebook spokespeople have said in response that the interventions were part of the company’s commitment to nuanced policymaking that balanced freedom of speech with safety. Haugen’s time at business school taught her to view the problem differently: Facebook was a company that prioritized growth over the safety of its users.

“Organizational structure is a wonky topic, but it matters,” Haugen says. Inside the company, she says, she observed the effect of these repeated interventions on the integrity team. “People make decisions on what projects to work on, or advance, or give more resources to, based on what they believe is the chance for success,” she says. “I think there were many projects that could be content-neutral—that didn’t involve us choosing what are good or bad ideas, but instead are about making the platform safe—that never got greenlit, because if you’ve seen other things like that fail, you don’t even try them.”

Being with her parents, particularly her mother, who left a career as a professor to become an Episcopal priest, helped Haugen become comfortable with the idea she might one day have to go public. “I was learning all these horrific things about Facebook, and it was really tearing me up inside,” she says. “The thing that really hurts most whistle-blowers is: whistle-blowers live with secrets that impact the lives of other people. And they feel like they have no way of resolving them. And so instead of being destroyed by learning these things, I got to talk to my mother … If you’re having a crisis of conscience, where you’re trying to figure out a path that you can live with, having someone you can agonize to, over and over again, is the ultimate amenity.”

Haugen didn’t decide to blow the whistle until December 2020, by which point she was back in San Francisco. The final straw came when Facebook dissolved Haugen’s former team, civic integrity, whose leader had asked employees to take an oath to put the public good before Facebook’s private interest. (Facebook denies that it dissolved the team, saying instead that members were spread out across the company to amplify its influence.) Haugen and many of her former colleagues felt betrayed. But her mother’s counsel had mentally prepared her. “It meant that when that moment happened, I was actually in a pretty good place,” Haugen says. “I wasn’t in a place of crisis like many whistle-blowers are.”

In March, Haugen moved to Puerto Rico, in part for the warm weather, which she says helps with her neuropathy pain. Another factor was the island’s cryptocurrency community, which has burgeoned because of the U.S. territory’s lack of capital gains taxes. In October, she told the New York Times that she had bought into crypto “at the right time,” implying that she had a financial buffer that allowed her to whistle-blow comfortably.

Haugen’s detractors have pointed to the irony of her calling for tech companies to do their social duty, while living in a U.S. territory with a high rate of poverty that is increasingly being used as a tax haven. Some have also pointed out that Haugen is not entirely independent: she has received support from Luminate, a philanthropic organization pushing for progressive Big Tech reform in Europe and the U.S., and which is backed by the billionaire founder of eBay, Pierre Omidyar. Luminate paid Haugen’s expenses on her trip to Europe and helped organize meetings with senior officials. Omidyar’s charitable foundation also provides annual funding to Whistleblower Aid, the nonprofit legal organization that is now representing Haugen pro bono. Luminate says it entered into a relationship with Haugen only after she went public with her disclosures.

Haugen resigned from Facebook in May this year, after being told by the human-resources team that she could not work remotely from a U.S. territory. The news accelerated the secret project that she had decided to begin after seeing her old team disbanded. To collect the documents she would later disclose, Haugen trawled Facebook’s internal employee forum, Workplace. She traced the careers of integrity colleagues she admired—many of whom had left the company in frustration—gathering slide decks, research briefs and policy proposals they had worked on, as well as other documents she came across.

While collecting the documents, she had flashbacks to her teenage years preparing folders of evidence for debates. “I was like, Wow, this is just like debate camp!” she recalls. “When I was 16 and doing that, I had no idea that it would be useful in this way in the future.”

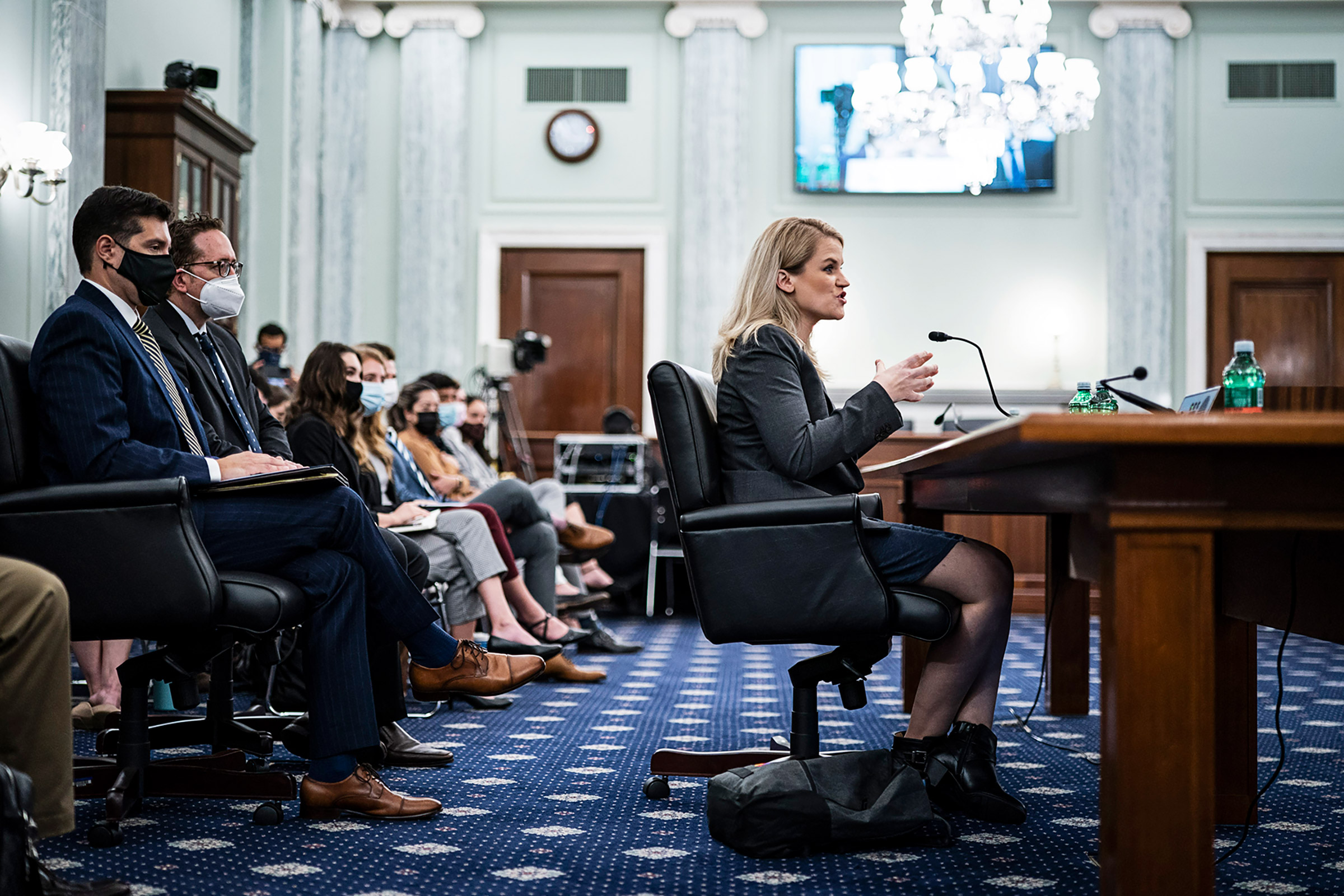

In her Senate testimony in early October, Haugen suggested a federal agency should be set up to oversee social media algorithms so that “someone like me could do a tour of duty” after working at a company like Facebook. But moving to Washington, D.C., to serve at such an agency has no appeal, she says. “I am happy to be one of the people consulted by that agency,” she says. “But I have a life I really like in Puerto Rico.”

Now that her tour of Europe is over, Haugen has had a chance to think about what comes next. Over an encrypted phone call from Puerto Rico a few days after we met in Paris, she says she would like to help build a grassroots movement that supports young people in pushing back against the harms caused by social media companies. In this new task, as seems to be the case with everything in Haugen’s life, she wants to try to leverage the power of education. “I am fully aware that a 19-year-old talking to a 16-year-old will be more effective than me talking to that 16-year-old,” she tells me. “There is a real opportunity for young people to flex their political muscles and demand accountability.”

I ask if she has a message to send to young people reading this. “Hmm,” she says, followed by a long pause. “In every era, humans invent technologies that run away from themselves,” she says. “It’s very easy to look at some of these tech platforms and feel like they are too big, too abstract and too amorphous to influence in any way. But the reality is there are lots of things we can do. And the reason they haven’t done them is because it makes the companies less profitable. Not unprofitable, just less profitable. And no company has the right to subsidize their profits with your health.”

Ironically, Haugen gives partial credit to one of her managers at Facebook for inspiring her thought process around blowing the whistle. After struggling with a problem for a week without asking for help, she missed a deadline. When she explained why, the manager told her he was disappointed that she had hidden that she was having difficulty, she says. “He said, ‘We solve problems together; we don’t solve them alone,’” she says. Never one to miss a teaching opportunity, she continues, “Part of why I came forward is I believe Facebook has been struggling alone. They’ve been hiding how much they’re struggling. And the reality is, we solve problems together, we don’t solve them alone.”

It’s a philosophy that Haugen sees as the basis for how social media platforms should deal with societal issues going forward. In late October, Facebook Inc. (which owns Facebook, WhatsApp and Instagram) changed its name to Meta, a nod to its ambition to build the next generation of online experiences. In a late-October speech, CEO Mark Zuckerberg said he believed the “Metaverse,” the company’s proposed virtual universe, would fundamentally reshape how humans interact with technology. Haugen says she is concerned the Metaverse will isolate people rather than bring them together: “I believe any tech with that much influence deserves public oversight.”

But hers is also a belief system that allows for a path toward redemption. That friend she lost to misinformation? His story has a happy ending. “I learned later that he met a nice girl and he had gone back to church,” Haugen says, adding that he no longer believes in conspiracy theories. “It gives me a lot of hope that we can recover as individuals and as a society. But it involves us connecting with people.” —With reporting by Leslie Dickstein and Nik Popli

Correction, Nov. 22

The original version of this story misstated Pierre Omidyar’s relationship with Whistleblower Aid. He does not directly donate to Whistleblower Aid, but his charitable foundation, Democracy Fund, does contribute to its annual funding.

Write to Billy Perrigo/Paris at billy.perrigo@time.com