Most people would date the beginning of the current AI hype cycle to late 2022, when OpenAI released ChatGPT. For Meredith Whittaker, it began around 2013. She was working at Google at the time, and witnessed from the inside as the company reoriented itself toward machine learning, acquiring DeepMind and offering courses to retrain its engineers. Whittaker controlled a pot of money, and someone pitched her a proposal to build a machine-learning system to predict future genocides. It was at that moment that she had a revelation. “I was like, How do you define genocide?” she recalls asking. “How are you informing the system of what a genocide looks like?” She imagined the harm that could come from feeding a supposedly “intelligent” AI like this with sloppy data. “I was like, What happens when your system tips the scales?”

The experience, which roughly coincided with Edward Snowden’s disclosure that the U.S. government was hoovering up masses of internet-usage data being generated by newly ubiquitous tech companies, sent Whittaker down a rabbit hole. She set to learning about AI, and quickly realized it relied upon huge quantities of data and computing power only accessible to the richest of tech companies. “There was a moment when I recognized that the consolidated resources of the winners of the surveillance business model are those that enabled companies to repackage data-centric, compute-centric technologies as ‘intelligence’ in a way that allows them to infiltrate any market they want,” she says.

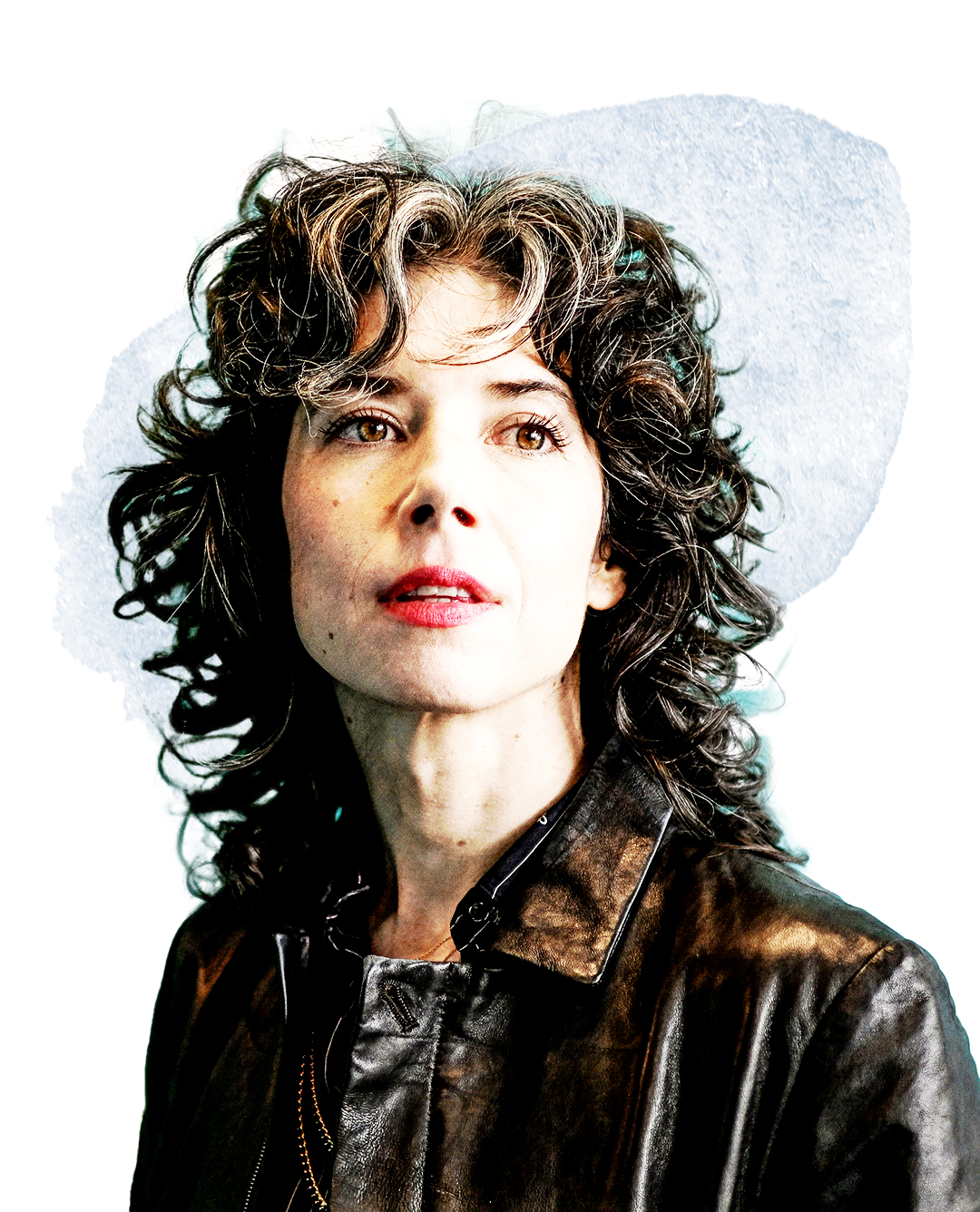

Toward the tail end of her 13-year career at Google, Whittaker helped lead mass internal protests against both alleged workplace sexual harassment and Google’s AI work for the U.S. military. Although Google acceded to employee demands on both issues, Whittaker quit in 2019, saying the company had retaliated against her for her activism and forced her to abandon her AI ethics responsibilities. (Google denied at the time that it had retaliated against Whittaker.) She went on to a stint at the Federal Trade Commission, advising chair Lina Khan on the link between corporate concentration of power and AI harms. Then in 2022 she became the president of the Signal Foundation, which oversees the encrypted private-messaging app Signal.

The job isn’t as unconnected from the world of AI as you might think. Tech companies collect vast quantities of data to train their AI systems, scraping billions of images and words of text from the internet—often without the consent of their creators. That might include your profile pictures, restaurant reviews, tweets, or Wikipedia edits. It also includes the books of thousands of published authors and the work of innumerable professional and amateur artists. “It is fundamentally unethical that the meaning-making, the creative labor, and the work of hundreds of millions of people is being scraped, aggregated, and used to create systems that will undermine the livelihoods and the ecosystem of mutual understanding that we create for each other,” Whittaker says. Today’s AI, she adds, “is dependent on all of the free-to-the-commons labor, billions of hours that are now being concentrated in the hands of a handful of companies that then get to launder that as ‘intelligence’ in ways that are further cementing information and power asymmetries.”

Signal’s encrypted messaging service, which prevents texts and data from being intercepted by anyone except the intended recipients, is one small but symbolic step toward an alternative system. “The Venn diagram of privacy concerns and AI concerns is a circle,” Whittaker tells TIME over an encrypted video call. “Signal is working within the tech industry ecosystem, against it. It is trying to create something that interrupts the data pipelines.”
