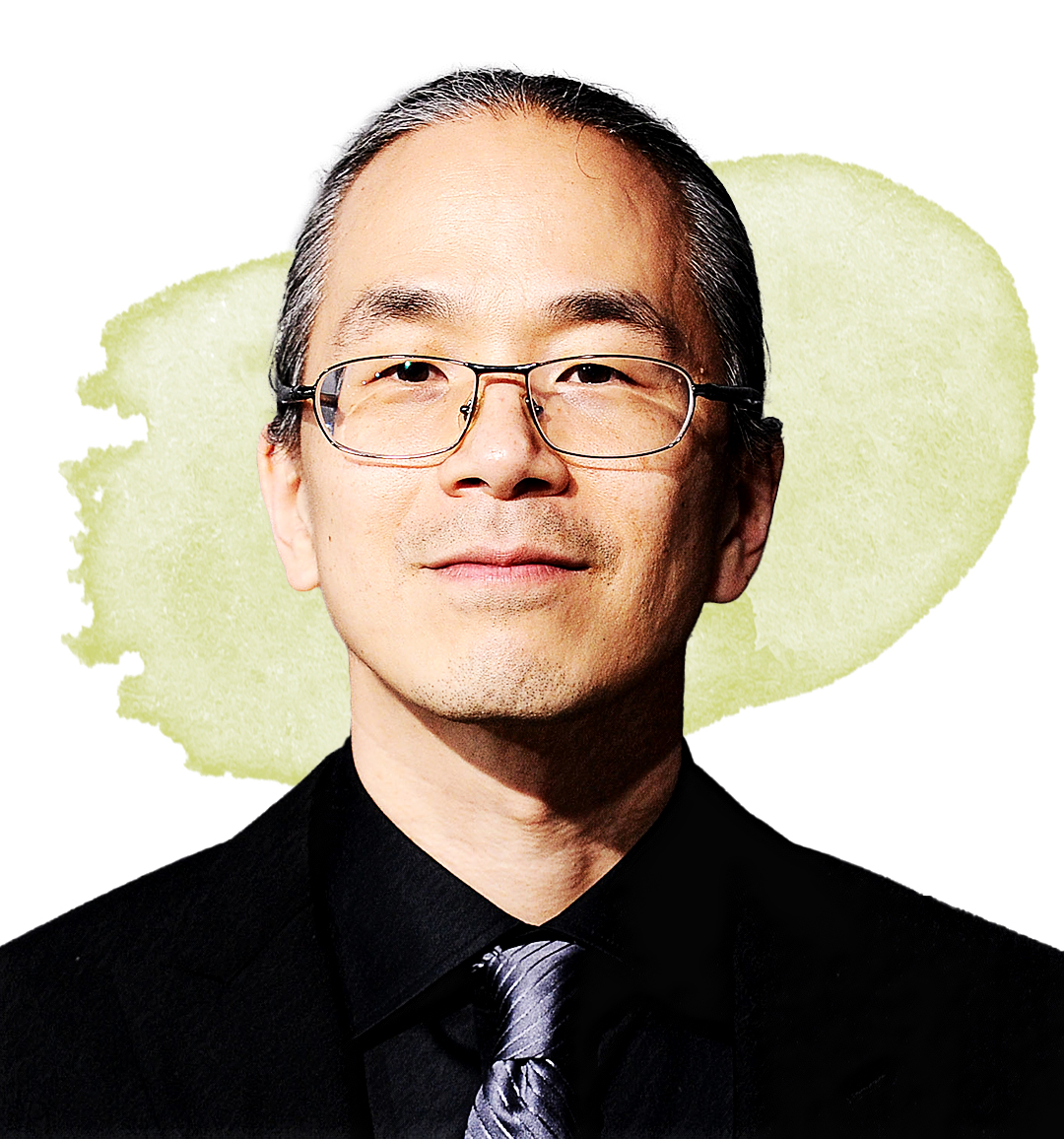

Ted Chiang is perhaps the world’s most celebrated living science-fiction author. His short, carefully hewn stories explore how our inner worlds and our societies would react to unexpected rifts in the fabric of science. How would it feel to receive a hormone injection that drastically improved your cognitive function? What if learning an alien language changed the way you perceived time? And if humanity were to create artificial life, what obligations would we owe it?

Recently, Chiang, 56, has stepped into a new role. In nonfiction pieces for the New Yorker, he has emerged as one of the sharpest critics of AI and the corporations behind it. In one viral piece, he compared ChatGPT to “a blurry jpeg of the web,” arguing that the very technology that makes the app so fluent is the reason for its inability to separate truth from fiction. In another, he took aim at the structures of power that new AI advances both arose from and reinforce. Without structural economic changes, he argued, the rise of AI threatens to worsen wealth inequality, weaken worker power, and fortify a tech oligarchy. “What does progress even mean, if it doesn’t include better lives for people who work?” he wrote. “What is the point of greater efficiency, if the money being saved isn’t going anywhere except into shareholders’ bank accounts?”

In his short but electric stories, Chiang makes every word count. And in a video interview over Zoom in early August, language is a preoccupation. Chiang accepts the need for humanity to use metaphors when talking about new technologies; in our search for meaning, it helps to anchor ourselves in comparisons. His problem is when the metaphors we use mislead us. For instance, when a large language model produces false information, it’s referred to as a “hallucination.” He prefers “confabulation.” Hallucination implies not only the presence of an inner mind, but the capacity of that mind to have sensory experiences. Confabulation, on the other hand, happens when a person unknowingly falsifies information to gloss over a gap in their memory. It’s still a metaphor, but a far better one for how current AI works, he thinks. “Can we stop LLMs from confabulating while still having them generate factual responses of the sort that we want?” Chiang asks. “I’m skeptical, because I don’t think that there are fundamentally different processes at work.”

Like many science-fiction authors, Chiang has written in his short stories about artificial beings with conscious inner worlds. Has this fictional device—so often useful for telling intimate stories about what really makes us human—exacerbated our tendency to see flickers of sentience in AI tools when in reality, no such thing exists? While lots of science fiction has explored the idea of sentient AI, we have few fictional touchstones for the situation we currently find ourselves in, where machines can bewitch us with our own language even while having no executive function. Is there a role for science fiction in popularizing that idea? Chiang is skeptical. “All art is political, and can promote positive political ideas,” he says. “But I don’t want to say science fiction should have any other priorities beyond being good art.”

Chiang also balks at the suggestion that deflating the unlikely tales spun by technologists gives him “any kind of moral authority.” But he says that when he reads some of the more speculative AI risk discourse, he does see more in common with science fiction than fact. “The idea of a computer that can make itself smarter and become super intelligent, that is a really interesting story idea,” he says. “But to hear people talk about it as if it’s something that is actually going to happen, that’s just jarring.”
