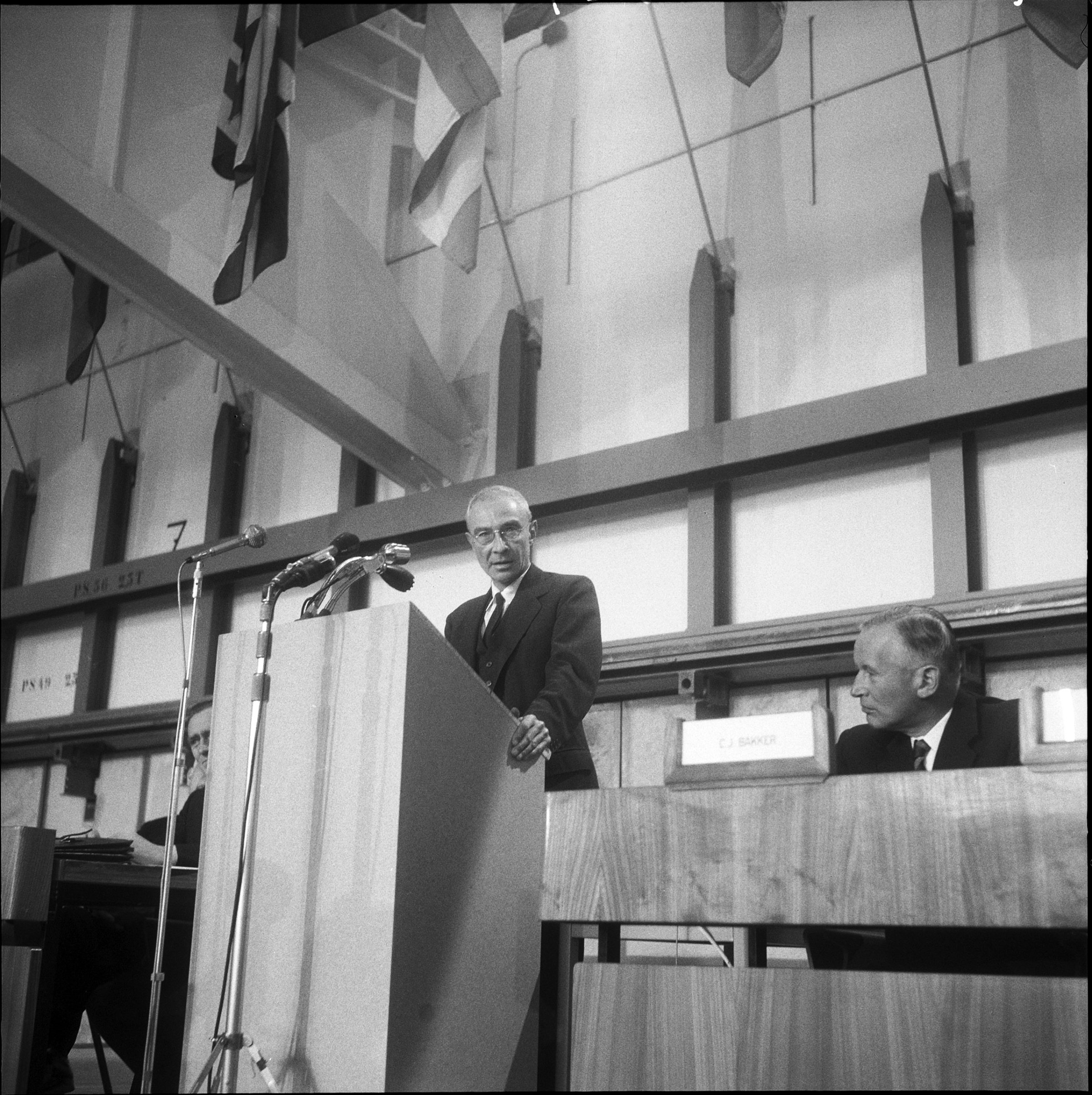

On 16th July 1945 the world changed forever. The Manhattan Project’s ‘Trinity’ test, directed by Robert Oppenheimer, endowed humanity for the first time with the ability to wipe itself out: an atomic bomb had been successfully detonated 210 miles south of Los Alamos, New Mexico.

On 6th August 1945 an atomic bomb was dropped on Hiroshima and, three days later, another on Nagasaki, unleashing unprecedented destructive power. The end of World War II brought a fragile peace, overshadowed by this new, existential threat.

While nuclear technology promised an era of abundant energy, it also launched us into a future where nuclear war could lead to the end of our civilization. The ‘blast radius’ of our technology had grown to a global scale. It was becoming increasingly clear that governing nuclear technology to avoid a global catastrophe required international cooperation, and that robust institutions to do so had to be set up quickly.

In 1952, 11 countries set up CERN and tasked it with “collaboration in scientific [nuclear] research of a purely fundamental nature”, making clear that CERN’s research would be used for the public good. The International Atomic Energy Agency (IAEA) followed in 1957, set up to monitor global stockpiles of uranium and limit proliferation. These institutions, among others, have helped us survive the last 70 years.

We believe that humanity once again faces an increase in the ‘blast radius’ of technology: the development of advanced artificial intelligence, a powerful technology that could annihilate humanity if left unrestrained but, if harnessed safely, could change the world for the better.

The specter of artificial general intelligence

Experts have been sounding the alarm on artificial general intelligence (AGI) development. Distinguished AI scientists and leaders of the major AI companies, including Sam Altman of OpenAI and Demis Hassabis of Google DeepMind, signed a statement from the Center for AI Safety that reads: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.” A few months earlier, another letter calling for a pause in giant AI experiments was signed over 27,000 times, including by Turing Award winners Yoshua Bengio and Geoffrey Hinton.

This is because a small group of AI companies (OpenAI, Google DeepMind, Anthropic) are aiming to create AGI: not just chatbots like ChatGPT, but AIs that are “autonomous and outperform humans at most economic activities”. Ian Hogarth, an investor and now Chair of the UK’s Foundation Model Taskforce, calls these ‘godlike AIs’ and has implored governments to slow down the race to build them. Even the developers of the technology expect great danger from it. Altman, CEO of the company behind ChatGPT, has said that “Development of superhuman machine intelligence (SMI) is probably the greatest threat to the continued existence of humanity.”

World leaders are calling for the establishment of an international institution to deal with the threat of AGI: a ‘CERN for AI’ or an ‘IAEA for AI’. In June, President Biden and U.K. Prime Minister Sunak discussed such an organization. U.N. Secretary-General Antonio Guterres thinks we need one, too. Given this growing consensus for international cooperation in response to the risks from AI, we need to lay out concretely how such an institution might be built.

Would a ‘CERN for AI’ look like MAGIC?

MAGIC (the Multilateral AGI Consortium) would be the world’s only advanced and secure AI facility focused on safety-first research and development of advanced AI. Like CERN, MAGIC would allow humanity to take AGI development out of the hands of private firms and place it in the hands of an international organization with a mandate for safe AI development.

MAGIC would have exclusivity over the high-risk research and development of advanced AI. It would be illegal for other entities to pursue AGI development independently. This would not affect the vast majority of AI research and development; it would apply only to frontier, AGI-relevant research, much as we already handle dangerous R&D in other technologies. Research on engineering lethal pathogens is outright banned or confined to labs with very high biosafety levels, while the vast majority of drug research is simply supervised by regulatory agencies like the FDA.

MAGIC would be concerned only with preventing the high-risk development of frontier AI systems: godlike AIs. Research breakthroughs made at MAGIC would be shared with the outside world only once they had been demonstrated to be safe.

To make sure high-risk AI research remains secure and under strict oversight at MAGIC, a global moratorium on the creation of AIs using more than a set amount of computing power would be put in place. This is similar to how we already manage uranium, the main resource used for nuclear weapons and energy, at the international level.

Free from competitive pressures, MAGIC could ensure the safety and security this transformative technology demands and distribute its benefits to all signatories. CERN offers a precedent for how MAGIC can succeed.

The U.S. and the U.K. are in a perfect position to facilitate this multilateral effort and to launch it following the upcoming Global Summit on Artificial Intelligence in November this year.

Averting existential risk from AGI is daunting, and leaving this challenge to private companies is a very dangerous gamble. We don’t let individuals or corporations develop nuclear weapons for private use, and we shouldn’t allow this to happen with dangerous, powerful AI. We managed not to destroy ourselves with nuclear weapons, and we can secure our future again, but not if we remain idle. We must place advanced AI development in the hands of a new, trusted global institution and create a safer future for everyone.

Post-WWII institutions helped us avoid nuclear war by controlling nuclear development. As humanity faces a new global threat in uncontrolled artificial general intelligence (AGI), we once again need to act to secure our future.

Contact us at letters@time.com