Three hundred and sixty-four days after she lost her job as a co-lead of Google’s ethical artificial intelligence (AI) team, Timnit Gebru is nestled into a couch at an Airbnb rental in Boston, about to embark on a new phase in her career.

Google hired Gebru in 2018 to help ensure that its AI products did not perpetuate racism or other societal inequalities. In her role, Gebru hired prominent researchers of color, published several papers that highlighted biases and ethical risks, and spoke at conferences. She also began raising her voice internally about her experiences of racism and sexism at work. But it was one of her research papers that led to her departure. “I had so many issues at Google,” Gebru tells TIME over a Zoom call. “But the censorship of my paper was the worst instance.”

In that fateful paper, Gebru and her co-authors questioned the ethics of large AI language models, which seek to understand and reproduce human language. Google is a world leader in AI, an industry forecast to contribute $15.7 trillion to the global economy by 2030, according to accounting firm PwC. But Gebru’s paper suggested that, in their rush to build bigger, more powerful language models, companies including Google weren’t stopping to think about the kinds of biases being built into them: biases that could entrench existing inequalities rather than help solve them. It also raised concerns about the environmental impact of these models, which use huge amounts of energy. In the battle for AI dominance, Big Tech companies were seemingly prioritizing profits over safety, the authors suggested, calling for the industry to slow down. “It was like, You built this thing, but mine is even bigger,” Gebru recalls of the atmosphere at the time. “When you have that attitude, you’re obviously not thinking about ethics.”

Gebru’s departure from Google set off a firestorm in the AI world. The company appeared to have forced out one of the world’s most respected ethical AI researchers after she criticized some of its most lucrative work. The backlash was fierce.

The dispute didn’t just raise concerns about whether corporate behemoths like Google’s parent Alphabet could be trusted to ensure this technology benefited humanity and not just their bottom lines. It also brought attention to important questions: If artificial intelligence is trained on data from the real world, who loses out when that data reflects systemic injustices? Were the companies at the forefront of AI really listening to the people they had hired to mitigate those harms? And, in the quest for AI dominance, who gets to decide what kind of collateral damage is acceptable?

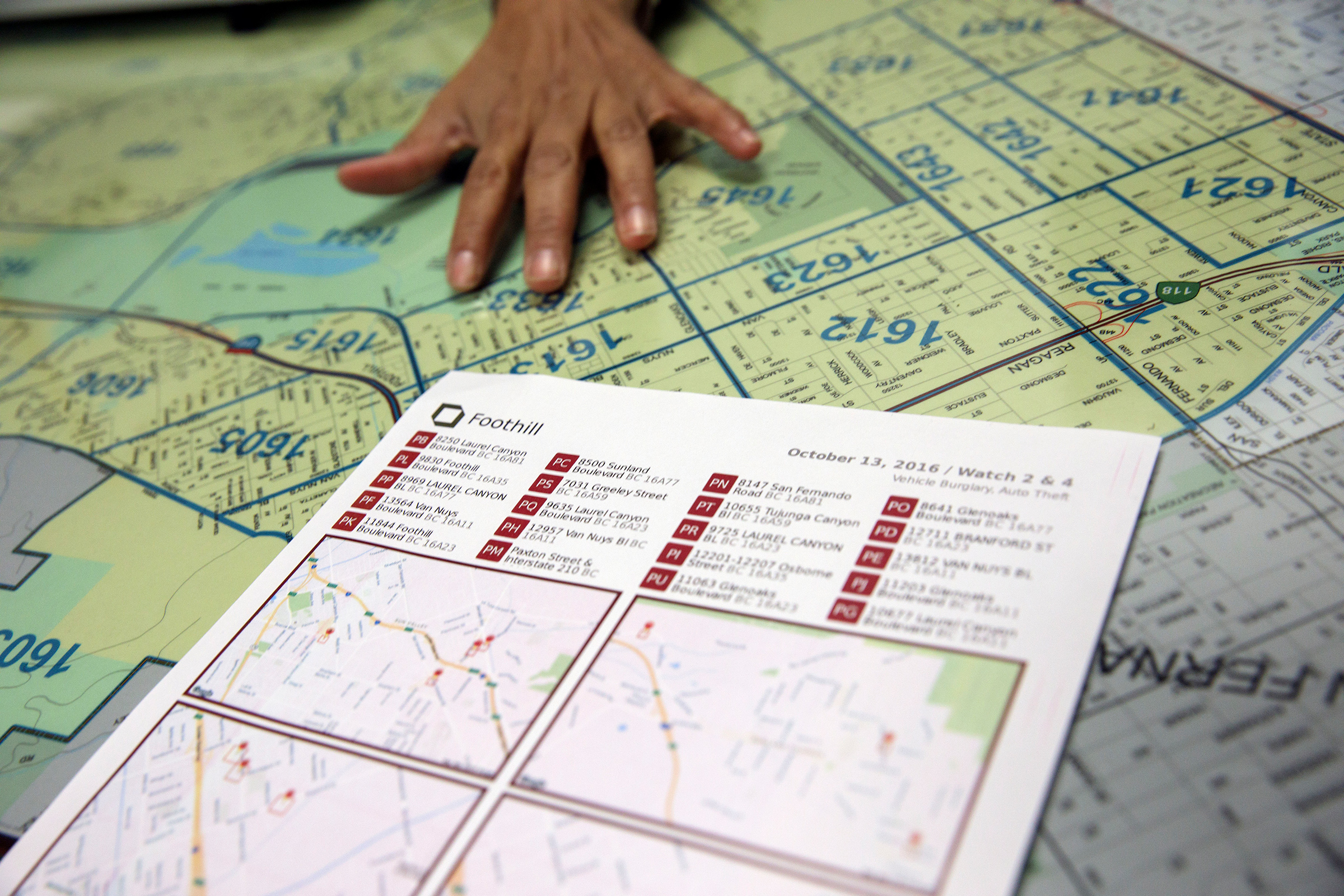

For the past decade, AI has been quietly seeping into daily life, from facial recognition to digital assistants like Siri or Alexa. These largely unregulated uses of AI are highly lucrative for those who control them, but are already causing real-world harms to those who are subjected to them: false arrests; health care discrimination; and a rise in pervasive surveillance that, in the case of policing, can disproportionately affect Black people and disadvantaged socioeconomic groups.

Gebru is a leading figure in a constellation of scholars, activists, regulators and technologists collaborating to reshape ideas about what AI is and what it should be. Some of her fellow travelers remain in Big Tech, mobilizing those insights to push companies toward AI that is more ethical. Others, making policy on both sides of the Atlantic, are preparing new rules to set clearer limits on the companies benefiting most from automated abuses of power. Gebru herself is seeking to push the AI world beyond the binary of asking whether systems are biased and to instead focus on power: who’s building AI, who benefits from it, and who gets to decide what its future looks like.

The day after our Zoom call, on the anniversary of her departure from Google, Gebru launched the Distributed AI Research (DAIR) Institute, an independent research group she hopes will grapple with how to make AI work for everyone. “We need to let people who are harmed by technology imagine the future that they want,” she says.

When Gebru was a teenager, war broke out between Ethiopia, where she had lived all her life, and Eritrea, where both of her parents were born. It became unsafe for her to remain in Addis Ababa, the Ethiopian capital. After a “miserable” experience with the U.S. asylum system, Gebru finally made it to Massachusetts as a refugee. Immediately, she began experiencing racism in the American school system, where even as a high-achieving teenager she says some teachers discriminated against her, trying to prevent her from taking certain AP classes. Years later, it was a pivotal experience with the police that put her on the path toward ethical technology. She recalls calling the cops after her friend, a Black woman, was assaulted in a bar. When they arrived, the police handcuffed Gebru’s friend and later put her in a cell. No report of the assault was ever filed, she says. “It was a blatant example of systemic racism.”

While Gebru was a Ph.D. student at Stanford in the early 2010s, tech companies in Silicon Valley were pouring colossal amounts of money into a previously obscure field of AI called machine learning. The idea was that with enough data and processing power, they could teach computers to perform a wide array of tasks, like speech recognition, identifying a face in a photo or targeting people with ads based on their past behavior. For decades, most AI research had relied on hard-coded rules written by humans, an approach that could never cope with such complex tasks at scale. But by feeding computers enormous amounts of data—now available thanks to the Internet and smartphone revolutions—and by using high-powered machines to spot patterns in those data, tech companies became enamored with the belief that this method could unlock new frontiers in human progress, not to mention billions of dollars in profits.

In many ways, they were right. Machine learning became the basis for many of the most lucrative businesses of the 21st century. It powers Amazon’s recommendation engines and warehouse logistics and underpins Google’s search and assistant functions, as well as its targeted advertising business. It also promises to reshape the future, offering tantalizing prospects like AI lawyers who could give affordable legal advice, AI doctors who could diagnose patients’ ailments within seconds, or even AI scientists.

By the time she left Stanford, Gebru knew she wanted to use her new expertise to bring ethics into this field, which was dominated by white men. She says she was influenced by a 2016 ProPublica investigation into algorithmic risk assessment in the criminal justice system, which detailed how courtrooms across the U.S. were adopting software that claimed to predict the likelihood of defendants reoffending, to advise judges during sentencing. By comparing the software’s predictions with defendants’ actual reoffending rates, ProPublica found that the AI was not only often wrong, but also dangerously biased: it was more likely to rate Black defendants who did not reoffend as “high risk” than white defendants who did not reoffend, and more likely to rate white defendants who went on to reoffend as “low risk.” The results showed that when an AI system is trained on historical data that reflects inequalities, as most data from the real world does, the system will project those inequalities into the future.
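The kind of comparison ProPublica describes comes down to measuring a tool's mistakes separately for each group: how often people who did not reoffend were labeled high risk (false positives), and how often people who did reoffend were labeled low risk (false negatives). The minimal Python sketch below illustrates that idea. The records are invented toy data, not ProPublica's, and the helper `error_rates_by_group` is a hypothetical name chosen for illustration.

```python
from collections import defaultdict

# Hypothetical toy records, NOT ProPublica's data:
# (group, labeled_high_risk, actually_reoffended)
records = [
    ("A", True, False), ("A", True, False), ("A", False, False),
    ("A", True, True), ("A", False, True),
    ("B", False, False), ("B", False, False), ("B", True, False),
    ("B", False, True), ("B", False, True), ("B", True, True),
]

def error_rates_by_group(rows):
    """Return {group: (false_positive_rate, false_negative_rate)}."""
    counts = defaultdict(lambda: {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
    for group, high_risk, reoffended in rows:
        c = counts[group]
        if reoffended:
            c["pos"] += 1
            if not high_risk:
                c["fn"] += 1  # went on to reoffend but was labeled low risk
        else:
            c["neg"] += 1
            if high_risk:
                c["fp"] += 1  # did not reoffend but was labeled high risk
    return {
        g: (c["fp"] / c["neg"] if c["neg"] else 0.0,
            c["fn"] / c["pos"] if c["pos"] else 0.0)
        for g, c in counts.items()
    }

for group, (fpr, fnr) in sorted(error_rates_by_group(records).items()):
    print(group, round(fpr, 2), round(fnr, 2))
```

On this made-up data, group A's non-reoffenders are wrongly flagged as high risk twice as often as group B's, while group B's reoffenders are more often waved through as low risk: the same two-sided pattern the investigation reported, which a single overall accuracy figure would hide.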

When she read the story, Gebru thought about not only her own experience with police, but also the overwhelming lack of diversity in the AI world that she had experienced so far. Shortly after attending a conference in 2015, where she was one of only a few Black attendees, she put her thoughts into words in an article that she never published. “I am very concerned about the future of AI,” she wrote. “Not because of the risk of rogue machines taking over. But because of the homogeneous, one-dimensional group of men who are currently involved in advancing the technology.”

By 2017, Gebru was an AI researcher at Microsoft, where she co-authored a paper called Gender Shades. It demonstrated how facial-analysis systems developed by IBM and Microsoft were almost perfect at classifying the gender of lighter-skinned men, but far less accurate for people with darker skin, particularly Black women. The data sets used to train such systems contained lots of images of white men, but very few of Black women. The research, which Gebru had worked on alongside Joy Buolamwini of MIT Media Lab, forced IBM and Microsoft to update their data sets.

Google hired Gebru shortly after Gender Shades was published, at a time when Big Tech companies were coming under increasing scrutiny over the ethical credentials of their AI research. While Gebru was interviewing, a group of Google employees were protesting the company’s agreement with the Pentagon to build AI systems for weaponized drones. Google eventually canceled the contract, but several employees who were involved in worker activism in the wake of the protests say they were later fired or forced out. Gebru had reservations about joining Google, but believed she could have a positive impact. “I went into Google with my eyes wide open in terms of what I was getting into,” she says. “What I thought was, This company is a huge ship, and I won’t be able to change its course. But maybe I’ll be able to carve out a small space for people in various groups who should be involved in AI, because their voices are super important.”

After a couple of years on the job, Gebru had realized that publishing research papers was more effective at bringing about change than trying to convince her superiors at Google, whom she often found to be intransigent. So when co-workers began asking her questions about the ethics of large language models, she decided to collaborate on a paper about them. In the year leading up to that decision, the hype around large language models had led to a palpable sense of enthusiasm across Silicon Valley. In a stunt in September 2020, the Guardian published an op-ed written by a large language model called GPT-3, built by the Microsoft-backed company OpenAI. “A robot wrote this entire article. Are you scared yet, human?” asked the headline. Investment was flooding into tech firms’ AI research teams, all of which were competing to build models based on ever bigger data sets.

To Gebru and her colleagues, the enthusiasm around language models was leading the industry in a worrying direction. For starters, they knew that despite appearances, these AIs were nowhere near sentient. The paper compared the systems to “stochastic parrots” that were simply very good at repeating combinations of words from their training data. This meant they were especially susceptible to bias. Part of the problem was that in the race to build ever bigger data sets, companies had begun to build programs that could scrape text from the Internet to use as training data. “This means that white supremacist and misogynistic, ageist, etc., views are overrepresented,” Gebru and her colleagues wrote in the paper. At its core was the same maxim that had underpinned Gebru and Buolamwini’s facial-recognition research: if you train an AI on biased data, it will give you biased results.

The paper that Gebru and her colleagues wrote is now “essentially canon” in the field of responsible AI, according to Rumman Chowdhury, the director of Twitter’s machine-learning ethics, transparency and accountability team. She says it cuts to the core of the questions that ethical AI researchers are attempting to get Big Tech companies to reckon with: “What are we building? Why are we building it? And who is it impacting?”

But Google’s management was not happy. After the paper was submitted for an internal review, Gebru was contacted by a vice president, who told her that the company had issues with it. Gebru says Google initially gave vague objections, including that the paper painted too negative a picture of the technology. (Google would later say the research did not account for safeguards that its teams had built to protect against biases, or its advancements in energy efficiency. The company did not comment further for this story.)

Google asked Gebru to either retract the paper or remove from it her name and those of her Google colleagues. Gebru says she replied in an email saying that she would not retract the paper, and would remove the names only if the company came clean about its objections and who exactly had raised them—otherwise she would resign after tying up loose ends with her team. She then emailed a group of women colleagues in Google’s AI division separately, accusing the company of “silencing marginalized voices.” On Dec. 2, 2020, Google’s response came: it could not agree to her conditions, and would accept her resignation. In fact, the email said, Gebru would be leaving Google immediately because her message to colleagues showed “behavior that is inconsistent with the expectations of a Google manager.” Gebru says she was fired; Google says she resigned.

In an email to staff after Gebru’s departure, Jeff Dean, the head of Google AI, attempted to reassure concerned colleagues that the company was not turning its back on ethical AI. “We are deeply committed to continuing our research on topics that are of particular importance to individual and intellectual diversity,” he wrote. “That work is critical and I want our research programs to deliver more work on these topics—not less.”

Today, the idea that AI can encode the biases of human society is not controversial. It is taught in computer science classes and accepted as fact by most AI practitioners, even at Big Tech companies. But to some who are of the same mind as Gebru, it is only the first epiphany in a much broader, and more critical, worldview. The central point of this burgeoning school of thought is that the problem with AI is not only the ingrained biases in individual programs, but also the power dynamics that underpin the entire tech sector. In an economy where the founders of platforms like Amazon, Google and Facebook have amassed more wealth than nearly anybody else in human history, proponents of this belief see AI as just the latest and most powerful in a sequence of tools wielded by capitalist elites to consolidate their wealth, cannibalize new markets, and penetrate ever more deeply into the private human experience in pursuit of data and profit.

To others in this emerging nexus of resistance, Gebru’s ouster from Google was a sign. “Timnit’s work has pretty unflinchingly pulled back the veil on some of these claims, that are fundamental to these companies’ projections, promises to their boards and also to the way they present themselves in the world,” says Meredith Whittaker, a former researcher at Google who resigned in 2019 after helping lead worker resistance to its cooperation with the Pentagon. “You saw how threatening that work was, in the way that Google treated her.”

Whittaker was recently appointed as a senior adviser on AI to the Federal Trade Commission (FTC). “What I am concerned about is the capacity for social control that [AI] gives to a few profit-driven corporations,” says Whittaker, who was not speaking in the capacity of her FTC role. “Their interests are always aligned with the elite, and their harms will almost necessarily be felt most by the people who are subjected to those decisions.”

Big Tech could not disagree more with this viewpoint, but European regulators are paying attention to it. The E.U. is currently scrutinizing a wide-ranging draft AI act. If passed, it could restrict forms of AI that lawmakers deem harmful, including real-time facial recognition, although activists say it doesn’t go far enough. Several U.S. cities, including San Francisco, have already implemented facial-recognition bans. Gebru has spoken in favor of regulation that defines what kind of uses of AI are unacceptable, and sets better guardrails for those that remain. She recently told European lawmakers scrutinizing the new bill: “The No. 1 thing that would safeguard us from unsafe uses of AI is curbing the power of the companies who develop it.”

She added that increasing legal protections for tech workers was an essential part of making sure companies did not create harmful AI, because workers are often the first line of defense, as in her case. Progress is being made on this front too. In October 2021, California enacted the Silenced No More Act, which prevents companies from using NDAs to silence employees who speak out about harassment or discrimination. In January 2021, hundreds of Google workers unionized for the first time. In the fall, Facebook whistle-blower Frances Haugen disclosed thousands of pages of internal documents to authorities, seeking whistle-blower protection under federal law.

Gebru sees her research institute DAIR as another organ within this wider push toward tech that is socially responsible, putting the needs of communities ahead of the profit incentive and everything that comes with it. At DAIR, Gebru will work with researchers around the world across multiple disciplines to examine the outcomes of AI technology, with a particular focus on the African continent and the African diaspora in the U.S. One of DAIR’s first projects will use AI to analyze satellite imagery of townships in South Africa, to better understand legacies of apartheid. DAIR is also working on building an industry-wide standard that could help mitigate bias in data sets, by making it common practice for researchers to write accompanying documentation about how they gathered their data, what its limitations are and how it should (or should not) be used. Gebru says DAIR’s funding model gives it freedom too. DAIR has received $3.7 million from a group of major philanthropic foundations, including Ford, MacArthur and Open Society. It’s a novel way of funding AI research, with few ties to the system of Silicon Valley money and patronage that often decides which areas of research are worthy of pursuit, not only within Big Tech companies, but also within the academic institutions to which they give grants.

Even though DAIR will be able to conduct only a small handful of studies, and its funding pales in comparison with the money Big Tech is prepared to spend on AI development, Gebru is optimistic. She has already demonstrated the power of being part of a collective of engaged collaborators working together to create a future in which AI benefits not just the rich and powerful. They’re still the underdogs, but the impact of their work is increasing. “When you’re constantly trying to convince people of AI harms, you don’t have the space or time to implement your version of the future,” Gebru says. “So we need alternatives.”

—With reporting by Nik Popli

Write to Billy Perrigo at billy.perrigo@time.com