Hoping to stem the torrent of false cures and conspiracy theories about COVID-19, Facebook announced Thursday it would begin informing users globally who have liked, commented on, or shared “harmful” misinformation about the coronavirus, pointing them instead in the direction of a reliable source.

Facebook hopes the move will drastically reduce the spread of false information about the coronavirus online, a growing crisis that the World Health Organization (WHO) has described as an “infodemic.”

“We want to connect people who may have interacted with harmful misinformation about the virus with the truth from authoritative sources in case they see or hear these claims again,” said Guy Rosen, Facebook’s Vice President for Integrity, in a blog post published early Thursday.

The new policy applies only to false claims related to the coronavirus, but campaigners say the announcement could lay the groundwork for a breakthrough in the battle against political disinformation online. “Facebook applying this to the pandemic is a good first step but this should also be applied to political disinformation too, particularly with the 2020 U.S. election approaching,” says Fadi Quran, a campaign director at Avaaz, a global advocacy group that has lobbied Facebook to “correct the record” on false information since 2018. (In the language of online security, “disinformation” means the coordinated, purposeful spread of false information, while “misinformation” refers to accidental inaccuracies.) “I hope this is going to be expanded to other issues and fast,” Quran tells TIME.

Under Facebook’s new policy, when a piece of “harmful” coronavirus-related misinformation has been debunked by its fact checkers, and removed from the site, all users who have interacted with it will be shown a message in their news feed directing them to the WHO’s list of debunked claims. People who have liked, commented on, or shared Facebook posts saying that drinking bleach can cure COVID-19, or that social distancing does not prevent the disease from spreading, will be among the first to see the new message “in the coming weeks,” Rosen said.

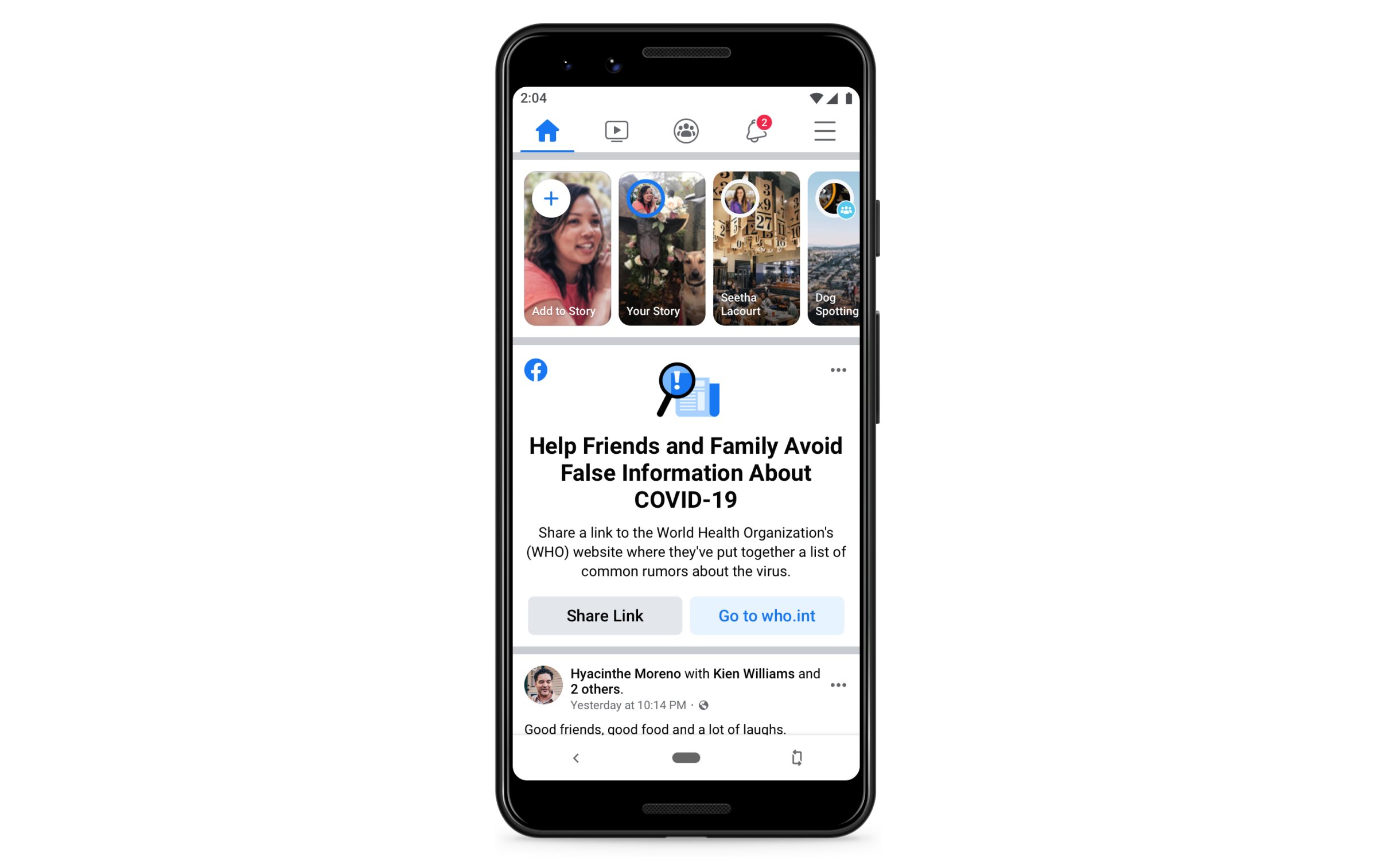

Currently, users who have read the post without “interacting” with it will not be shown corrections. And according to the plans announced Thursday, Facebook’s correction message will not be tailored to the specific piece of misinformation the user has seen; instead, users will be shown a single blanket message reading: “Help friends and family avoid false information about COVID-19” above a link to the WHO website.

However, Facebook is planning to test different ways of showing the messages, according to a spokesperson, leaving open the possibility of more specific corrections and targeting all people who have seen the misinformation. “We believe they should be more specific,” says Christoph Schott, another campaign director at Avaaz. “Facebook has taken the first step of admitting that yes, it actually is the right thing to tell people about factual information. There is now room to go further and we think they should.”

Going further would involve also informing people who have seen misinformation—not just those who have engaged with it, as is the case under the current plans. Schott says Facebook has sophisticated tools, which it lets advertisers make use of, that can tell how long a user has been looking at a piece of content, even if they don’t interact with it. These tools, he says, could be used for the purposes of correcting misinformation as well as increasing Facebook’s ad revenue. “If someone watches a video about how holding their breath for 10 seconds can let you know if you have COVID-19 or not [a debunked false claim circulating online] but doesn’t like it or comment on it or share it, then should that person not be informed? That just doesn’t make any sense to me,” Schott said.

Although activists have welcomed the news, there are still several obstacles preventing Facebook from issuing corrections on political falsehoods in the same way it can for public health ones.

The last four years have shown Facebook is reluctant to crack down on political disinformation. Since Russia attempted to sway the 2016 U.S. election by flooding social media with false news stories, Facebook has moved against foreign interference. But it has made only limited attempts to curb home-grown disinformation, and is especially wary of infringing on First Amendment rights. Nevertheless, the company has still become embroiled in a partisan fight over the matter, with President Trump accusing Facebook (along with Twitter and YouTube) of anti-conservative bias. The controversy led to Facebook announcing in September 2019 that it would not fact-check political ads during the 2020 election, effectively granting political disinformation a free pass, so long as it’s not part of a foreign influence campaign.

Amid political consensus that coronavirus misinformation is dangerous, however, Facebook has been comparatively quick to combat the spread of false information about COVID-19. As conspiracy theories spread in February and March connecting 5G telecoms equipment to the disease, Facebook said it would remove posts calling for 5G masts to be attacked.

Although Avaaz campaigners acknowledge overcoming political opposition will be a large task, they are optimistic that Facebook has conceded that sending corrections is a viable strategy for combating false information. “Our conversations with Facebook, and other social media platforms, began with them saying that correcting the record would be impossible,” says Quran. “And then when we proved that it was possible, the argument became that corrections were ineffective. And then when we did research to prove that corrections are effective, then it became about political controversy.”

The announcement comes after Facebook was presented with a study commissioned by Avaaz showing that users are nearly 50% less likely to believe misinformation on Facebook if they are subsequently shown a notification debunking it. In the study, a representative sample of 2,000 American adults was shown a replica version of a Facebook feed. One group was shown misinformation alone. A second group was shown misinformation and then a correction, prominently displayed in the news feed. A third control group was shown no misinformation. The group that was shown the corrections was 49.4% less likely to believe false information, the researchers from George Washington University and Ohio State University found.

In addition, says Quran, the study showed that people exposed to both misinformation and corrections were better at separating fact from fiction than even the people who were shown no misinformation at all. “This indicates that ‘correct the record’ could make society more resilient,” Quran tells TIME. “Even for people who don’t see misinformation.”

Now, Facebook has said that telling users about fact checks works. According to Rosen’s blog post, 95% of users who were shown warnings about 400,000 pieces of debunked content in March chose not to click through and read the misinformation. Incidentally, these were posts that were not designated as immediately “harmful” by Facebook, meaning they were allowed to remain online. Under Facebook’s policy announced on Thursday, users who have interacted with such posts would not receive a correction in their news feed.

Campaigners at Avaaz say it’s not necessarily a bad thing that false posts not designated as “harmful” are still online, but they believe Facebook should quickly expand its new correction policy to notify users about this kind of misinformation, too. “The most important thing is that you don’t remove the old content, unless it has the capability to cause immediate harm,” Schott tells TIME. “You just go back to people and say, ‘just so you know, on this specific issue, fact checkers have found factually incorrect information.’ It’s about giving people more information. It’s much better than a government just saying, ‘this is fake news, take it down.’”

Write to Billy Perrigo at billy.perrigo@time.com