Tucked away in an office on a quiet Los Angeles street, past hallways chockablock with miniature props and movie posters, is a cavernous motion-capture studio. And in that studio is the National Mall in Washington, D.C., in 1963, on the day Martin Luther King Jr. delivered his “I Have a Dream” speech.

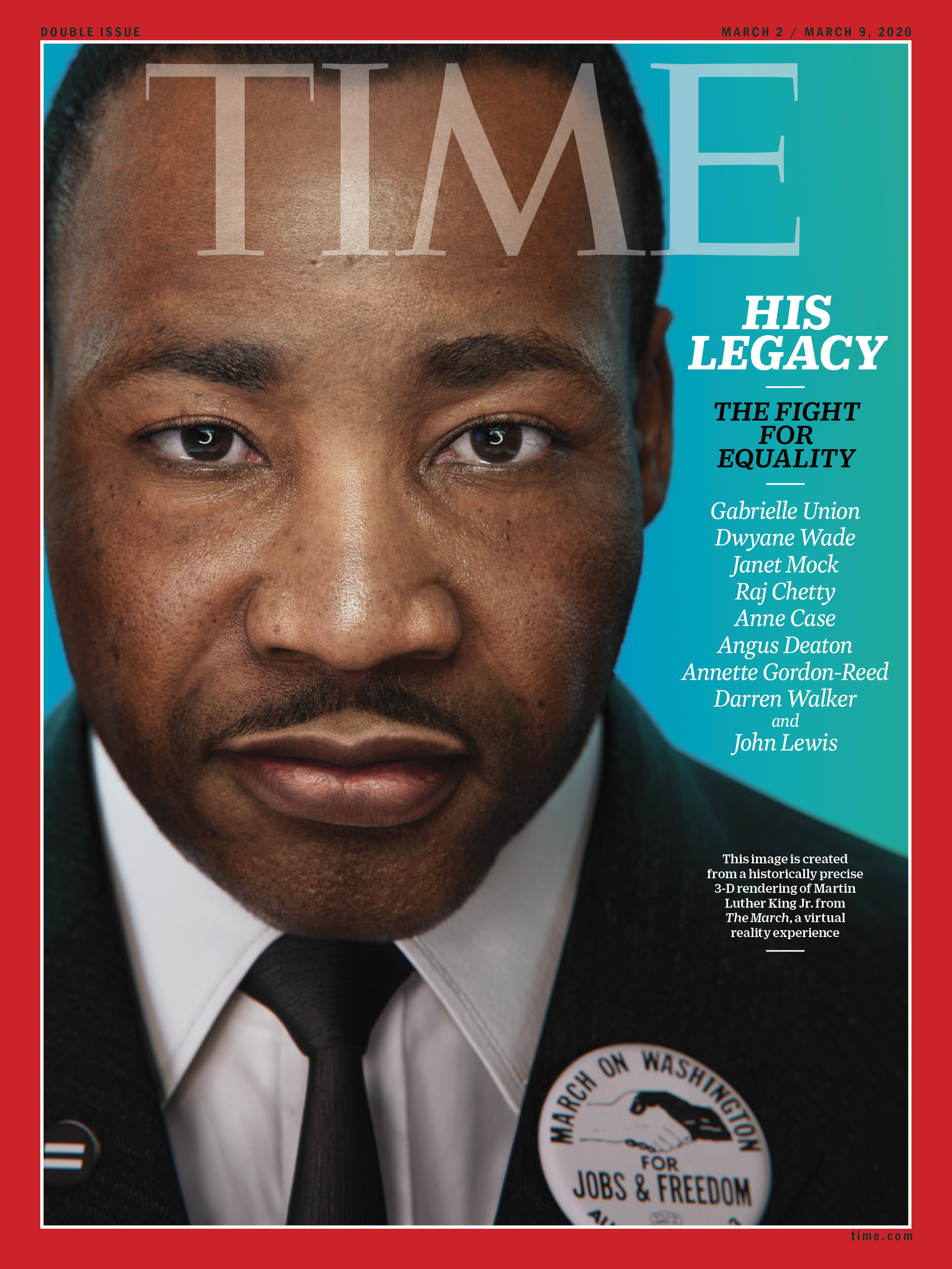

Or rather, it was inside that room that the visual-effects studio Digital Domain captured the expressions, movements and spirit of King, so that he could appear digitally in The March, a virtual reality experience that TIME has produced in partnership with the civil rights leader’s estate. The experience, which is executive-produced and narrated by actor Viola Davis, draws on more than a decade of research in machine learning and human anatomy to create a visually striking re-creation of the country’s National Mall circa 1963, and of King himself.

When work on the project began more than three years ago, a big question needed answering. Was the existing technology capable of accomplishing the project’s goals—not just creating a stunningly realistic digital human, but doing so in a way that met the standards demanded by the subject matter? And Alton Glass, who co-created The March with TIME’s Mia Tramz, points out that another goal was just as key: the creation of what Glass calls a prosthetic memory—something people can use to see a famous historic moment through a different perspective, to surround themselves with those who were willing to make sacrifices in the past for the sake of a more inclusive future. “When you watch these stories, they’re more powerful,” says Glass, “because you’re actually experiencing them instead of reading about them.”

Back in the late ’90s, when Digital Domain used motion-capture footage of stunt performers falling onto airbags to create Titanic’s harrowing scene of passengers jumping from the doomed ship, digitizing those stunts required covering each actor’s body with colorful tape and other markers for reference. To animate faces, an actor’s face would be covered with anywhere from a dozen to hundreds of marker dots, used to map the actor’s features onto a digital double. On that double, those points would be moved manually, frame by frame, to create expressions. That arduous task was essential to avoid falling into the so-called uncanny valley, a term referring to digital or robotic humans that look just wrong enough to be unsettling. The work has gotten easier over the years (the company turned to automation for help making the Avengers baddie Thanos) but remains far from simple.


Calling on the artists behind a fantastical being like Thanos might seem like an unusual choice for a project that needed to be closely matched to real history, but similar know-how is needed, says Peter Martin, CEO of the virtual- and experience-focused creative agency V.A.L.I.S. studio, which partnered with TIME and Digital Domain.

Re-creating the 1963 March on Washington would still stretch the bounds of that experience. For one thing, virtual reality raises its own obstacles. High-end VR headsets that fit over your face achieve their graphical quality via a wired connection to a pricey gaming computer. The March is presented in a museum with high-powered computers, but a wireless option is needed to allow users to more easily move around in that space. It took Digital Domain’s technology director Lance Van Nostrand months to create a system that would solve for wirelessness without compromising quality.

Considering the difficulties of traveling back in time to August 1963, Digital Domain sent a crew to the National Mall and used photogrammetry—a method of extracting measurements and other data from photographs—to digitally map the site of the march. Hours of research went into transforming that data into a vision of the mall from five decades ago, checking the period accuracy of every building, bus or streetlight set to be digitized. Activists who participated in the real march were consulted, as were historians, to help re-create the feeling of being there, and archived audio recordings from that day fleshed out the virtual environment.

And then there was the “I Have a Dream” speech. Generally, to control digital doppelgängers, an actor dons a motion-capture suit along with a head-mounted camera pointed at the face. Where hundreds of dots were once necessary to chart facial movements, today’s real-time face tracking uses computer vision to map a person’s face—in this case, that of motivational speaker Stephon Ferguson, who regularly performs orations of King’s speeches. The digital re-creation of the civil rights leader requires of its audience the same thing Ferguson’s rendition does: a suspension of disbelief and an understanding that, while you may not be seeing the person whose words you’re hearing, this is perhaps the closest you’ll ever get to the feeling of listening to King speak to you.

Even so, it took seven animators nearly three months to perfect King’s movements during the segment of his speech that is included in the experience, working with character modelers to capture his likeness as well as his mannerisms, including his facial tics and saccades—unconscious, involuntary eye movements.

“You cannot have a rubbery Dr. King delivering this speech as though he was in Call of Duty,” says The March’s lead producer, Ari Palitz of V.A.L.I.S. “It needed to look like Dr. King.”

Digital life after death has raised ethical questions before, especially when figures have been used in ways that seemed out of keeping with their real inspirations. King isn’t the first person to be digitally reanimated, and he won’t be the last, so these questions will only become more common, says Jeremy Bailenson, founder of Stanford’s Virtual Human Interaction Lab. “What to do with one’s digital footprint over time has got to be a part of the conversation about one’s estate,” he says. “It is your estate; it is your digital legacy.”

So for The March, though some creative license was taken—the timeline of the day is compressed, for example—every gesture King made had to be based on the truth. Only then would the result be, in its own way, true.

In Los Angeles last December, I put on the headset to see a partially completed version of the entire experience, including a one-on-one with the virtual King, represented as a solitary figure on the steps of the Lincoln Memorial. I gazed at his face in motion, and noticed a mole on his left cheek. It was inconspicuous, the black pinpoint accenting his face. I stepped forward.

When I approached the podium, I was met with a surprise—Dr. King looking right at me. His eyes were piercing, his face a mixture of confidence, austerity and half a million polygons optimized for viewing in a VR headset. He appeared frozen in time, and I found myself without words. Meeting his gaze was more challenging than I’d assumed it would be.

It was then I realized how my view of him had been, for my whole life, flattened. I’d experienced his presence in two dimensions, on grainy film or via big-budget reenactments. How striking to see him, arms outstretched, voice booming in my ears, in three dimensions, all in living color. “This is awesome,” I eked out. He didn’t hear me.

This article is part of a special project about equality in America today. Read more about The March, TIME’s virtual reality re-creation of the 1963 March on Washington and sign up for TIME’s history newsletter for updates.



Write to Patrick Lucas Austin at patrick.austin@time.com