When technical writer and former WWII pilot Jonathan Ferguson changed his gender in 1958, it made the news in Britain. I’ve imagined the moment many times since I first read about it in a paper called “Hacking the Cis-Tem” by scholar Mar Hicks. Ferguson’s name change, according to the U.K.’s Daily Telegraph and Morning Post, was straightforward: someone took a pen and amended a line in the Official Register. In my imagination, it was a fountain pen and written with a flourish, and in that moment Ferguson felt truly seen after years of hiding his true identity. I’m embellishing, but I want it to have been simple and meaningful. These bureaucratic moments, like signing a marriage license or signing the lease on a first apartment, mark life stage transitions.

Ferguson is less well known than other 1950s trans trailblazers like Christine Jorgensen, but that is part of what makes his story so interesting. Hicks offers some tantalizing insights for those of us obsessed with bureaucratic details. Britons at that point had benefits cards that allowed them to access public benefits, and one of the things Ferguson had to do was change his name on his benefits card. Looking at this exact moment in time was an especially smart choice for Hicks, a historian of technology, because 1958 was about the moment when social patterns were becoming embedded in computer code. The decisions made about how to represent gender in code in the ‘50s were efforts (often deliberate) to enforce ideas about gender on society. Curiously, we’re still living with the legacy of those decisions today.

Our modern digital era started in 1951, when the U.S. Census Bureau started using mainframe computers. It wasn’t magic; programmers would take an existing bureaucratic process and implement it computationally. Paper forms and benefits cards became database tables, and those tables became the primary means by which citizens accessed public benefits like healthcare. Ferguson’s benefits card mattered because it was the key to accessing healthcare or other government services. Hicks writes: “The struggle for trans rights in the mainframe era forms a type of prehistory of algorithmic bias: a clear example of how systems were designed and programmed to accommodate certain people and to deny the existence of others.”


Forty years later, when I was learning to program in the ‘90s, I was taught that you translated a paper form into a digital form, and dumped the data into a database. You usually had your last name field, your first name field, and gender field—M or F. “Back then, nobody imagined that gender would need to be an editable field,” one college friend said to me, reflecting on how we were taught. Today, we know better, and many understand that gender is a spectrum. Our computer systems, however, quite often only have two options for gender. Most people think the issue is changing social norms. It is, but it’s also about the way the gender binary is encoded in systems, and how willing engineers are to change those systems. Over and over again, we have seen technical problems arise because the people designing computer systems replicated a rigid, retrograde status quo inherent in the classification systems used by computational infrastructures.
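The paper-form-to-database pattern described above can be sketched in a few lines. This is only an illustration under stated assumptions: the class name `LegacyPersonRecord` and the constant `ALLOWED_GENDERS` are hypothetical, not taken from any real system. It shows how a two-value, uneditable gender field ends up hard-coded into a record.

```python
from dataclasses import dataclass

# Hypothetical: the only two codes the old paper form allowed.
ALLOWED_GENDERS = ("M", "F")


@dataclass(frozen=True)  # frozen=True: fields cannot be edited after creation
class LegacyPersonRecord:
    """Illustrative record that copies a paper form field for field."""
    last_name: str
    first_name: str
    gender: str

    def __post_init__(self):
        # Anything outside the binary is rejected before it is ever stored.
        if self.gender not in ALLOWED_GENDERS:
            raise ValueError(f"gender must be one of {ALLOWED_GENDERS}")
```

In a design like this, a person whose gender is not “M” or “F” cannot be recorded at all, and a person whose recorded gender is wrong cannot correct it without someone rewriting the system.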

“While the gender binary is one of the most widespread classification systems in the world today, it is no less constructed than the Facebook advertising platform or, say, the Golden Gate Bridge,” write Catherine D’Ignazio and Lauren F. Klein in Data Feminism. “The Golden Gate Bridge is a physical structure; Facebook ads are a virtual structure; and the gender binary is a conceptual one. But all these structures were created by people: people living in a particular place, at a particular time, and who were influenced—as we all are—by the world around them.”

All of the popular tech platforms grapple with issues of gender in code. Some offer inclusive options on the surface, but this is not always the case under the hood. At Facebook, for example, the app appears gender-inclusive. Users can choose from three options for gender at signup (male, female, or custom). People who choose the “custom” option can select their preferred pronoun (he, she, or they) and type an additional gender description. However, documentation for Facebook ads reveals that users are sold to advertisers as only male or female. The software literally nullifies any gender beyond the binary.
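To make the idea of “nullifying” concrete, here is a minimal sketch of how a downstream export step could discard a value that the signup form accepted. It is not Facebook’s code; the function name `to_ad_gender` and the category strings are assumptions made purely for illustration.

```python
from typing import Optional


def to_ad_gender(profile_gender: str) -> Optional[str]:
    """Hypothetical export step from a user profile to an ad-targeting record."""
    # Only two categories exist on the advertising side in this sketch.
    binary = {"male": "male", "female": "female"}
    # Any other value, including "custom", has no match and becomes None.
    return binary.get(profile_gender.strip().lower())
```

The interface and the data model disagree: the form accepts the answer, and a later step quietly throws it away.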

Facebook’s parent company, Meta, is not the only Big Tech firm that claims to support the LGBTQIA+ community while falling short on gender inclusivity. For example: face tagging in Google Photos doesn’t work well for trans people. If you are trans, photos from before and after your transition may be identified as different people, or the software will ask if multiple photos of you are of the same person. There’s also no good way to manage being sneak-attacked by photos of your pre-transition self, suggested by the software on your phone. Cara Esten Hurtle wrote of this problem, “Trans people are constantly having to reckon with the fact that the world has no clear idea of who we are; either we’re the same as we used to be, and thus are called the wrong name or gender at every turn, or we’re different, a stranger to our friends and a threat to airport security. There’s no way to win.”

Most of the intellectual history and the dominant social attitudes in the field of computer science can be found in a single, sprawling database published by the Association for Computing Machinery (ACM), the world’s largest educational and scientific computing society. The ACM digital library holds virtually all of the canonical papers from computer science journals and conferences. The earliest mention of gender in the ACM digital library comes from David L. Johnson’s “The Role of the Digital Computer in Mechanical Translation of Languages” in 1958. The paper deals with pronoun matching in translation. For the next 20 years, any mention of gender has to do with translation. In other words, even though sweeping social change happened in the 1960s and 1970s, including the widespread recognition that sex is biological and gender is socially constructed, academic computer science (and, for the most part, the computer industry) pointedly ignored the topic of gender except to think about how a computer might accurately translate gendered pronouns from one language to another. (For the record, gendered pronoun translation remains a poorly solved problem today. As a woman in data science, I routinely get misgendered by Google Translate.)

Computer systems with only two gender options enforce cis-normativity, the assumption that everyone’s gender identity is the same as the sex they were assigned at birth. That enforcement is also a kind of violence against a range of people, including nonbinary, queer, trans, intersex, and gender-nonconforming folks. Being referred to using binary pronouns feels “as though ice is being poured down my back,” said nonbinary teen Kayden Reff of Bethesda, Maryland, in court testimony supporting more inclusive gender options in official state documents.

So the question is: Can systems change? Absolutely, yes. Will systems change? That depends on the organizational imperatives and how people choose to invest in creating change.

In my own practice of allyship, starting with the historical perspective helps me think through what needs to be changed in the modern world. When Ferguson transitioned, he had to fill out paper forms and an official register had to be updated. This is a good way to start thinking through the forms and processes that can be updated to be more inclusive. Today’s electronic forms are easier to update than mass-printed paper forms, so one update is to question whether gender needs to be a category on a form at all. If it is necessary to collect gender, systems should make the field optional. If the field cannot be optional, it should be easy for users to edit and change it privately, instead of requiring a user to call and have a potentially uncomfortable conversation with a stranger on a customer service line. Forms can provide a range of gender identity options beyond “male” or “female”; lists of options are easily available online. Alternatively, gender can be a write-in field rather than a selection from a list.
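The form recommendations above could translate into a data model along these lines. It is a minimal sketch, not a definitive design: the `Profile` class and its `update_gender` method are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Profile:
    """Illustrative profile where gender is optional, write-in, and editable."""
    name: str
    gender: Optional[str] = None  # None means the person chose not to answer

    def update_gender(self, value: Optional[str]) -> None:
        # The user can change or clear the field on their own, with no
        # call to customer service. Free text is accepted as written.
        cleaned = value.strip() if value else None
        self.gender = cleaned or None
```

For example, `Profile("Sam")` starts with no gender recorded, `update_gender("genderfluid")` stores the person’s own wording, and `update_gender("")` clears the field again.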

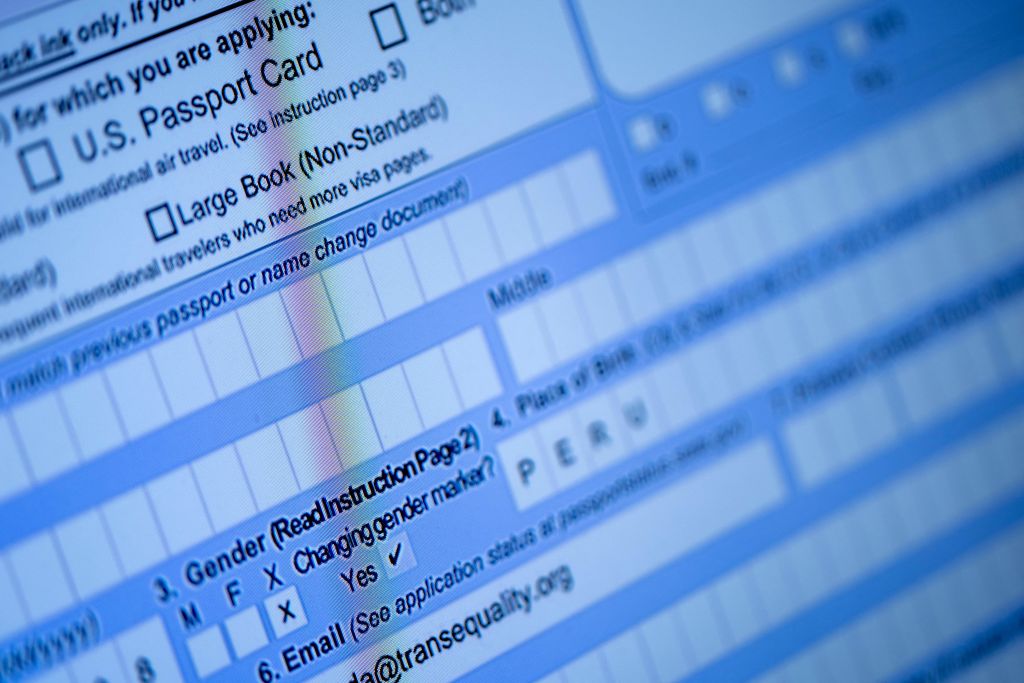

After thinking about forms, the next step is redesigning the systems that the form data goes into. In addition to driver’s license systems, public assistance systems, and passport systems, travel systems need to be updated. The U.S. Transportation Security Administration (TSA), which handles airport security, is a notorious offender when it comes to harassing trans folks. “Transgender people have complained of profiling and other bad experiences of traveling while trans since TSA’s inception and have protested its invasive body scanners since they were first introduced in 2010,” Harper Jean Tobin, director of policy at the National Center for Transgender Equality, told ProPublica. TSA’s full body scanners are programmed with binary representations of gender; some have a pink and a blue button that the agent is supposed to press when a person walks through. Anyone whose gender presentation doesn’t match the image expected in the invasive full body scan is flagged. Trans travelers have been forced to strip, endure invasive pat-downs, and out themselves, and some have been prevented from getting on their flights.

“My heartbeat speeds up slightly as I near the end of the line, because I know that I’m almost certainly about to experience an embarrassing, uncomfortable, and perhaps humiliating search by a [TSA] officer, after my body is flagged as anomalous by the millimeter wave scanner,” Sasha Costanza-Chock writes in their book Design Justice of their experience passing through the Detroit Metro Airport. “I know that this is almost certainly about to happen because of the particular sociotechnical configuration of gender normativity . . . that has been built into the scanner, through the combination of user interface (UI) design, scanning technology, binary-gendered body-shape data constructs, and risk detection algorithms, as well as the socialization, training, and experience of the TSA agents.” Trans travelers are not the only ones left out of scanners’ programming: Black women’s hair, Sikh turbans, prostheses, and headscarves commonly trigger these poorly designed systems as well.

Amid today’s hype about AI and the overblown promises about the future of technology, it’s important to keep in mind how computers actually work, and, perhaps more importantly, where our technology still has some work to do. Computer systems are sociotechnical, meaning they encode social norms inside their technical architecture. The real challenge ahead is in making our current and future technological systems more inclusive while avoiding the mistakes and biases of the past.

Adapted from previous work, including MORE THAN A GLITCH: CONFRONTING RACE, GENDER, AND ABILITY BIAS IN TECH by Meredith Broussard. Copyright 2023. Reprinted with permission from The MIT Press.
