The debate over artificial intelligence has divided some of Silicon Valley’s brightest minds.

Companies like Google, Microsoft and Amazon are embracing AI, integrating it into their core products. Larry Page, CEO of Google parent company Alphabet, argued in 2014 that AI could bring economic benefits. “When we have computers that can do more and more jobs, it’s going to change how we think about work,” Page told The Financial Times. “There’s no way around that.”

Other major industry figures warn that artificial intelligence could spin out of control. Elon Musk, CEO of Tesla Motors and SpaceX, once said AI could pose the “biggest existential threat” to mankind. Philanthropist and Microsoft cofounder Bill Gates said he’s “concerned” about the development of superintelligent machines.

Yet the technology continues to advance rapidly. Last month, Google’s AlphaGo bot became the first computer system to defeat a professional player of Go, a notoriously difficult game for AI to crack. Many experts had considered the feat at least a decade away. Earlier this week, Dag Kittlaus, an entrepreneur behind Siri, demonstrated an even more sophisticated AI-powered assistant.

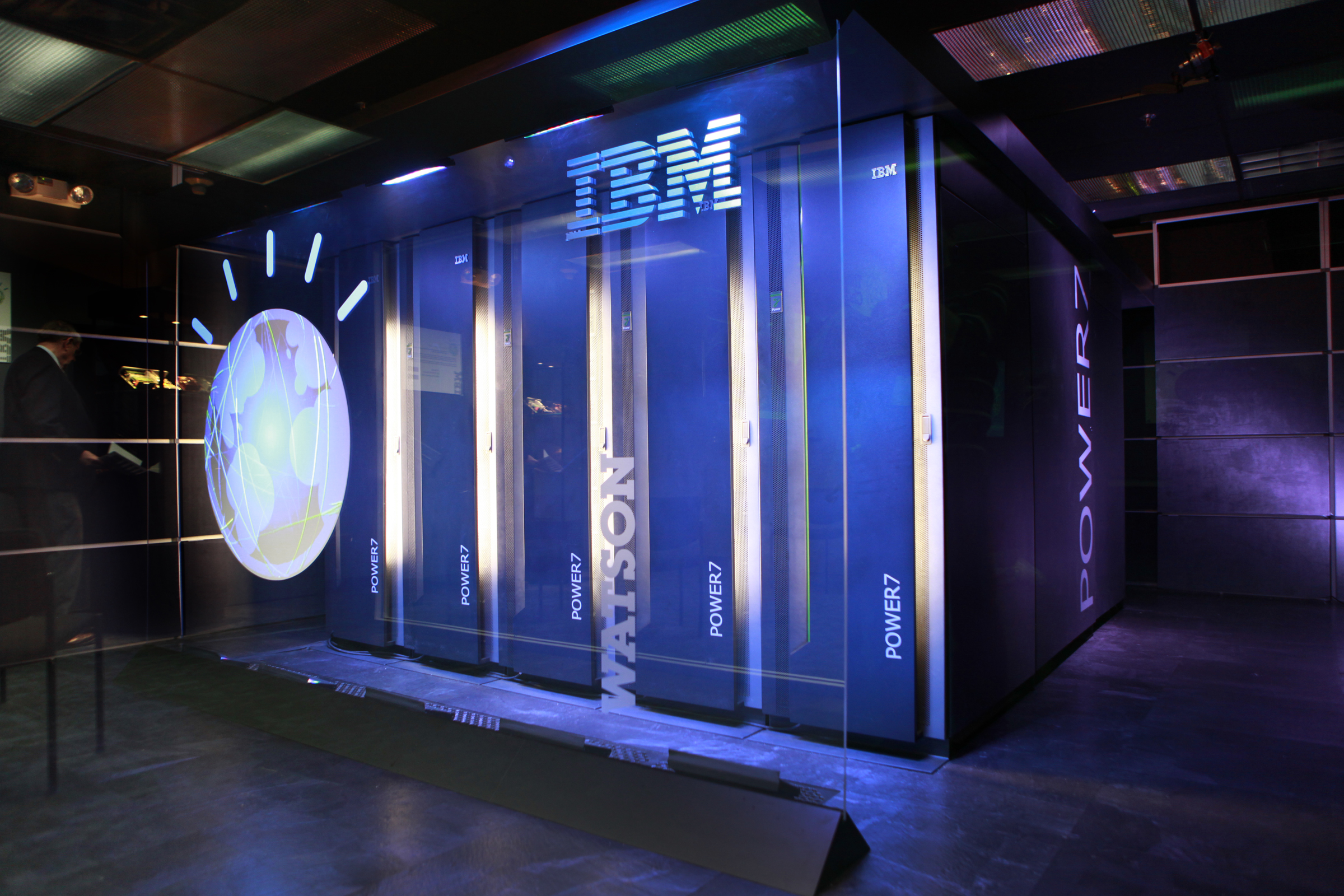

Murray Campbell, a research scientist and senior manager with IBM, doesn’t think we have reason to worry about artificial intelligence in the near term. Campbell has studied AI for decades, ever since he was recruited in 1989 to help develop Deep Blue, the IBM computer famous for defeating former World Chess Champion Garry Kasparov. His current work in the company’s Cognitive Computing division examines artificially intelligent approaches to reasoning, planning, and decision making, and he regularly collaborates with the Watson team.

Watson is famous for winning the television game show Jeopardy! in 2011. Today, IBM is hoping third-party developers will use Watson’s cognitive system to analyze images, understand speech, crunch huge amounts of data and more. To pull that off, Campbell says, computers need to learn how to truly participate in conversations rather than just answer questions.

TIME spoke with Campbell to learn what it will be like to have a real conversation with a computer, whether we should fear the idea of robots taking our jobs, and more. Our conversation has been edited for length and clarity.

What is it going to be like to have a real conversation with a computer?

CAMPBELL: When was the last time somebody walked into your office and posed a perfectly well-formed unambiguous question that had all of the information in it required to give a perfectly formed, unambiguous answer? It just doesn’t happen in the real world. And so what happens is, there’s information exchanged. There are some things that are ambiguous or unclear, people will ask questions to try and clarify like “What did you mean by that?” or “You didn’t mention this,” etc.

And if you have to script that all out in a dialogue system for a computer to do this, there are just so many ways that a conversation can go that you can never really do it. So you really have to have a learning approach, where a system learns to do this over time. So that’s where we’re focusing.

It will begin to learn what you mean by certain things when you say them. And if it doesn’t understand what you mean it will ask, rather than just blindly doing what you say. So as we think about computers and people working more closely in the coming years, and we definitely believe that’s going to happen, the natural way of interacting is through dialogue.

How does a computer actually comprehend and answer a question?

CAMPBELL: When you ask the question, there are lots of [natural language processing] techniques and machine learning techniques that are applied to parse that question into its pieces, and then start coming up with hypotheses of what the answer could be by searching through a large corpus. It could be Wikipedia, it could be The New York Times archives, it could be anything.

And as these hypotheses start to form, there’s evidence that will be found. Some in favor of one answer, some in favor of another answer. Some may be showing that a particular answer is bad. So all of this evidence accumulates and is brought together, again using a machine learning approach to decide which sources to trust the most, which evidence is the most convincing, and then come up with an answer. Not just an answer, however, but a confidence in that answer. So that if it’s not certain, you at least know that the answer you’re being provided is more or less a guess, whereas if it’s certain you can rely on it more.
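
The evidence-weighing process Campbell describes can be illustrated with a minimal Python sketch. It is not Watson’s actual implementation: the function names, the hand-set trust weights, and the softmax used to turn scores into a confidence are all assumptions made for illustration (Campbell says the real system learns which sources to trust).

```python
import math
from collections import defaultdict


def rank_answers(evidence, source_trust):
    """Rank candidate answers by trust-weighted evidence.

    evidence: list of (candidate, source, score) tuples; a positive score
    supports the candidate, a negative score counts against it.
    source_trust: hypothetical hand-set weights per source (0.0 to 1.0).
    Returns a list of (candidate, confidence) pairs, best first.
    """
    totals = defaultdict(float)
    for candidate, source, score in evidence:
        # Unknown sources get a neutral weight of 0.5 (an assumption).
        totals[candidate] += source_trust.get(source, 0.5) * score
    if not totals:
        return []
    # Softmax turns the accumulated scores into confidences that sum to 1.
    peak = max(totals.values())
    weights = {c: math.exp(t - peak) for c, t in totals.items()}
    norm = sum(weights.values())
    return sorted(
        ((c, w / norm) for c, w in weights.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )


def answer(evidence, source_trust, threshold=0.6):
    """Return (best answer, confidence, label), flagging low-confidence guesses."""
    ranked = rank_answers(evidence, source_trust)
    if not ranked:
        return None, 0.0, "no answer"
    best, confidence = ranked[0]
    label = "confident" if confidence >= threshold else "guess"
    return best, confidence, label


# Made-up evidence for the question "What is the capital of France?"
evidence = [
    ("Paris", "encyclopedia", 2.0),
    ("Paris", "news", 1.0),
    ("Lyon", "forum", 1.0),
    ("Lyon", "encyclopedia", -1.0),  # evidence that this answer is bad
]
source_trust = {"encyclopedia": 0.9, "news": 0.7, "forum": 0.2}

print(answer(evidence, source_trust))
```

The point of the sketch is the shape of the output: an answer paired with a confidence, so that a low-confidence result can be presented as a guess rather than as a fact.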

I know you’ve also had some thoughts about the Go victory. Can you expand on that? How big a milestone for AI is this really?

CAMPBELL: So I think it is a big milestone. It was the last standing traditional board game that hadn’t been conquered by a computer. I think in a sense it’s now the end of an era. There won’t be as much research on board games going forward.

I think it’s more important to move towards messier kinds of problems that have factors like uncertainty involved. There’s some information that you don’t get to see, unlike in Go or in Chess, where everything you need to know is right there in front of you if you can just figure it out.

But in the real world there’s a lot of information that you just don’t get to see and you still have to make a decision in spite of that fact. Or there may be some information that you see but isn’t reliable and you have to know how much to trust it. And in the real world you have to deal with language too.

So what’s an example of a real-world scenario that you’d like to see AI conquer?

CAMPBELL: I think most of the video games that people play provide really great test beds for exploring future AI technology. They require perception because there’s visual input, they often require some kind of language, and there are many possible actions that can be taken. So it’s a step towards the real world.

But the real world is the real world. So you can imagine health care applications where a doctor is meeting with a patient, and is trying to decide what the appropriate course of action is. And there’s so much information out there in the world that might be relevant for this particular patient, but who has time to look through it all?

So if you had a cognitive assistant that could go out and look through all the information, compare this patient with other patients and look at what course of treatment they had . . . and then provide that information to the physician, who’s really the decision maker, they could potentially make better decisions.

Several influential figures in tech, like Elon Musk and Stephen Hawking, have expressed concern about AI. Do we have a real reason to fear AI, or is it being overblown?

CAMPBELL: I definitely think it’s overblown. I think it’s worthwhile to think about these research questions around AI and ethics, and AI and safety. But I think it’s going to be decades before this stuff is really going to be important. I think the big danger right now, and one of IBM’s senior VPs has stated this publicly, is not following up on these technologies. Because the benefits are so huge that if we don’t use AI technologies we’re going to be losing out on all of these beneficial effects in health care, in self-driving cars, in education.

People have also expressed concern about how AI will impact the job market in the future. IBM’s goal with Watson is to make jobs easier, not to eliminate them, but I still find it hard to believe that it won’t be an unintended side effect. If a robot like Connie is helping answer questions in the hotel lobby, maybe the hotel could do with one fewer employee.

CAMPBELL: So there’s no doubt that there will be an effect on the job market, more in the mix of jobs and the kinds of jobs that are being done. If we each have our cognitive assistant that can help us be more efficient, then we can get more done and we don’t need as many people to do that particular job.

But each time we create these cognitive assistants, we create new opportunities. That’s the way it’s been in the past: new technologies take away some work, but create new opportunities. But I think these AI systems are going to have gaps. They’re going to have gaps in their knowledge for many years to come. And the practical way to fill those gaps is to partner them with humans who have a general intelligence and common sense reasoning so they can work together as a team to complement each other.
