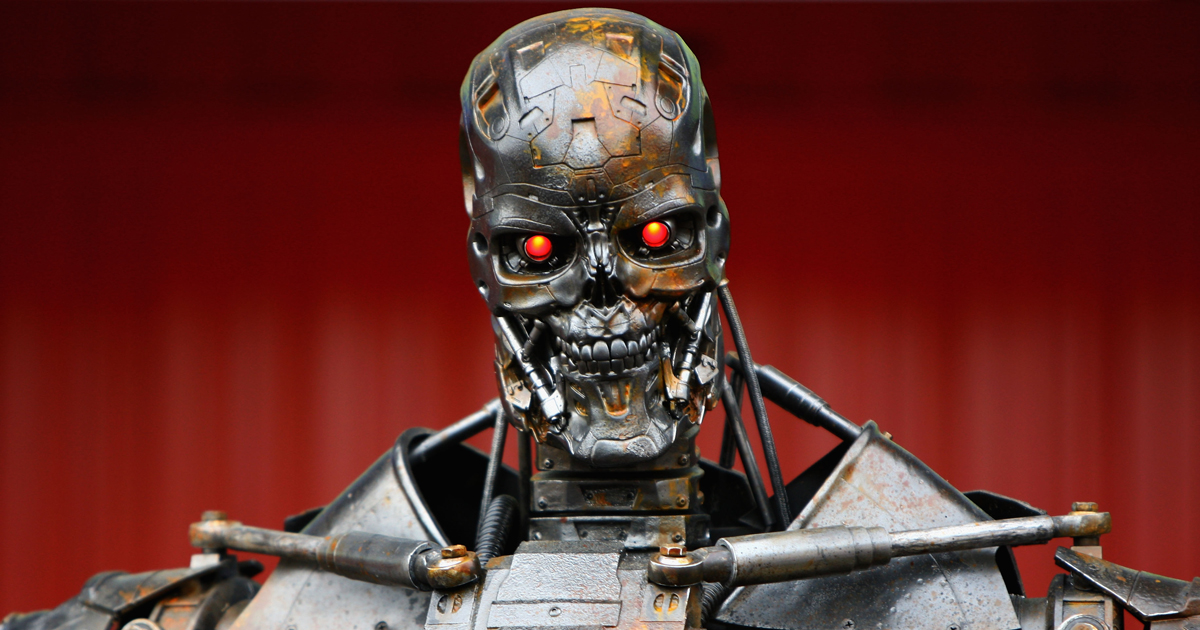

Artificial intelligence (AI) will end us, save us or—less jazzy-sounding but the more probable intersection of both—eventually obsolete us. From humbling chess grandmaster losses at the hands of mathematically brilliant supercomputers to semantic networks with the linguistic grasp of a four-year-old, one thing seems certain: AI is coming.

Here’s what today’s brightest programmers, philosophers and entrepreneurs have said about our terrifying, astonishing future.

Sam Altman

Altman, who is working to develop an open-source form of AI that would be available to all rather than the few, believes future iterations could be designed to police themselves, working only toward benevolent ends. The 30-year-old computer programmer and president of startup incubator Y Combinator says the AI being built through his OpenAI venture will surpass human intelligence within a matter of decades, but that making it available to anyone (rather than locking it behind private, proprietary doors) should offset the risks.

Nick Bostrom

The 42-year-old director of Oxford’s Future of Humanity Institute takes a dimmer view of AI. In his 2014 book Superintelligence: Paths, Dangers, Strategies, Bostrom warns that AI could quickly turn dark and dispose of humans. The subsequent world would harbor “economic miracles and technological awesomeness, with nobody there to benefit,” like “a Disneyland without children.”

Bill Gates

The 60-year-old software magnate and Microsoft cofounder turned philanthropist sees near-future, low-intelligence AI as a positive tool for replacing labor, writing that an AI revolution “should be positive if we manage it well.” But he also worries that the “superintelligent” systems arriving a few decades down the road will become “strong enough to be a concern.” He adds that he “[doesn’t] understand why some people are not concerned.”

Stephen Hawking

The famed 74-year-old theoretical physicist, author and pioneer of black hole physics believes AI could be both miraculous and catastrophic. Along with several other noteworthy scientists, he has called it “the biggest event in human history,” one that could help wipe out war, disease and poverty. But because AI could grow so explosively that it winds up “outsmarting financial markets, out-inventing human researchers, out-manipulating human leaders, and developing weapons we cannot even understand,” Hawking cautions that it could also be “the last [event in our history], unless we learn how to avoid the risks.”

Michio Kaku

The 69-year-old bestselling author, theoretical physicist and futurist takes a longer, more pragmatic view, calling AI an end-of-the-century problem. He adds that even then, if humanity hasn’t come up with better methods to constrain rogue AI, it will be a matter of putting “a chip in [artificially intelligent robot] brains to shut them off.”

Ray Kurzweil

The 68-year-old inventor, futurist and director of engineering at Google believes human-level AI will be achieved by 2029. Given the technology’s potential to help find cures for diseases and clean up the environment, he says we have “a moral imperative to realize this promise while controlling the peril.”

Elon Musk

The outspoken 44-year-old entrepreneur, SpaceX founder and CEO of Tesla Motors has famously called AI “our biggest existential threat,” fretting that it may be tantamount to “summoning the demon.” And he’s deadly serious, adding as a counterintuitive thought (for an entrepreneur, anyway) that he’s “increasingly inclined to think that there should be some regulatory oversight, maybe at the national and international level, just to make sure that we don’t do something very foolish.”

Write to Matt Peckham at matt.peckham@time.com