In 2011, a team of researchers set up an experiment to explore how metaphors used to describe crime could influence people’s ideas about how to reduce it. Participants were given one of two reports about rising crime rates in a city, with one describing crime as a “beast preying on” the city and the other as a “virus infecting” it. All other details being equal, participants who read the “beast” metaphor were more likely to suggest enforcement-based solutions (such as expanding the police force), while those who read the “virus” metaphor were more likely to suggest reform-based ones (such as curbing poverty).

In business, leaders use metaphors frequently to illustrate new ideas, build belief, and guide people through change. And as the crime study makes clear, choosing the right metaphor matters: People’s attitudes and behaviors are informed by the metaphors we use to communicate. Metaphors don’t just serve to make sense of our reality—they help shape it.

One only has to look to the rise of artificial intelligence, in both business and everyday life, to know how true this is. Just as television was once described as an “electronic hearth” or the internet as an “information superhighway,” leaders are using metaphors to help people make sense of AI and engage with it. “Artificial intelligence” is in itself a metaphor we use to describe algorithmic techniques and their applications, but we keep layering more metaphors on top of it.

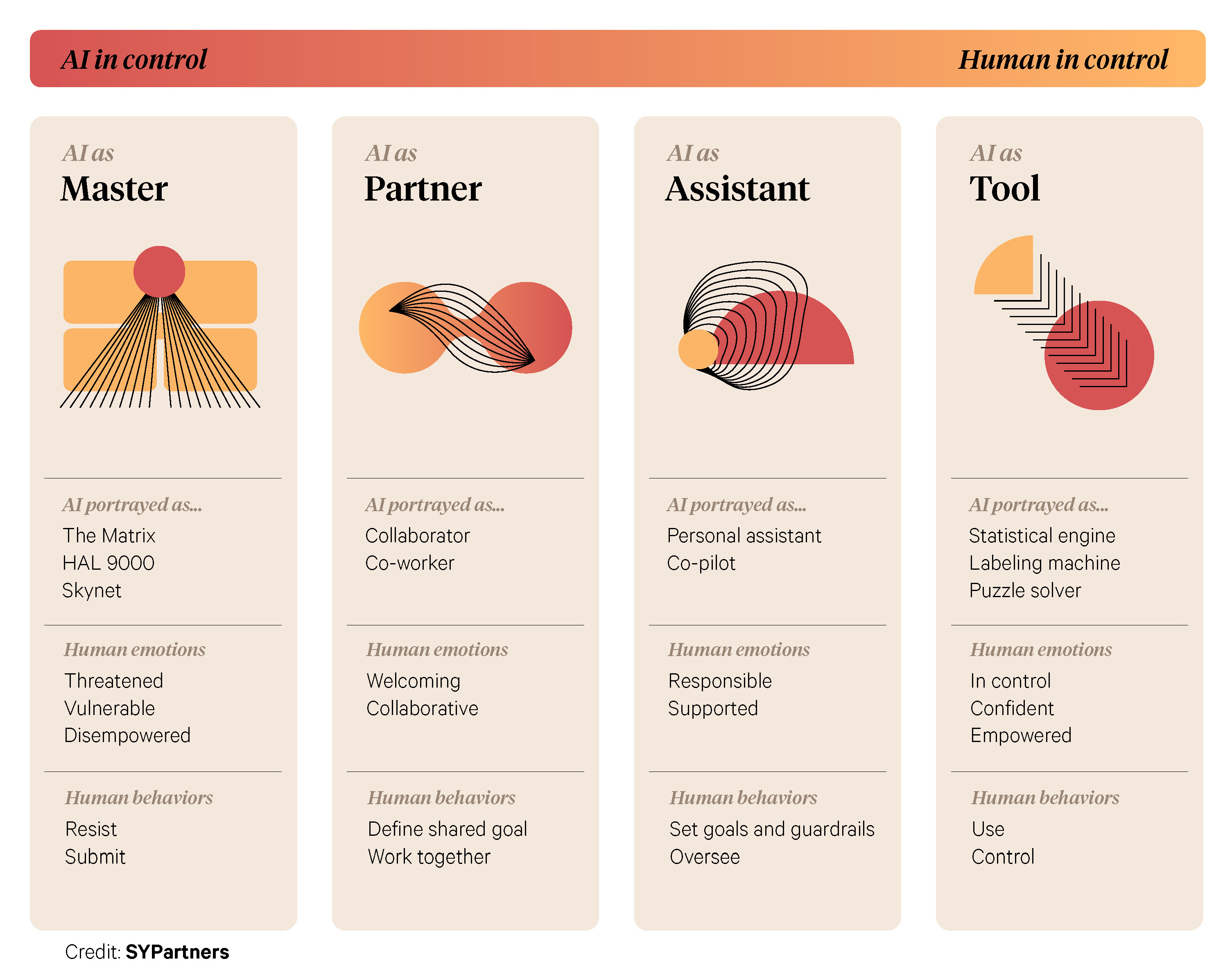

Not all metaphors are equally helpful, though. Broadly speaking, the language we currently use to describe AI tends to fall into one of four categories: master, partner, assistant, and tool. To choose the right one, leaders should look at the type of relationship each implies between humans and AI—including where the metaphor sits on the spectrum from “AI in control” to “humans in control”—and the attitudes and actions each one tends to spark.

AI as tool

The tool metaphor is the only one of the four that isn’t anthropomorphic. Depicting AI as a device used to carry out a particular function reinforces the idea that AI models are created by humans and can therefore be fully shaped by them.

While there is value in describing AI in a non-anthropomorphic way, there might be even greater risk in doing so. Portraying AI as a tool completely under human control fails to recognize how different it is from the technologies that preceded it. AI models can learn on their own at unprecedented speed and develop logical pathways that even their designers don’t fully understand. The tool metaphor risks lulling people and organizations into overconfidence, making them blind to AI’s singular strengths and risks, and unintentionally opening the door to harmful usage.

AI as master

Films and books are filled with another metaphor: stories of AI as a master that gains authority over the human race. This one calls for a binary response: either resistance or submission. Resistance leads, in the extreme, to calls for an outright ban of the technology. Submission is fundamentally disempowering and leads to a paralyzing, and possibly self-fulfilling, belief that nothing can be done to mitigate AI’s unintended consequences. Either way, portraying AI as a master seems counterproductive.

AI as partner

In recent months, plenty of articles have welcomed AI as a new “co-worker” and praised the benefits of “collaborating” or “partnering” with AI. The metaphor of AI as partner suggests a relationship of equals between the technology and the humans who use it, but that isn’t exactly accurate: Humans set the models’ initial goals and design them accordingly, which creates a hierarchy in the relationship.

AI as assistant

This metaphor is one of the most widely used—from AI as “conversational assistant” to AI as “co-pilot.”

It is also one of the most helpful. Many of the attitudes and behaviors required to build a healthy, productive relationship with an assistant are relevant in guiding interactions with AI:

- Understanding strengths and weaknesses: When they start a new job, assistants bring with them the sum of their past experiences. They have learned and grown in previous roles. They excel at certain tasks, and lag in others. Similarly, AI models have been trained. They, too, come in with strengths and weaknesses. And they, too, reflect unique values and biases encoded by the teams that built them and the data they ingested. For example, a model trained on older data won’t be helpful when queried about recent events. The assistant metaphor reminds leaders using AI models to first ask: How does the model work? What is it great at? What might be its shortcomings?

- Setting goals and guardrails: Assistants need to understand their goals, and know which tasks are in their scope and which aren’t. Similarly, using AI responsibly requires setting clear goals for the models, and intentional guardrails for their usage. Sometimes that line can be a fine one. While it is acceptable for predictive algorithms to infer preferences (such as assuming that a consumer browsing pink products likes pink), using the same algorithms to infer characteristics (such as assuming that a consumer browsing pink products is a woman) might lead to privacy violations.

- Overseeing: Once goals and guardrails are clear, assistants thrive with a level of autonomy, not just in deciding how to accomplish tasks, but also in defining the tasks that need to be performed. Similarly, AI models should be trusted to carry out the tasks that they were designed to perform. But a complete lack of supervision is unnecessarily risky. Generative AI is prone to erroneous outputs that look like truth. Experienced users know when and how to fact-check the models’ outputs. Just as a productive relationship with an assistant involves regularly checking in, responsible use of AI requires thoughtful monitoring, ongoing assessment, and purposeful course-correction.

Of course, the assistant metaphor isn’t perfect. There is no perfect way to portray AI. Each metaphor elevates one aspect of an idea and minimizes others. And as technology keeps evolving, so might our interactions with AI. Today’s assistant might be tomorrow’s manager.

Still, reflecting on whether an AI metaphor implies “AI in control” or “humans in control” is in itself valuable. It reminds us to calibrate our trust in AI. Under-trusting AI prevents any gains in performance. Over-trusting it creates a risk of overreliance, error, and misalignment. This is how metaphors shape reality: the language we choose sets the level of trust people bring to the technology. Leaders must carefully choose the metaphors they use, based on the behaviors they want to spark in their organizations and the future they hope to build.

___

Allison F. Avery is the Chief People & Flourishing Officer at SYPartners, integrating human flourishing research and a Jungian perspective into People, leadership and organizational development strategies. She was previously the global VP of Inclusion & Community at Dow Jones, and is a certified Jungian psychoanalyst.

Nicolas Maitret is a Managing Partner at SYPartners, a transformation and innovation consultancy based in New York City. He has helped launch the Data & Trust Alliance, and is partnering with leading businesses and nonprofits to develop responsible AI practices that create value and earn trust.