Imagine seeing the world through the eyes of a six-month-old child. You don’t have the words to describe anything. How could you possibly begin to understand language, when each sound that comes out of the mouths of those around you has an almost infinite number of potential meanings?

This question has led many scientists to hypothesize that humans must have some intrinsic language facility to help us get started in acquiring language. But a paper published in Science this week found that a relatively simple AI system fed with data filmed from a baby’s-eye view began to learn words.

The paper builds on a dataset of footage filmed from a helmet-mounted camera worn by an Australian baby over the course of eighteen months, between the ages of six and 25 months. Research assistants painstakingly went through and annotated 37,500 utterances, such as “you see this block the triangle,” spoken by a parent while the baby is playing with a toy block set, from 61 hours of video. A clip shared with TIME shows the baby groping for the toy set before turning its attention to an unimpressed cat.

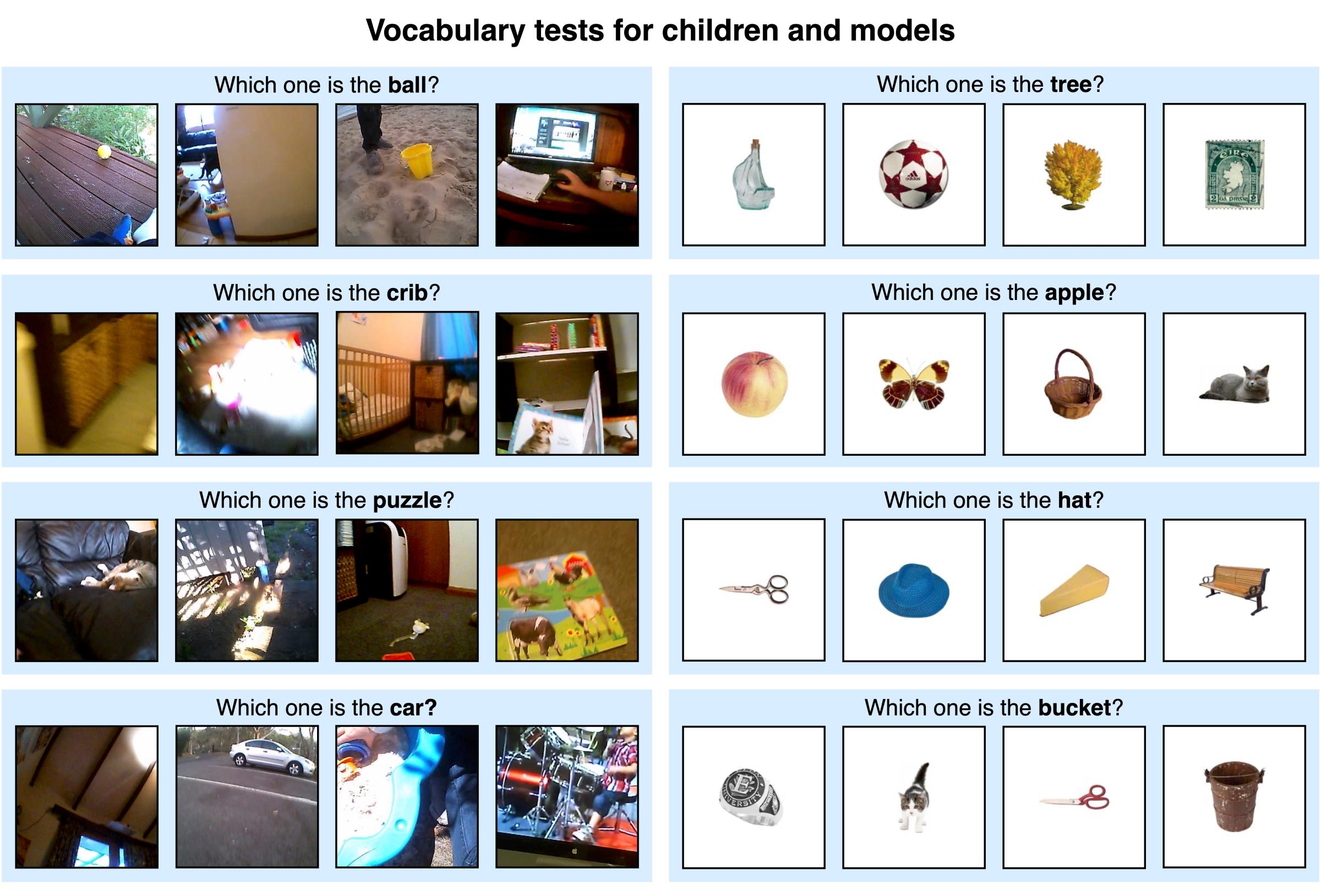

Researchers from New York University’s Center for Data Science and Department of Psychology fed this dataset into a multimodal AI system—one that could ingest both text and images. They found that the AI model could identify many different objects, both in tests using data from the head-mounted camera and in tests using a dataset of idealized images of various objects, although its accuracy was somewhat limited.

The AI system was better at naming objects it had seen more frequently, including apples (which are ubiquitous in children’s books) and cribs. It was also better at picking out objects that weren’t obscured in the head camera images. It was particularly poor at recognizing knives, says Wai Keen Vong, one of the paper’s authors.

Some psychologists and linguists believe that children would not be able to form associations between words and objects without having some innate language ability. But the fact that the AI model, which is relatively simple, could even begin to learn word associations on such a small dataset challenges this view, says Vong.

However, it’s important to note that the footage collected by the camera captures the baby interacting with the world, and its parents reacting to it. This means that the AI model is “harvesting what that child knows,” giving it an advantage in developing word associations, says Andrei Barbu, a research scientist at Massachusetts Institute of Technology’s Computer Science and Artificial Intelligence Laboratory. “If you took this model and you put it on a robot, and you had it run for 61 hours, you would not get the kind of data that they got here, that will then be useful for updating a model like this.”

Since writing up their results, the NYU researchers have transcribed four times more data from the head camera footage, which they intend to feed into their model. They hope to examine how much more the AI model learns when it's given more data, Vong says. They also hope to test whether the model can start to learn more challenging words and linguistic behaviors that tend to develop later in life.

These experiments could shed further light on how babies learn to speak, as well as help researchers understand the differences between human and artificial intelligence. “There's much to be gleaned from studying how humans acquire language,” says Vong, “and how we do it so efficiently compared to machines right now.”

Write to Will Henshall at will.henshall@time.com