Beijing is poised to implement sweeping new regulations for artificial intelligence services this week, trying to balance state control of the technology with enough support that its companies can become viable global competitors.

The government issued 24 guidelines that require platform providers to register their services and conduct a security review before they’re brought to market. Seven agencies will take responsibility for oversight, including the Cyberspace Administration of China and the National Development and Reform Commission.

The final regulations are less onerous than an original draft from April, but they show China, like Europe, moving ahead with government oversight of what may be the most promising — and controversial — technology of the last 30 years. The U.S., by contrast, has no legislation under serious consideration even after industry leaders warned that AI poses a “risk of extinction” and OpenAI’s Sam Altman urged Congress in public hearings to get involved.

“China got started very quickly,” said Matt Sheehan, a fellow at the Carnegie Endowment for International Peace who is writing a series of research papers on the subject. “It started building the regulatory tools and the regulatory muscles, so they’re going to be more ready to regulate more complex applications of the technology.”

China’s regulations go beyond anything contemplated in Western democracies. But they also include practical steps that have support in places like the U.S.

Beijing, for example, will mandate conspicuous labels on synthetically created content, including photos and videos. That’s aimed at preventing deceptions like an online video of Nancy Pelosi that was doctored to make her appear drunk. China will also require any company introducing an AI model to use “legitimate data” to train its models and to disclose that data to regulators as needed. Such a mandate may placate media companies that fear their creations will be co-opted by AI engines. Additionally, Chinese companies must provide a clear mechanism for handling public complaints about services or content.

While the U.S.’ historically hands-off approach to regulation gave Silicon Valley giants the space to become global juggernauts, that strategy holds serious dangers with generative AI, said Andy Chun, an artificial intelligence expert and adjunct professor at the City University of Hong Kong.

“AI has the potential to profoundly change how people work, live, and play in ways we are just beginning to realize,” he said. “It also poses clear risks and threats to humanity if AI development proceeds without adequate oversight.”

In the U.S., federal lawmakers have proposed a wide range of AI regulations but efforts remain in the early stages. The U.S. Senate has held several AI briefings this summer to help members come up to speed on the technology and its risks before pursuing regulations.

In June, the European Parliament passed a draft of the AI Act, which would impose new guardrails and transparency requirements for artificial intelligence systems. The parliament, EU member states and European Commission must negotiate final terms before the legislation becomes law.

Beijing has spent years laying the groundwork for the rules that take effect Tuesday. The State Council, the country’s cabinet, put out an AI roadmap in 2017 that declared development of the technology a priority and laid out a timetable for putting government regulations in place.

Agencies like the CAC then consulted with legal scholars such as Zhang Linghan from the China University of Political Science and Law about AI governance, according to Sheehan. As China’s draft guidelines on generative AI evolved into the latest version, there were months of consultation between regulators, industry players and academics to balance legislation and innovation. Beijing’s initiative is driven in part by the strategic importance of AI and by the desire to gain a regulatory edge over other governments, said You Chuanman, director of the Institute for International Affairs Center for Regulation and Global Governance at the Chinese University of Hong Kong in Shenzhen.

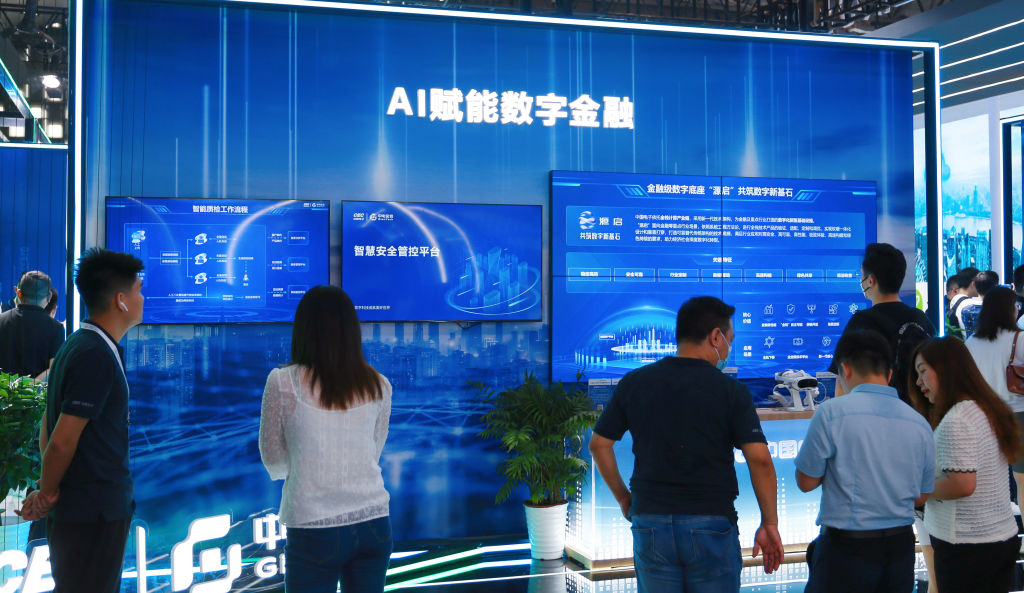

Now, China’s biggest AI players, from Baidu Inc. to Alibaba Group Holding and SenseTime Group Inc., are getting to work. Beijing has targeted AI as one of a dozen tech priorities and, after a two-year regulatory crackdown, the government is seeking private sector help to prop up the flagging economy and compete with the U.S. After the introduction of ChatGPT set off a global AI frenzy, leading tech executives and aspiring entrepreneurs are pouring billions of dollars into the field.

“In the context of fierce global competition, lack of development is the most unsafe thing,” Zhang, the scholar from China University of Political Science and Law, wrote about the guidelines.

In a flurry of activity this year, Alibaba, Baidu and SenseTime all showed off AI models. Xu Li, chief executive officer of SenseTime, pulled off the flashiest presentation, complete with a chatbot that writes computer code from prompts either in English or Chinese.

Still, Chinese companies trail global leaders like OpenAI and Alphabet’s Google. They will likely struggle to challenge such rivals, especially if American companies are regulated by no one but themselves.

“China is trying to walk a tightrope between several different objectives that are not necessarily compatible,” said Helen Toner, a director at Georgetown’s Center for Security and Emerging Technology. “One objective is to support their AI ecosystem, and another is to maintain social control and maintain the ability to censor and control the information environment in China.”

In the U.S., OpenAI has shown little control over information even if it’s dangerous or inaccurate. Its ChatGPT made up fake legal precedents and provided bomb-building instructions to the public. A Georgia radio host claims the bot generated a false complaint that accused him of embezzling money.

In China, companies have to be much more careful. This February, the Hangzhou-based Yuanyu Intelligence pulled the plug on its ChatYuan service only days after launch. The bot had called Russia’s attack on Ukraine a “war of aggression” — in contravention of Beijing’s stance — and raised doubts about China’s economic prospects, according to screenshots that circulated online.

Now the startup has abandoned a ChatGPT model entirely to focus on an AI productivity service called KnowX. “Machines cannot achieve 100% filtering,” said Xu Liang, head of the company. “But what you can do is to add human values of patriotism, trustworthiness, and prudence to the model.”

Beijing, with its authoritarian powers, plays by different rules than Washington. When Chinese agencies reprimand and fine tech companies, the corporations can’t fight back and often publicly thank the government for its oversight. In the U.S., Big Tech hires armies of lawyers and lobbyists to contest almost any regulatory action. Alongside the robust public debate among stakeholders, this will make it difficult to install effective AI regulations, said Aynne Kokas, associate professor of media studies at the University of Virginia.

In China, AI is beginning to make its way into the sprawling censorship regime that keeps the country’s internet scrubbed of taboo and controversial topics. That doesn’t mean it is easy, technically speaking. “One of the most attractive innovations of ChatGPT and similar AI innovations is its unpredictability or its own innovation beyond our human intervention,” You, from the Chinese University of Hong Kong, said. “In many cases, it’s beyond control of the platform service providers.”

Some Chinese tech companies are using two-way keyword filtering, deploying one large language model to ensure that another LLM is scrubbed of any controversial content. One tech startup founder, who declined to be named due to political sensitivities, said the government will even do spot-checks on how AI services are labeling data.

“What is potentially the most fascinating and concerning timeline is the one where censorship happens through new large language models developed specifically as censors,” said Nathan Freitas, a fellow at Harvard University’s Berkman Klein Center for Internet and Society.

The European Union may be the most progressive in protecting individuals from such overreach. The draft law passed in June ensures privacy controls and curbs the use of facial recognition software. The EU proposal would also require companies to perform some analysis of the risks their services entail, for, say, health systems or national security.

But the EU’s approach has drawn objections. OpenAI’s Altman suggested his company may “cease operating” within countries that implement overly onerous regulations.

One thing Washington can learn from Chinese regulators is to be “targeted and iterative,” Sheehan said. “Build these tools that they can keep improving as they keep regulating.”

—With assistance from Emily Cadman, Alice Truong and Seth Fiegerman.
