OpenAI CEO Sam Altman said Wednesday his company could “cease operating” in the European Union if it is unable to comply with the provisions of new artificial intelligence legislation that the bloc is currently preparing.

“We’re gonna try to comply,” Altman said on the sidelines of a panel discussion at University College London, part of an ongoing tour of European countries. He said he had met with E.U. regulators to discuss the AI act as part of his tour, and added that OpenAI had “a lot” of criticisms of the way the act is currently worded.

Altman said that OpenAI’s skepticism centered on the E.U. law’s designation of “high risk” systems as it is currently drafted. The law is still undergoing revisions, but under its current wording it may require large AI models like OpenAI’s ChatGPT and GPT-4 to be designated as “high risk,” forcing the companies behind them to comply with additional safety requirements. OpenAI has previously argued that its general purpose systems are not inherently high-risk.

“Either we’ll be able to solve those requirements or not,” Altman said of the E.U. AI Act’s provisions for high risk systems. “If we can comply, we will, and if we can’t, we’ll cease operating… We will try. But there are technical limits to what’s possible.”

The law, Altman said, was “not inherently flawed,” but he went on to say that “the subtle details here really matter.” During an on-stage interview earlier in the day, Altman said his preference for regulation was “something between the traditional European approach and the traditional U.S. approach.”

Altman also said on stage that he was worried about the risks stemming from artificial intelligence, singling out the possibility of AI-generated disinformation designed to appeal to an individual’s own personal biases. For example, AI-generated disinformation could have an impact on the upcoming 2024 U.S. election, he said. But he suggested that social media platforms were more important drivers of disinformation than AI language models. “You can generate all the disinformation you want with GPT-4, but if it’s not being spread, it’s not going to do much,” he said.

On the whole, Altman presented a rosy view to the London crowd of a potential future where the technology’s benefits far outweighed its risks. “I am an optimist,” he said.

In a foray into socioeconomic policy, Altman raised the prospect that wealth may need to be redistributed in an AI-driven future. “We’ll have to think about distribution of wealth differently than we do today, and that’s fine,” Altman said on stage. “We think about that somewhat differently after every technological revolution.”

Altman told TIME after the talk that OpenAI was preparing to begin making public interventions on the topic of wealth redistribution in 2024, much as it is currently doing on AI regulatory policy. “We’re going to try,” he said. “That’s kind of a next-year project for us.” OpenAI is currently carrying out a five-year study into universal basic income, he said, which will conclude next year. “That’ll be a good time to do it,” Altman said.

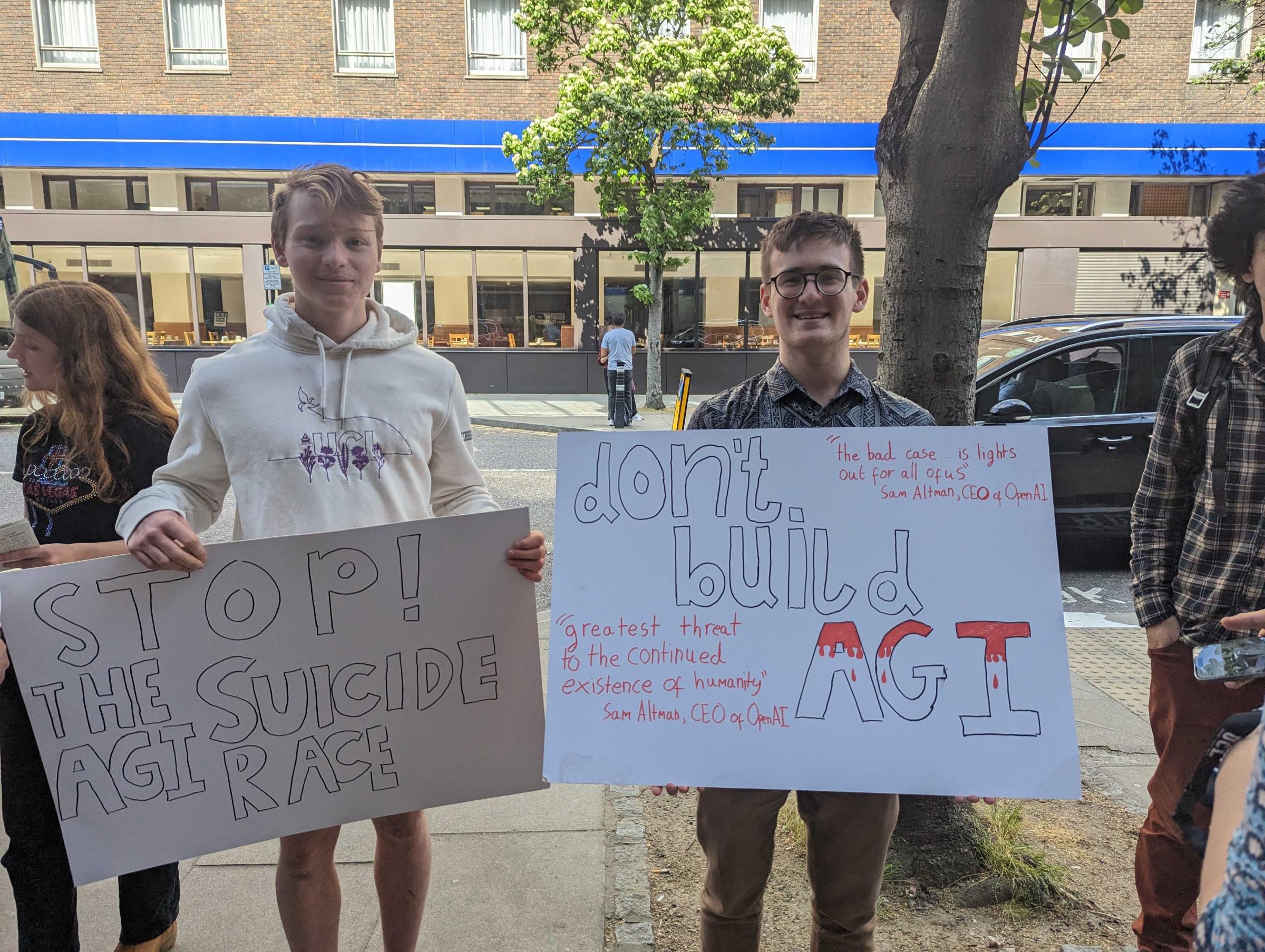

Altman’s appearance at the London university drew some negative attention. Outside the packed lecture theater, a handful of protesters milled around talking to people who’d failed to get in. One protester carried a sign saying: “Stop the suicide AGI race.” (AGI stands for “Artificial General Intelligence,” a hypothetical superintelligent AI that OpenAI has said it aims to build one day.) The protesters handed out fliers urging people to “Stand up against Sam Altman’s dangerous vision for the future.”

“It’s time that the public step up and say: it is our future and we should have a choice over it,” said Gideon Futerman, 20, one of the protesters, who said he is a student studying solar geoengineering and existential risk at the University of Oxford. “We shouldn’t be allowing multimillionaires from Silicon Valley with a messiah complex to decide what we want.”

“What we’re doing is making a deal with the devil,” Futerman said. “A very large number of people who think that these systems are on track for AGI also think that a bad future is more likely than a good future.”

Futerman told TIME that Altman came out and had a brief conversation with him and the other protesters after his panel appearance.

“He said he understood our concerns, but thinks that safety and capabilities can’t be separated from each other,” Futerman said. “He said that OpenAI isn’t a player in the [AI] race, despite the fact that this is so clearly what they are doing. He basically said that he doesn’t think this development can be stopped, and said he’s got confidence in their safety.”

Correction: May 30

The original version of this story misspelled the last name of a protester outside the lecture theater where Altman spoke. It is Futerman, not Futterman.

Write to Billy Perrigo/London at billy.perrigo@time.com