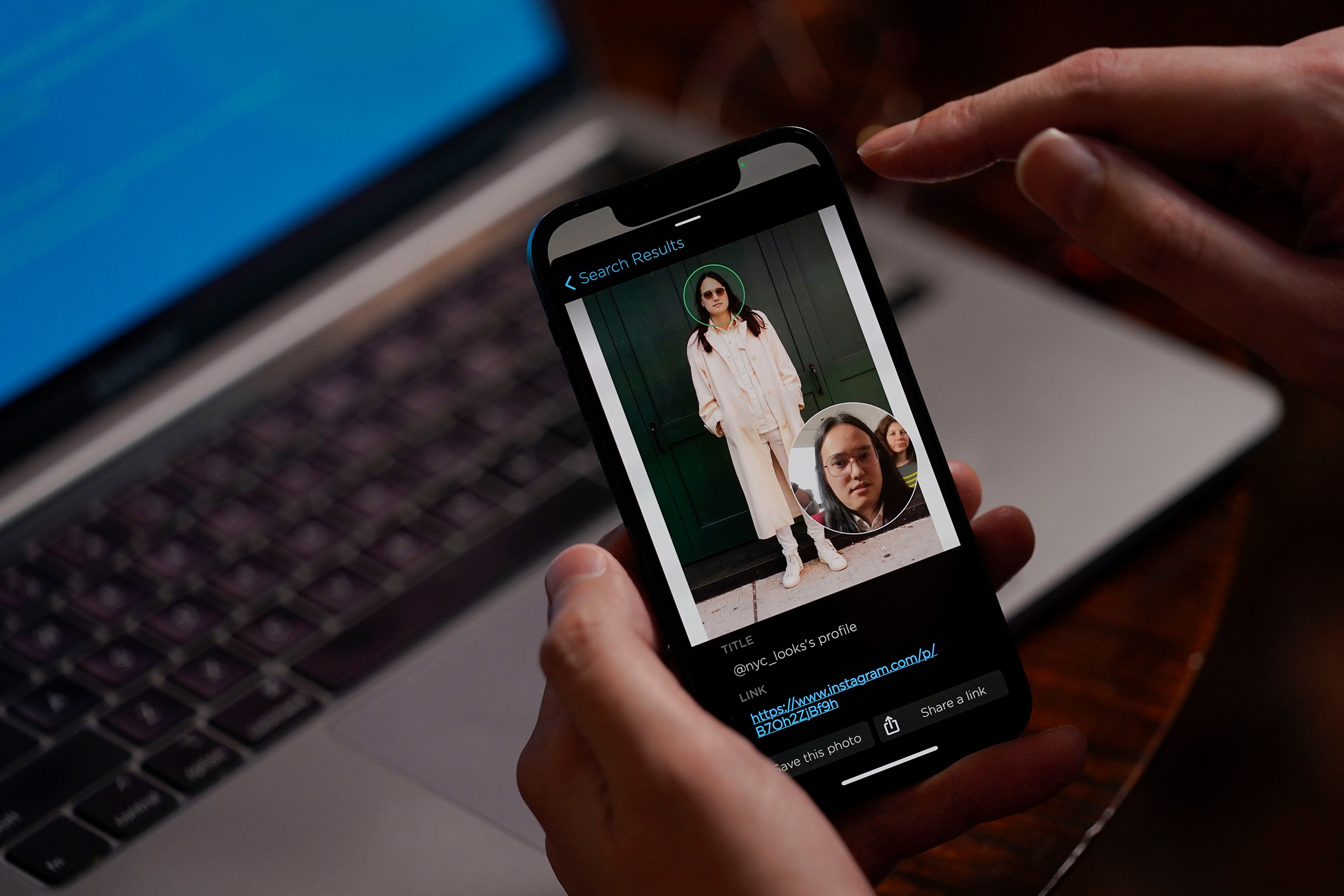

More and more privacy watchdogs around the world are standing up to Clearview AI, a U.S. company that has collected billions of photos from the internet without people’s permission.

The company, which uses those photos for its facial recognition software, was fined £7.5 million ($9.4 million) by a U.K. regulator on May 26. The U.K. Information Commissioner’s Office (ICO) said Clearview had broken data protection law, which the company denies.

But the case reveals how nations have struggled to regulate artificial intelligence across borders.

Facial recognition tools require huge quantities of data. In the race to build profitable new AI tools that can be sold to state agencies or attract new investors, companies have turned to downloading—or “scraping”—trillions of data points from the open web.

In the case of Clearview, these are pictures of people’s faces from all over the internet, including social media, news sites and anywhere else a face might appear. The company has reportedly collected 20 billion photographs, roughly two and a half for every person on the planet.

Those photos underpin the company’s facial recognition algorithm. They are used as training data, a way of teaching Clearview’s systems what human faces look like and how to detect similarities or distinguish between them. The company says its tool can identify a person in a photo with a high degree of accuracy. It is one of the most accurate facial recognition tools on the market, according to U.S. government testing, and has been used by U.S. Immigration and Customs Enforcement and thousands of police departments, as well as businesses like Walmart.

The vast majority of people have no idea their photographs are likely included in the dataset that Clearview’s tool relies on. “They don’t ask for permission. They don’t ask for consent,” says Abeba Birhane, a senior fellow for trustworthy AI at Mozilla. “And when it comes to the people whose images are in their data sets, they are not aware that their images are being used to train machine learning models. This is outrageous.”

The company says its tools are designed to keep people safe. “Clearview AI’s investigative platform allows law enforcement to rapidly generate leads to help identify suspects, witnesses and victims to close cases faster and keep communities safe,” the company says on its website.

But Clearview has faced other intense criticism, too. Advocates for responsible uses of AI say that facial recognition technology often disproportionately misidentifies people of color, making it more likely that law enforcement agencies using the database could arrest the wrong person. And privacy advocates say that even if those biases are eliminated, the data could be stolen by hackers or enable new forms of intrusive surveillance by law enforcement or governments.

Will the U.K.’s fine have any impact?

In addition to the $9.4 million fine, the U.K. regulator ordered Clearview to delete all data it collected from U.K. residents. That would ensure its system could no longer identify a U.K. resident from a photo.

But it is not clear whether Clearview will pay the fine or comply with that order.

“As long as there are no international agreements, there is no way of enforcing things like what the ICO is trying to do,” Birhane says. “This is a clear case where you need a transnational agreement.”

It isn’t the first time Clearview has been reprimanded by regulators. In February, Italy’s data protection agency fined the company 20 million euros ($21 million) and ordered it to delete data on Italian residents. Other E.U. data protection agencies, including France’s, have issued similar orders. The French and Italian agencies did not respond to questions about whether the company has complied.

In an interview with TIME, the U.K. privacy regulator John Edwards said Clearview had informed his office that it cannot comply with his order to delete U.K. residents’ data. In an emailed statement, Clearview’s CEO Hoan Ton-That indicated that this was because the company has no way of knowing where people in the photos live. “It is impossible to determine the residency of a citizen from just a public photo from the open internet,” he said. “For example, a group photo posted publicly on social media or in a newspaper might not even include the names of the people in the photo, let alone any information that can determine with any level of certainty if that person is a resident of a particular country.” In response to TIME’s questions about whether the same applied to the rulings by the French and Italian agencies, Clearview’s spokesperson pointed back to Ton-That’s statement.

Ton-That added: “My company and I have acted in the best interests of the U.K. and their people by assisting law enforcement in solving heinous crimes against children, seniors, and other victims of unscrupulous acts … We collect only public data from the open internet and comply with all standards of privacy and law. I am disheartened by the misinterpretation of Clearview AI’s technology to society.”

Clearview did not respond to questions about whether it intends to pay, or contest, the $9.4 million fine from the U.K. privacy watchdog. But its lawyers have said they do not believe the U.K.’s rules apply to them. “The decision to impose any fine is incorrect as a matter of law,” Clearview’s lawyer, Lee Wolosky, said in a statement provided to TIME by the company. “Clearview AI is not subject to the ICO’s jurisdiction, and Clearview AI does no business in the U.K. at this time.”

Regulation of AI: unfit for purpose?

Regulation and legal action in the U.S. have had more success. Earlier this month, Clearview agreed to allow Illinois residents to opt out of its search results. The agreement was the result of a settlement in a lawsuit filed by the ACLU in Illinois, where privacy law says that the state’s residents must not have their biometric information (including “faceprints”) used without permission.

Still, the U.S. has no federal privacy law, leaving enforcement up to individual states. Although the Illinois settlement also requires Clearview to stop selling its services to most private businesses across the U.S., the lack of a federal privacy law means companies like Clearview face little meaningful regulation at the national and international levels.

“Companies are able to exploit that ambiguity to engage in massive wholesale extractions of personal information capable of inflicting great harm on people, and giving significant power to industry and law enforcement agencies,” says Woodrow Hartzog, a professor of law and computer science at Northeastern University.

Hartzog says that facial recognition tools add new layers of surveillance to people’s lives without their consent. It is possible to imagine the technology enabling a future where a stalker could instantly find the name or address of a person on the street, or where the state can surveil people’s movements in real time.

The E.U. is weighing new legislation on AI that could see forms of facial recognition based on scraped data being banned almost entirely in the bloc starting next year. But Edwards—the U.K. privacy tsar whose role includes helping to shape incoming post-Brexit privacy legislation—doesn’t want to go that far. “There are legitimate uses of facial recognition technology,” he says. “This is not a fine against facial recognition technology… It is simply a decision which finds one company’s deployment of technology in breach of the legal requirements in a way which puts the U.K. citizens at risk.”

It would be a significant win if, as Edwards has demanded, Clearview were to delete U.K. residents’ data. Doing so would prevent them from being identified by Clearview’s tools, says Daniel Leufer, a senior policy analyst at digital rights group Access Now in Brussels. But it wouldn’t go far enough, he adds. “The whole product that Clearview has built is as if someone built a hotel out of stolen building materials. The hotel needs to stop operating. But it also needs to be demolished and the materials given back to the people who own them,” he says. “If your training data is illegitimately collected, not only should you have to delete it, you should delete models that were built on it.”

But Edwards says his office has not ordered Clearview to go that far. “The U.K. data will have contributed to that machine learning, but I don’t think that there’s any way of us calculating the materiality of the U.K. contribution,” he says. “It’s all one big soup, and frankly, we didn’t pursue that angle.”

Write to Billy Perrigo at billy.perrigo@time.com