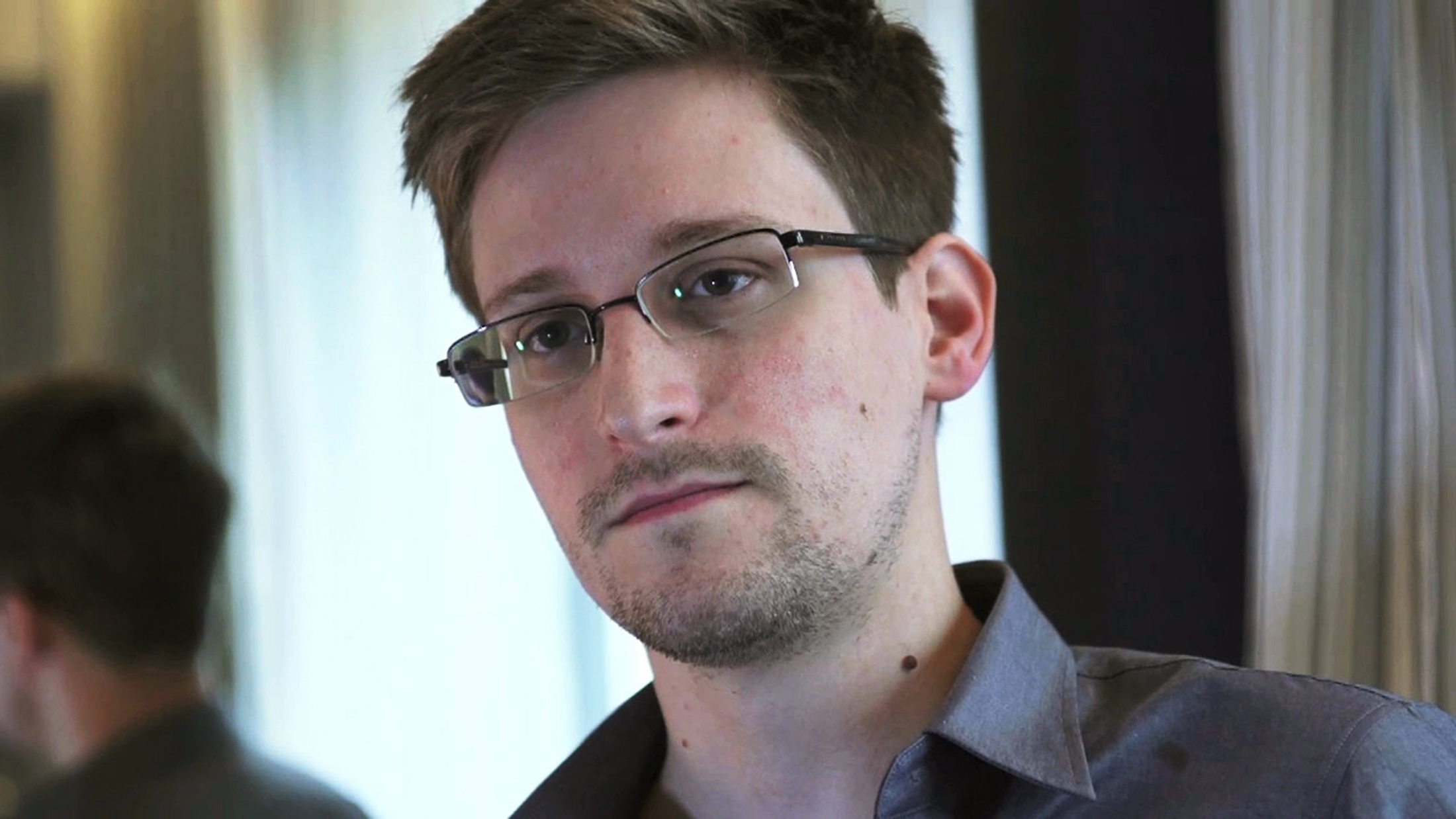

Edward Snowden used widely available automated software to steal classified data from the National Security Agency’s networks, intelligence officials have determined, raising questions about the security of other top-secret military and intelligence systems under the NSA’s purview.

The New York Times, citing anonymous sources, reported that the former NSA contractor used a Web crawler, cheap software designed to index and back up websites, to scour the NSA’s data and return a trove of confidential documents. Snowden apparently programmed his search to find particular subjects and determine how deeply to follow links on the NSA’s internal networks.
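For readers curious how such a tool behaves, here is a minimal sketch of a depth-limited, keyword-filtered crawl. It is a hypothetical illustration and not the software Snowden used. The in-memory "site", the `crawl` function, and its `keywords` and `max_depth` parameters are assumptions made for this example. The crawler starts at one page, follows links breadth-first up to a set number of hops, and collects pages that mention chosen subjects.

```python
from collections import deque

def crawl(links, start, keywords, max_depth):
    """Breadth-first crawl from `start`, following links at most `max_depth` hops.

    `links` is a hypothetical in-memory site: page name -> (page text, linked pages).
    Returns the pages whose text mentions any keyword, in the order visited.
    """
    seen = {start}
    queue = deque([(start, 0)])  # (page, hops from start)
    matches = []
    while queue:
        page, depth = queue.popleft()
        text, outlinks = links.get(page, ("", []))
        # Subject filter: keep pages that mention any keyword (case-insensitive).
        if any(k.lower() in text.lower() for k in keywords):
            matches.append(page)
        # Depth limit: only follow links while under the hop budget.
        if depth < max_depth:
            for nxt in outlinks:
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, depth + 1))
    return matches

# Hypothetical example site.
site = {
    "home": ("Welcome page", ["a", "b"]),
    "a": ("Report on topic alpha", ["c"]),
    "b": ("Unrelated notes", ["d"]),
    "c": ("More alpha details", []),
    "d": ("Alpha appendix", []),
}

print(crawl(site, "home", ["alpha"], 1))  # ['a']
print(crawl(site, "home", ["alpha"], 2))  # ['a', 'c', 'd']
```

Raising the depth limit from one hop to two lets the same search reach pages buried further down, which is why the depth setting matters as much as the keywords.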

Investigators found that Snowden’s method of obtaining the data was hardly sophisticated and should have been easily detected. Snowden accessed roughly 1.7 million files, intelligence officials said last week, partly because the NSA “compartmented” relatively little information, making it easier for a Web crawler like the one Snowden used to access a large number of files.

[NYT]

Contact us at letters@time.com