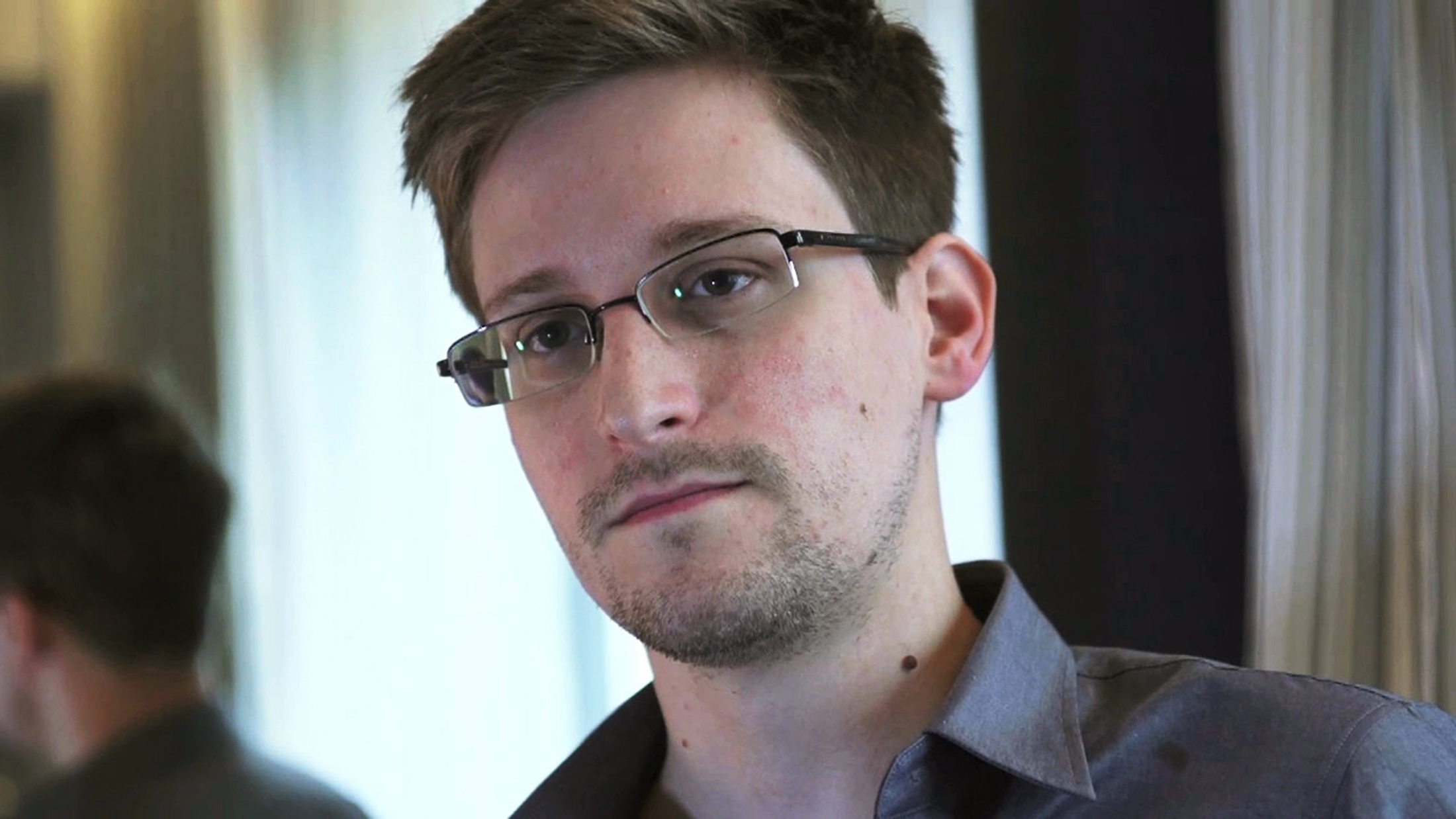

Edward Snowden used widely available automated software to steal classified data from the National Security Agency’s networks, intelligence officials have determined, raising questions about the security of other top-secret military and intelligence systems under the NSA’s purview.

The New York Times, citing anonymous sources, reported that the former NSA contractor used a Web crawler, cheap software designed to index and back up websites, to scour the NSA’s data and return a trove of confidential documents. Snowden apparently programmed the crawler to search for particular subjects and set how deeply it would follow links on the NSA’s internal networks.

Investigators found that Snowden’s method of obtaining the data was hardly sophisticated and should have been easily detected. Snowden accessed roughly 1.7 million files, intelligence officials said last week, in part because the NSA “compartmented” relatively little of its information, which made it easier for a Web crawler like the one he used to reach a large number of files.

[NYT]
