In 1872, with the publication of “The Expression of the Emotions in Man and Animals,” Charles Darwin went rogue. Only a decade after the anatomist Duchenne de Boulogne produced the first neurology text illustrated by photographs, Darwin claimed to be the first to use photographs in a scientific publication to actually document the expressive spectrum of the face.

Combining speculation about raised eyebrows and flushed skin with vile commentary about mental illness, he famously logged diagrams of facial musculature, along with drawings of sulky chimpanzees and photographs of weeping infants, to create a study that spanned species, temperament, age, and gender. But what really interested him was not so much the specificity of the individual as the universality of the tribe: If expressions could, as de Boulogne had suggested, be physically localized, could they also be culturally generalized?

As a man of science, he set out to analyze the visual difference between types, which is to say races. While Darwin’s scientific contributions remain ever significant, it’s worth remembering he was also a man of his era — privileged, white, affluent, commanding — who generalized as much as, if not more than, he analyzed, especially when it came to objectifying people’s looks. In spite of his influence on evolutionary biology and his role in the scientific study of emotion, Darwin’s prognostications read today as remarkably prejudicial. (“No determined man,” he writes in “The Expression of the Emotions in Man and Animals,” “probably ever had an habitually gaping mouth.”) This urge to label “types” — a loaded and unfortunate term — would essentially go viral in the early years of the coming century, with such assumptions reasserting themselves as dogmatic, even axiomatic, fact.

Hardly the first to postulate on the graphic evidence of the grimace, Darwin hoped to introduce a system by which facial expressions might be properly evaluated. He shared with many of his generation a predisposition toward history: simply put, the idea that certain facial traits might have a basis in evolution. Empirically, the idea itself is not unreasonable. We are, after all, genetically predisposed to share traits with those in our familial line, occasionally by virtue of our geographic vicinity. At the same time, certain specimens, when classified by visual genre, become the easy targets of discrimination. In the process, comparisons can, and do, glide effortlessly from hypothesis to hyperbole, particularly when images are in play.

Almost exactly a century after the arrival of Darwin’s volume, Paul Ekman, a psychologist at the University of California, published a study in which he determined that there were seven principal facial expressions deemed universal across all cultures: anger, contempt, fear, happiness, interest, sadness, and surprise. His Facial Action Coding System (FACS) supported many of Darwin’s earlier findings and remains, to date, the gold standard for identifying any movement the face can make. As a methodology for parsing facial expression, Ekman’s work provides a practical rubric for understanding these distinctions: It’s logical, codified, and clear. But what happens to such comparative practices when supposition trumps proposition, when the science of scrutiny is eclipsed by the lure of a bigger, messier, more global extrapolation? When does the quest for the universal backfire — and become a discriminatory practice?

The real seduction, in Darwin’s era and in our own, lies in the notion that pictures — and especially pictures of our faces — are remarkably powerful tools of persuasion and do, in so many instances, speak louder than words.

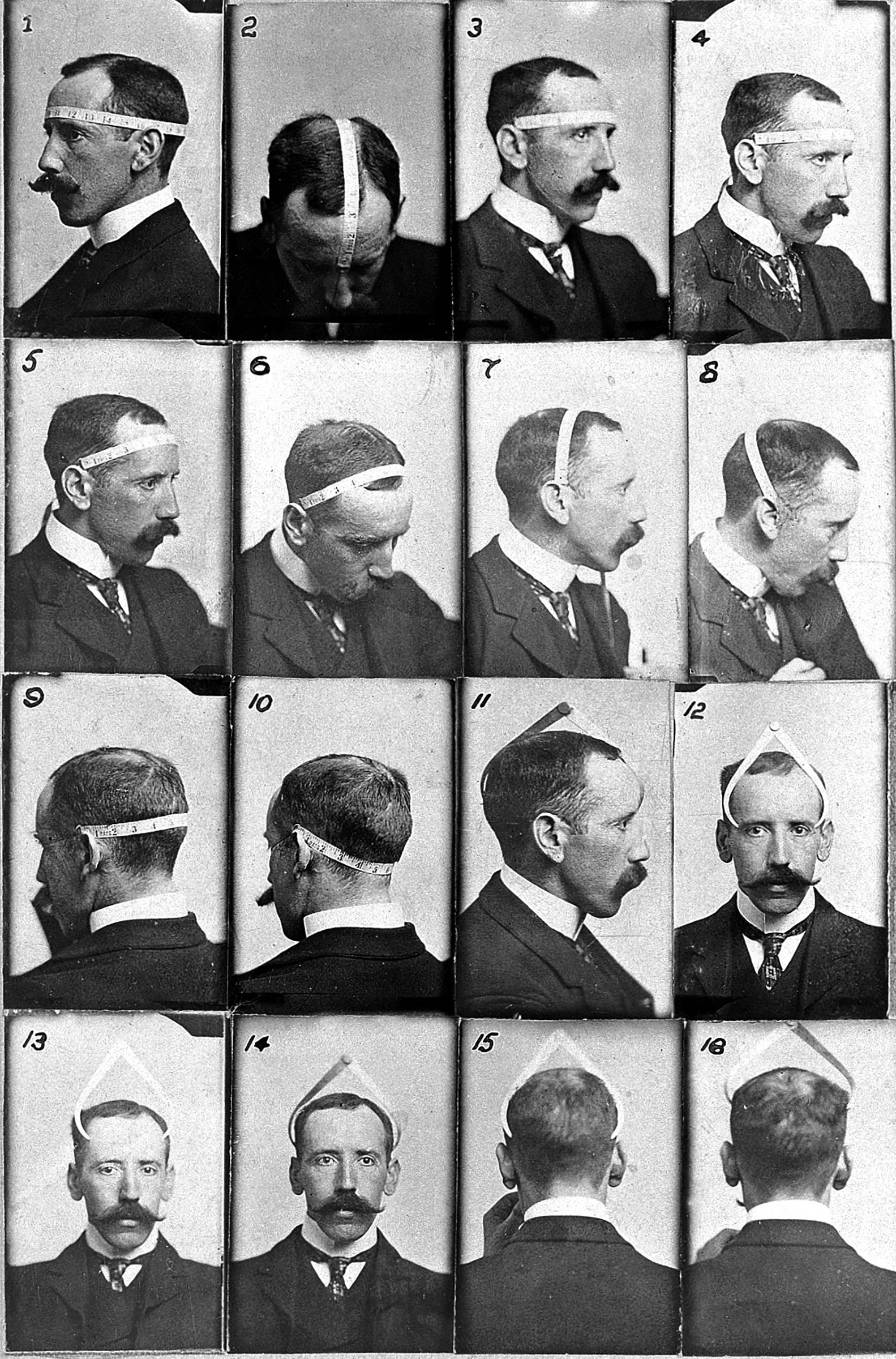

The idea that photography allowed for the demonstration and distribution of objective visual evidence was a striking development for clinicians. Unlike the interpretive transference of a drawing, or the abstract data of a diagram, the camera was clear and direct, a vehicle for proof. The process itself allowed for a kind of massive stockpiling — pictures compared to one another, minutiae contrasted, hypotheses often mistakenly corroborated — which, while arguably rooted in scientific inquiry, led to a stunning degree of generalization in the name of fact. If evolution is seen as the study of unseen development — biological, generational, temporal, and by definition intangible — the camera provided the illusion of quantifiable benchmarks, an irresistible proposition for the proponents of theoretical ideas.

Darwin’s cousin, the noted statistician Francis Galton, saw such generalizations as precisely the point. Long before computer software would make such computational practice commonplace, he introduced not a lateral but a synthetic system for facial comparison: “composite portraiture,” as he termed it, was in fact a neologism for pictorial averaging. Galton’s objective was to identify deviation and, in so doing, to reverse-engineer an ideal “type,” which he did by repeated printing, upon a single photographic plate and in close proximity to one another, thereby creating a force-amalgamated portrait of multiple faces. At once besotted with mechanical certainty and mesmerized by the scope of visual wonder before him, Galton thrilled to the notion of mathematical precision (the lockup on the photographic plate, the reckoning of the binomial curve) but appeared uninterested in actual details unless they could help reaffirm his suppositions about averages, about types, even about the photomechanical process itself.

That Galton drew upon the language of statistical fact — and benefited from the presumed sovereignty of his own exalted social position — to become an evangelist for the camera is questionable in itself, but the fact that he viewed his composite photographs as plausible evidence for an unforgiving sociocultural rationale shifts the legacy of his scholarship into far more pernicious territory.

At once driven by claims of biological determinism and supported by the authoritarian heft of British empiricism, Francis Galton pioneered an insidious form of human scrutiny that would come to be known as eugenics. The word itself comes from the Greek word eugenes (noble, well-born, and “good in stock”), though Galton’s own definition is a bit more sinister: For him, it was a science addressing “all influences that improve the inborn qualities of a race, also with those that develop them to the utmost advantage.” The idea of social betterment through better breeding (indeed, the notion of better anything through breeding) led to a horrifying era of social supremacism in which “deviation” would come to be classified across a broad spectrum of race, religion, health, wealth, and every imaginable kind of human infirmity. Grossly and idiosyncratically defined — even a “propensity” for carpentry or dress-making was considered a genetically inherited trait — Galton’s remarkably flawed (and deeply racist) ideology soon found favor with a public eager to assert, if nothing else, its own vile claims to vanity.

The social climate into which eugenic doctrine inserted itself appealed to precisely this fantasy, beginning with “Better Baby” and “Fitter Family” contests, an unfortunate staple of recreational entertainment that emerged across the regional United States during the early years of the 20th century. Widely promoted as a wholesome public health initiative, the idea of parading good-looking children for prizes (a practice that essentially likened kids to livestock) was one of a number of practices predicated on the notion that better breeding outcomes were in everyone’s best interest. The resulting photos conferred bragging rights on the winning (read “white”) contestants, but the broader message, which framed beauty, and especially facial beauty, as a scientifically sanctioned community aspiration, implicitly suggested that the inverse was also true: that to be found “unfit” was to be doomed to social exile and thus restricted, among other things, by fierce reproductive protocols.

In 29 states — beginning in 1907 and until the laws were repealed in the 1940s — those deemed socially inferior (an inexcusable euphemism for what was then defined as physically “inadequate”) were, in fact, subject to compulsory sterilization. From asthma to scoliosis, mental disability to moral delinquency, eugenicists denounced difference in light of a presumed cultural superiority, a skewed imperialism that found its most nefarious expression during the Third Reich. To measure difference was to eradicate it, exterminate it, excise it from evolutionary fact. Though ultimately discredited following the atrocities endured during multiple years of Nazi reign, eugenic theory was steeped in this sinister view of genetic governance, manifest destiny run amok.

Later, once detached from Galton’s maniacal gaze, the composite portrait would inspire others to play with the optics of the amalgamated image. The 19th-century French photographer Arthur Batut, known for being one of the first aerial photographers (he shot from a kite), may have been drawn to the hints of movement generated by a portrait’s animated edges. American photographer Nancy Burson has experimented with composite photography to merge black, Asian, and Caucasian faces against population statistics: Introduced in 2000, her Human Race Machine lets you see how you would look as another race. The artist Richard Prince flattened every one of Jerry Seinfeld’s fifty-seven TV love interests into a 2013 composite he called “Jerry’s Girls,” while in 2017, data scientist Giuseppe Sollazzo created a blended face for the BBC that used a carefully plotted algorithm to combine every face in the U.S. Senate.

Galton would have appreciated the speed of the software and the advantages of the algorithm — but what of the ethics of the very act of image capture and comparison, of the ethics of pictorial appropriation itself? There’s an implicit generalization to this kind of image production and indeed, seen over time, composite portraiture would become a way to amalgamate and assess an entire culture, even an era. In a 1931 radio interview, the German portraitist August Sander claimed he wanted to “capture and communicate in photography the physiognomic time exposure of a whole generation,” an observation that reframes the composite as a kind of collected census, or population survey.

The camera, after all, bears witness over time, its outcome an extension of the eye, the mind, the soul of the photographer. Sander was right. (So was Susan Sontag: “Humanity,” she once wrote, “is not one.”) With the advent of better, cheaper, faster, and more mobile technologies for capturing our faces, the time exposure of a whole generation was about to become a great deal more achievable.
