In a week that saw the official death toll from the COVID-19 pandemic in the United States surpass 100,000, when the number of applications for unemployment insurance exceeded 40 million and Americans were protesting in dozens of cities, President Trump launched an attack against social-media platforms, most notably Twitter, Facebook and YouTube. If it were to survive inevitable legal challenges, Trump’s Executive Order would enable government oversight of political speech on Internet platforms. An order that claims to promote freedom of speech would actually crush it. The Executive Order is a mistargeted approach to a serious and nuanced problem.

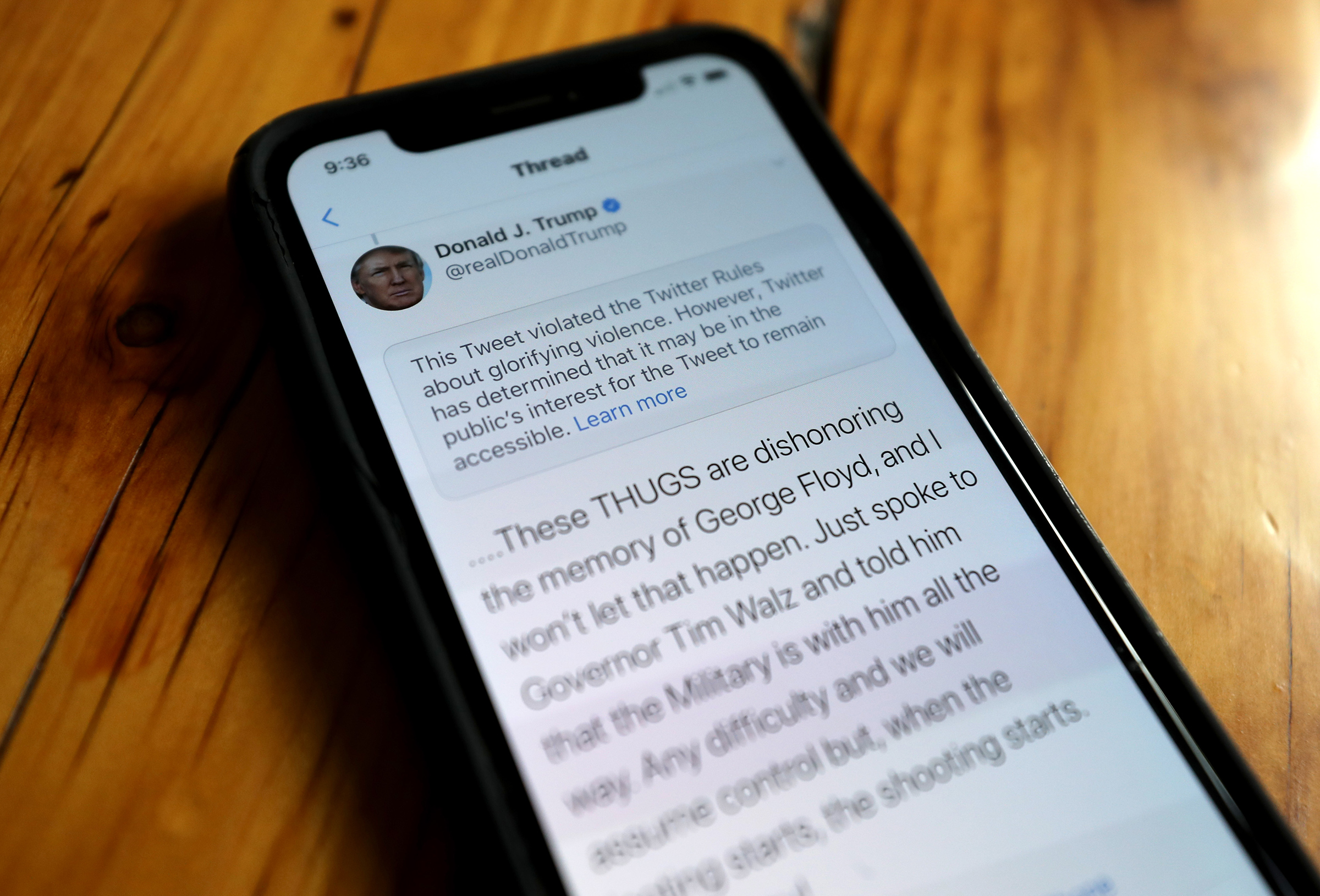

Trump has long argued, without evidence, that Internet platforms are biased against conservative voices. In reality, conservative voices have thrived on these platforms. Facebook, Instagram, Twitter and YouTube have consistently allowed conservatives to violate their terms of service. Twitter recently fact-checked two of Trump's tweets that contained falsehoods. It was the first time the company had done so, and the move appeared to trigger the Executive Order. Twitter subsequently added a warning to a Trump tweet for endangering public safety. These actions were long-overdue efforts to treat the President's posts the same as any other user's. At the same time, Facebook refused to take action on identical posts on its platform.

The President's Executive Order calls for changes to Section 230 of the Communications Decency Act of 1996, a law created to protect the nascent Internet's ability to moderate extreme, harmful and inflammatory content without fear of litigation. Internet platforms have interpreted Section 230 as providing blanket immunity. The courts have concurred, even in cases where platforms directly facilitated terrorism, defamation, false information, communications between adolescents and alleged sexual predators, violations of fair-housing laws, and a range of other harms. The result is that Section 230 acts as both a protective barrier and a subsidy, because platforms do not pay the cost of the damage they do.

As currently interpreted, Section 230 also protects Internet platforms from responsibility for amplifying content that potentially undermines our country’s response to the COVID-19 pandemic and to protests against police brutality. At a time when facts may represent the difference between life and death, the most pervasive communications platforms in society enable the spread of disinformation. Tiny minorities have used Facebook, Instagram, YouTube and Twitter to help convert public-health remedies such as masks and social distancing into elements of the culture wars. These platforms have also played a central role in helping spread white supremacy, climate-change denial and the antivax campaign by giving disproportionate political power to small groups with extreme views. Thanks to the safe harbor of Section 230, Facebook has no incentive to prevent right-wing militias and white supremacists from using its apps as organizing infrastructure.

The benefits of Internet platforms are evident to everyone with access to a computer or smartphone. But like the chemicals industry in the 1950s, Internet giants are exceptionally profitable because they do not pay any cost for the harm they cause. Where industrial companies dumped toxic chemicals in fresh water, Internet platforms pollute society with toxic content. The Internet is central to our way of life, but we have to find a way to get the benefits with fewer harms.

Facebook, Instagram, YouTube, Twitter and others derive their economic value primarily from advertising. They compete for your attention. In the guise of giving consumers what they want, these platforms employ surveillance to identify the hot buttons for every consumer and algorithms to amplify content most likely to engage each user emotionally. Thanks to the fight-or-flight instinct wired into each of us, some forms of content force us to pay attention as a matter of self-preservation. Targeted harassment, disinformation and conspiracy theories are particularly engaging, so the algorithms of Internet platforms amplify them. Harmful content crowds out facts and expertise.

For years, policymakers and users have repeatedly asked the Internet platforms to do a better job. It is clear that self-regulation has failed, because the platforms have no economic incentive to change their behavior. They have taken some half-hearted steps. Facebook and YouTube have hired thousands of underpaid and under-resourced human moderators and invested in artificial intelligence. Twitter and Facebook have implemented fact-checking on an inconsistent basis. Twitter, YouTube and Facebook have banned some extremists, most notably the conspiracy theorist Alex Jones. Each platform makes impressive claims about the benefits of its actions, but the numbers do not tell the true story. Despite their professed diligence, we've seen the worst impulses of humanity continue to thrive.

Without changes to Section 230, election interference will remain a central feature of our democracy, climate deniers will continue to sow confusion and white supremacists will keep spreading fear. I would like to believe that we can enjoy the benefits of Internet platforms without suffering irreparable harm to democracy and public health.

But the goal of regulation should not be to ban speech. That would be contrary to the First Amendment and might further undermine democracy. Stanford researcher Renée DiResta frames the central challenge of reforming Section 230 as “free reach,” the perception by many, including the President, that all content is equally deserving of amplification. When platforms optimize algorithms for attention, or use their ample tools to allow targeting of harmful content to vulnerable communities, the benefits of amplification accrue disproportionately to harmful content.

Changes to Section 230 should be structured to encourage innovation and startups. The goal of any reform should be to limit the spread of harmful speech and conduct, and to restrict business models likely to enable harm.

Algorithms need not fill our feeds with targeted and dehumanizing disinformation and conspiracy theories. They do so now because amplifying emotionally dangerous content is a choice made to maximize profits. There are many other ways to organize a news feed, the most basic of which is reverse chronological order. "Optimizing for engagement" undermines democracy and public health. It increases political polarization and fosters hostility to expertise and facts. It undermines journalism in several ways. It takes advertising dollars from the media, forces news into an environment that discourages critical thinking and puts junk news, disinformation and harmful content on an equal footing with credible news sources.

The optimal solution is unlikely to lie at either extreme: preserving the status quo or eliminating Section 230 altogether. It is more likely to come from modifications that create more constructive incentives. The protections of Section 230 should no longer apply to platforms that use algorithmic amplification for attention. It may be appropriate to extend the exception to all platforms above a certain size, measured by either revenue or users. If these changes were in effect today, platforms like Twitter, Facebook and YouTube would potentially be liable for the harms that result. To complement the changes to Section 230, there should be national legislation giving users the right to sue for damages if they have been harmed as a result of using an Internet platform. The goal of these reforms is to change behavior by changing incentives.

We cannot allow these technology platforms to continue to threaten the core tenets of our democracy or undermine our pandemic response and ability to protest peacefully. Reform should not be done out of spite or through poorly conceived Executive Orders, but in a way that will benefit all of us. President Trump has given us an opportunity to do the right thing. Just not the way he wants.

McNamee, the author of Zucked: Waking Up to the Facebook Catastrophe, is a co-founder of Elevation Partners and an early investor in Facebook.

Contact us at letters@time.com