In the final hours of Dec. 31, 1999, John Koskinen boarded an airplane bound for New York City. He was accompanied by a handful of reporters but few other passengers, among them a tuxedo-clad reveler who was troubled to learn that, going by the Greenwich Mean Time clock used by airlines, he too would enter the 21st century in the air.

Koskinen, however, had timed his flight that way on purpose. He was President Bill Clinton’s Y2K “czar,” and he flew that night to prove to a jittery public, and a scrutinizing press, that after an extensive, multi-year effort, the country was ready for the new millennium.

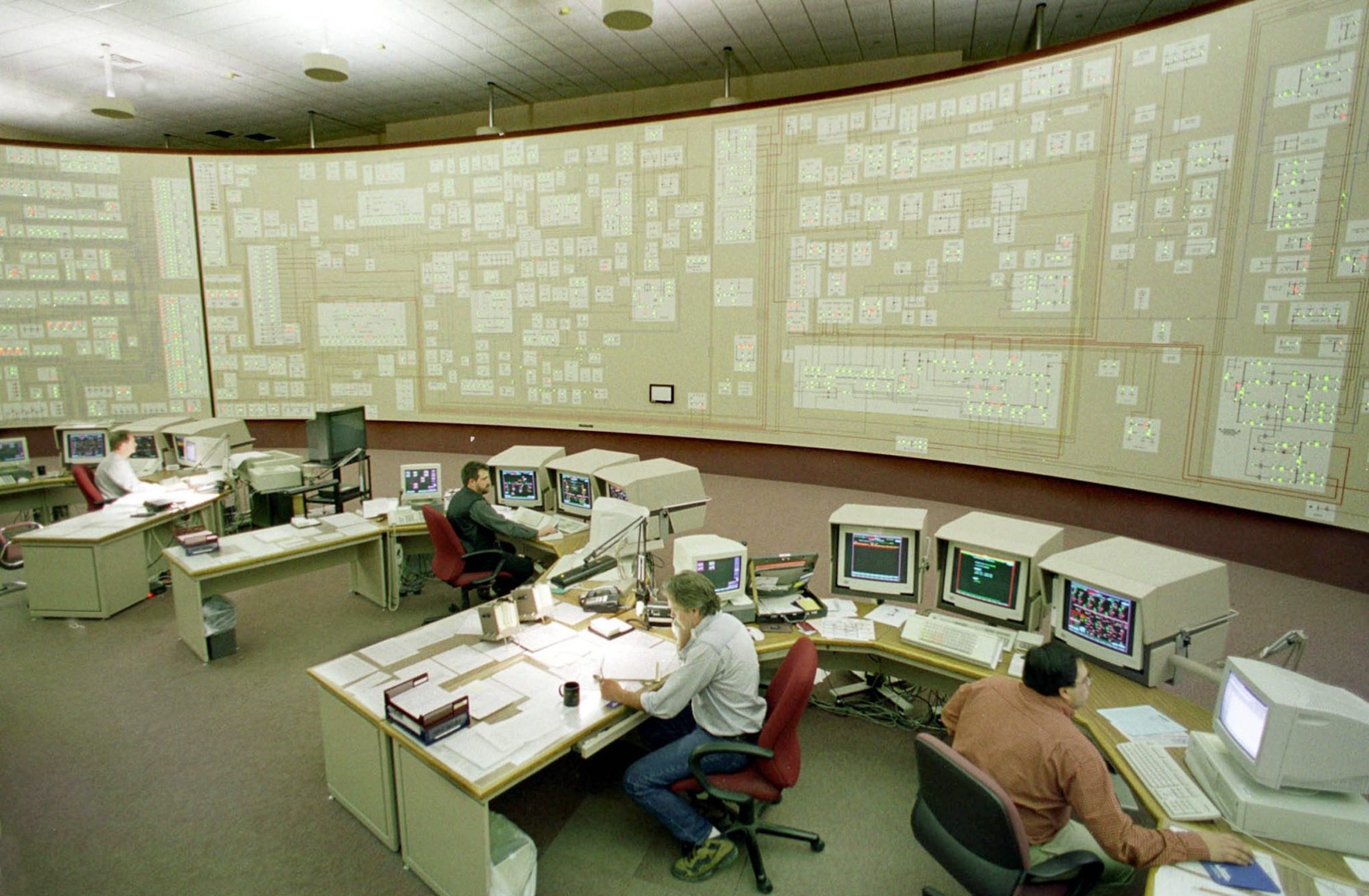

The term Y2K had become shorthand for a problem stemming from the clash of the upcoming Year 2000 and the two-digit year format utilized by early coders to minimize use of computer memory, then an expensive commodity. If computers interpreted the “00” in 2000 as 1900, this could mean headaches ranging from wildly erroneous mortgage calculations to, some speculated, large-scale blackouts and infrastructure damage.
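The arithmetic behind that worry can be sketched in a few lines of Python. This is a minimal illustration, not code from any real system: the function names, the loan figures and the use of simple interest are all assumptions chosen to keep the numbers easy to follow.

```python
def years_elapsed_two_digit(start_yy: int, end_yy: int) -> int:
    """Elapsed years when only the last two digits of each year are stored."""
    return end_yy - start_yy


def years_elapsed_four_digit(start_year: int, end_year: int) -> int:
    """Elapsed years when the full four-digit year is stored."""
    return end_year - start_year


def simple_interest(principal: int, rate_percent: int, years: int) -> int:
    """Simple (non-compounding) interest in whole dollars.

    Integer arithmetic is used so the results are exact; the formula is a
    deliberate simplification, not how a real mortgage is calculated.
    """
    return principal * rate_percent * years // 100


# Hypothetical loan: $100,000 at 7 percent, opened in 1995, evaluated in 2000.
buggy_years = years_elapsed_two_digit(95, 0)            # "00" minus "95"
correct_years = years_elapsed_four_digit(1995, 2000)

buggy_interest = simple_interest(100_000, 7, buggy_years)
correct_interest = simple_interest(100_000, 7, correct_years)

print(f"two-digit years:  {buggy_years} years elapsed, interest {buggy_interest}")
print(f"four-digit years: {correct_years} years elapsed, interest {correct_interest}")
```

With two-digit storage, the program treats the year 2000 as coming 95 years before 1995, so the elapsed time and the interest both come out negative. Storing the full year gives the expected five years.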

It was an issue that everyone was talking about 20 years ago, but few truly understood. “The vast majority of people have absolutely no clue how computers work. So when someone comes along and says look we have a problem…[involving] a two-digit year rather than a four-digit year, their eyes start to glaze over,” says Peter de Jager, host of the podcast “Y2K: An Autobiography.”

Yet it was hardly a new concern — technology professionals had been discussing it for years, long before Y2K entered the popular vernacular.

President Clinton had exhorted the government in mid-1998 to “put our own house in order,” and large businesses — spurred by their own testing — responded in kind, racking up an estimated expenditure of $100 billion in the United States alone. Their preparations encompassed extensive coordination on a national and local level, as well as on a global scale, with other digitally reliant nations examining their own systems.

It was as years of behind-the-scenes work culminated that public awareness peaked. Amid the uncertainty, some Americans stocked up on food, water and guns in anticipation of a computer-induced apocalypse. Ominous news reports warned of possible chaos if critical systems failed, but, behind the scenes, those tasked with avoiding the problem were — correctly — confident the new year’s beginning would not bring disaster.

“The Y2K crisis didn’t happen precisely because people started preparing for it over a decade in advance. And the general public who was busy stocking up on supplies and stuff just didn’t have a sense that the programmers were on the job,” says Paul Saffo, a futurist and adjunct professor at Stanford University.

But even among corporations that were confident in their preparations, there was sufficient doubt to hold off on declaring victory prematurely. The former IT director of a grocery chain recalls executives’ reluctance to publicize their efforts for fear of embarrassing headlines about nationwide cash register outages. As Saffo notes, “better to be an anonymous success than a public failure.”

After the collective sigh of relief in the first few days of January 2000, however, Y2K morphed into a punch line, as relief gave way to derision — as is so often the case when warnings appear unnecessary after they are heeded. It was called a big hoax; the effort to fix it a waste of time.

But what if no one had taken steps to address the matter? Isolated incidents illustrate the potential for adverse consequences, albeit of varying severity, ranging from a comically absurd century’s worth of late fees at a video rental store to a malfunction at a nuclear plant in Tennessee. “We had a problem. For the most part, we fixed it. The notion that nothing happened is somewhat ludicrous,” says de Jager, who was criticized for delivering dire early warnings.

“Industries and companies don’t spend $100 billion or devote these personnel resources to a problem they think is not serious,” Koskinen says, looking back two decades later. “…[T]he people who knew best were the ones who were working the hardest and spending the most.”

The innumerable programmers who devoted months and years to implementing fixes received scant recognition. (One programmer recalls the reward for a five-year project at his company: lunch and a pen.) It was a tedious, unglamorous effort, hardly the stuff of heroic narratives — nor conducive to an outpouring of public gratitude, even though some of the fixes put in place in 1999 are still used today to keep the world’s computer systems running smoothly.

“There was no incentive for everybody to say, ‘we should put up a monument to the anonymous COBOL [Common Business-Oriented Language] programmer who changed two lines of code in the software at your bank.’ Because this was solved by many people in small ways,” says Saffo.

The inherent conundrum of the Y2K debate is that those on both ends of the spectrum — from naysayers to doomsayers — can claim that the outcome proved their predictions correct.

Koskinen and others in the know felt a high degree of confidence 20 years ago, but only because they were aware of the steps that had been taken — an awareness that, decades later, can still lend a bit more gravity to the idea of Y2K. “If nobody had done anything,” he says, “I wouldn’t have taken the flight.”
