Machines can discriminate in harmful ways.

I experienced this firsthand, when I was a graduate student at MIT in 2015 and discovered that some facial analysis software couldn’t detect my dark-skinned face until I put on a white mask. These systems are often trained on images of predominantly light-skinned men. And so, I decided to share my experience of the coded gaze, the bias in artificial intelligence that can lead to discriminatory or exclusionary practices.

Altering myself to fit the norm—in this case better represented by a white mask than my actual face—led me to realize the impact of the exclusion overhead, a term I coined to describe the cost of systems that don’t take into account the diversity of humanity. How much does a person have to change themselves to function with technological systems that increasingly govern our lives?

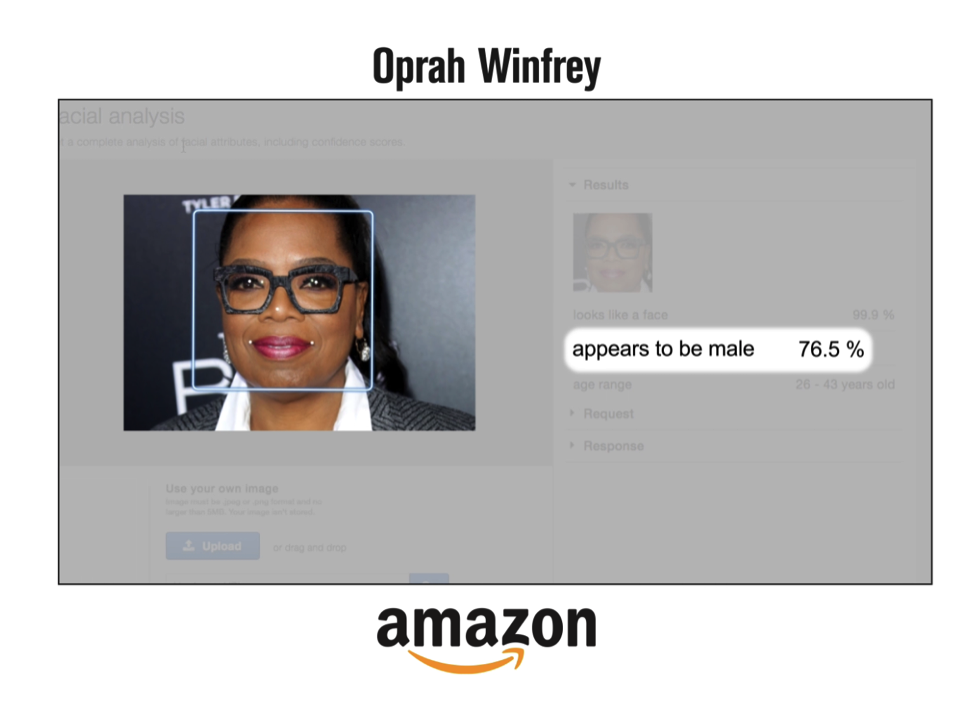

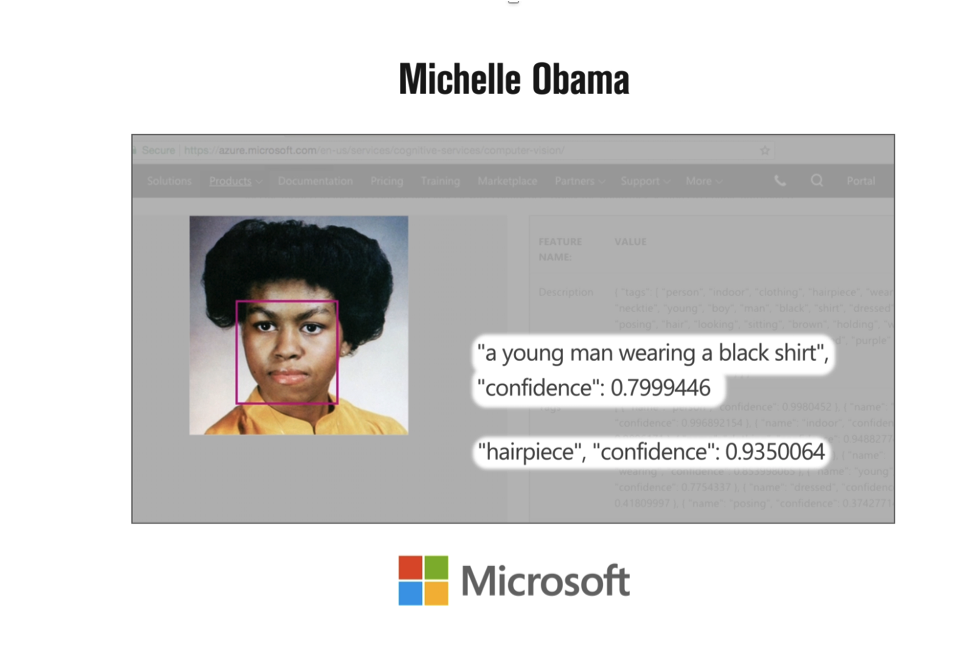

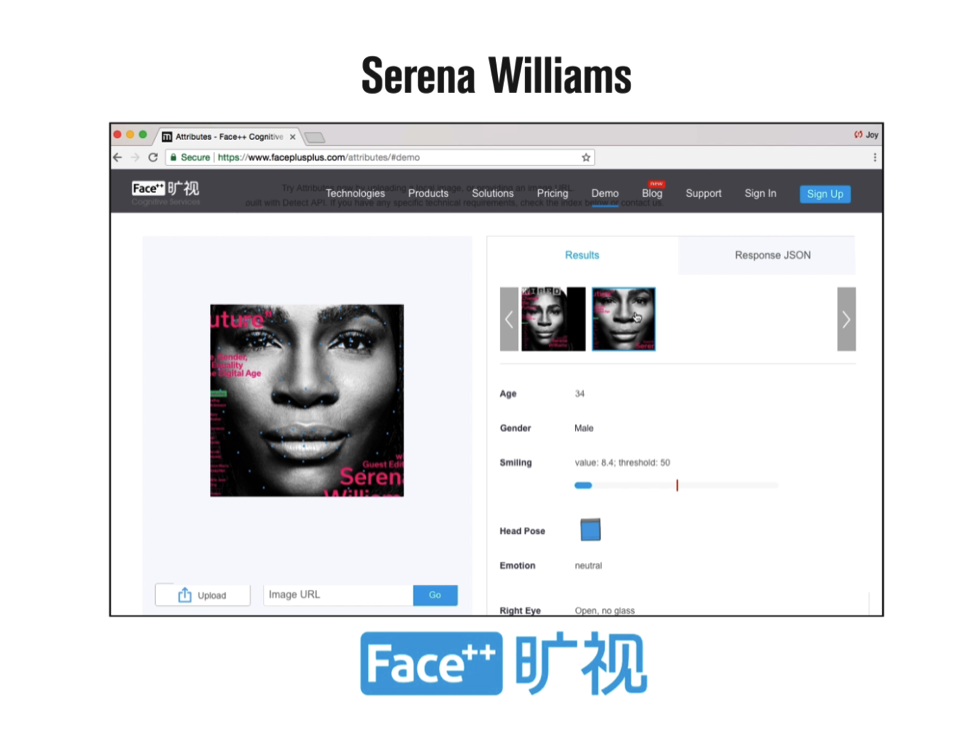

We often assume machines are neutral, but they aren’t. My research uncovered substantial gender and racial bias in AI systems sold by tech giants like IBM, Microsoft, and Amazon. Given the task of guessing the gender of a face, every company’s system performed substantially better on male faces than on female faces. The systems I evaluated had error rates of no more than 1% for lighter-skinned men. For darker-skinned women, the errors soared to 35%. AI systems from leading companies have failed to correctly classify the faces of Oprah Winfrey, Michelle Obama, and Serena Williams. When technology denigrates even these iconic women, it is time to re-examine how these systems are built and who they truly serve.
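Gaps like these only become visible when error rates are broken out by subgroup instead of being reported as one overall number. The snippet below is a minimal sketch of that kind of disaggregated tally, not the code used in the research described here. The function name `error_rates_by_group` and the toy records are hypothetical and made up purely for illustration; they are not real measurements.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return {group: fraction of records whose prediction was wrong}.

    Each record is a (group, true_label, predicted_label) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, true_label, predicted_label in records:
        totals[group] += 1
        if predicted_label != true_label:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical toy records, invented for illustration only.
toy_records = [
    ("lighter-skinned men", "male", "male"),
    ("lighter-skinned men", "male", "male"),
    ("lighter-skinned men", "male", "male"),
    ("lighter-skinned men", "male", "male"),
    ("darker-skinned women", "female", "female"),
    ("darker-skinned women", "female", "male"),
    ("darker-skinned women", "female", "female"),
    ("darker-skinned women", "female", "male"),
]

rates = error_rates_by_group(toy_records)

# A single aggregate figure averages the two groups together.
overall = sum(1 for _, truth, pred in toy_records if truth != pred) / len(toy_records)

for group, rate in rates.items():
    print(f"{group}: {rate:.0%} error")
print(f"overall: {overall:.0%} error")
```

In this toy data the aggregate error rate sits between the two subgroup rates, so reporting it alone would hide that one group bears all of the errors.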

There’s no shortage of headlines highlighting tales of failed machine learning systems that amplify, rather than rectify, sexist hiring practices, racist criminal justice procedures, predatory advertising, and the spread of false information. Though these research findings can be discouraging, at least we’re paying attention now. This gives us the opportunity to highlight issues early and prevent pervasive damage down the line. Computer vision experts, the ACLU, and the Algorithmic Justice League, which I founded in 2016, have all uncovered racial bias in facial analysis and recognition technology. Given what we know now, as well as the history of racist police brutality, there needs to be a moratorium on using such technology in law enforcement—including in equipping drones or police body cameras with facial analysis or recognition software for lethal operations.

See the 2019 Optimists issue, guest-edited by Ava DuVernay.

We can organize to protest this technology being used dangerously. When people’s lives, livelihoods, and dignity are on the line, AI must be developed and deployed with care and oversight. This is why I launched the Safe Face Pledge to prevent the lethal use and mitigate abuse of facial analysis and recognition technology. Already three companies have agreed to sign the pledge.

As more people question how seemingly neutral technology has gone astray, it’s becoming clear just how important it is to have broader representation in the design, development, deployment, and governance of AI. The underrepresentation of women and people of color in technology, and the under-sampling of these groups in the data that shapes AI, have led to the creation of technology that is optimized for a small portion of the world. Less than 2% of employees in technical roles at Facebook and Google are black. At eight large tech companies evaluated by Bloomberg, women make up only around a fifth of the technical workforce at each. I found one government dataset of faces collected for testing that contained 75% men, 80% lighter-skinned individuals, and less than 5% women of color, echoing the pale male data problem that excludes so much of society in the data that fuels AI.

Issues of bias in AI tend to most adversely affect the people who are rarely in positions to develop technology. Being a black woman, and an outsider in the field of AI, has enabled me to spot issues many of my peers overlooked.

I am optimistic that there is still time to shift towards building ethical and inclusive AI systems that respect our human dignity and rights. By working to reduce the exclusion overhead and enabling marginalized communities to engage in the development and governance of AI, we can work toward creating systems that embrace full spectrum inclusion.

In addition to lawmakers, technologists, and researchers, this journey will require storytellers who embrace the search for truth through art and science. Storytelling has the power to shift perspectives, galvanize change, alter damaging patterns, and reaffirm to others that their experiences matter. That’s why art can explore the emotional, societal, and historical connections of algorithmic bias in ways academic papers and statistics cannot. And as long as stories ground our aspirations, challenge harmful assumptions, and ignite change, I remain hopeful.
