In 2015, a man named Nigel Richards won the title of French-language Scrabble World Champion. This was especially noteworthy because Richards does not speak French. What the New Zealander had done was memorize all 386,000 words in the French Scrabble dictionary, in the space of just nine weeks.

Richards’ impressive feat is a useful metaphor for how artificial intelligence works: real AI, not the paranoid fantasies that some self-appointed “futurists” like to warn us about. Just as Richards committed vast troves of words to memory in order to master the domain of the Scrabble board, state-of-the-art AI (deep learning) takes in massive amounts of data from a single domain and automatically learns from that data to make specific decisions within that domain. Deep learning can automatically optimize human-given goals, called “objective functions,” with enormous memory and superhuman accuracy.

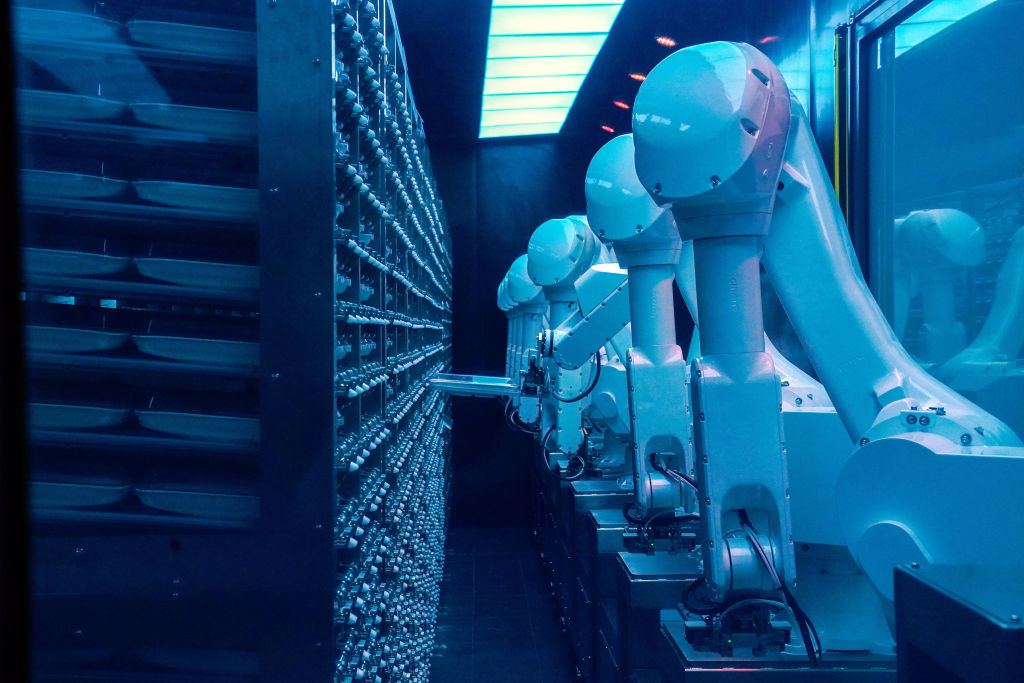

While limited in scope, deep learning is usable by everyone and powerful within a certain domain. It can help Amazon maximize profit from recommendations or Facebook maximize minutes spent by users in its app, just as it can help banks minimize loan-default rates or an airport camera determine if a terrorist has queued up for boarding.

The potential applications for AI are extremely exciting. Autonomous vehicles, for example, will dramatically reduce cost and improve safety and efficiency. But the rise of AI also brings many challenges, and it’s worth taking time to separate the genuine risks of this coming technological revolution from the misunderstandings and hype that sometimes surround the topic.

First, let’s talk about job displacement. Because AI can outperform humans at routine tasks (provided the task is in one domain with a lot of data), it is technically capable of displacing hundreds of millions of white- and blue-collar jobs in the next 15 years or so.

But not every job will be replaced by AI. In fact, four types of jobs are not at risk at all. First, there are creative jobs. AI needs to be given a goal to optimize; it cannot invent the way scientists, novelists and artists can. Second, there are complex, strategic jobs, such as those of executives, diplomats and economists, which go well beyond AI’s limitation to a single domain with Big Data. Third, there are the as-yet-unknown jobs that AI itself will create.

Are you worried that these three types of jobs won’t employ as many people as AI will displace? Not to worry, as the fourth type is much larger: empathetic and compassionate jobs, such as teachers, nannies and doctors. These jobs require compassion, trust and empathy—which AI does not have. And even if AI tried to fake it, nobody would want a chatbot telling them they have cancer, or a robot to babysit their children.

So there will still be jobs in the age of AI. The key then must be retraining the workforce so people can do them. This must be the responsibility not just of the government, which can provide subsidies, but also of corporations and AI’s ultra-wealthy beneficiaries.

As well as displacing jobs, AI has the potential to multiply inequality, both between the ultra-wealthy and displaced workers and among countries. Unlike the U.S. and China, poorer and smaller countries will be unable to reap the economic rewards that will come with AI and will be less well placed to mitigate job displacement.

The technology also poses serious challenges in terms of security; the consequences of hacking into AI-controlled systems could be severe—imagine if autonomous vehicles were hacked by terrorists and used as weapons.

Finally, there are the issues of privacy, exacerbated bias and manipulation. Sadly, we’ve already seen failures on this front: Facebook couldn’t resist the temptation to use AI technology to optimize usage and profit at the expense of user privacy, fostering bias and division along the way.

All of these risks require governments, businesses and technologists to work together to develop a new rule book for AI applications. What happened with Facebook is proof that self-regulation is bound to fail. And rather than compete against one another, countries must share best practices and work together to ensure this technology is used for the good of all.

One thing we don’t have to worry about is AI making humans obsolete, despite the fevered predictions of utopians and dystopians. The former predict we shall be “assimilated” and evolve into human cyborgs; the latter warn of world domination by robot overlords. Neither camp is showing much in the way of actual intelligence about artificial intelligence.

The age of “artificial general intelligence,” when AI will be able to perform intellectual tasks better than humans, is far in the distance. General AI requires advanced capabilities such as reasoning, conceptual learning, common sense, planning, creativity and even self-awareness and emotions, all of which remain beyond our scientific reach. There are no known engineering paths to evolve toward these general capabilities. And big breakthroughs will not come easily or quickly.

Think back to Nigel Richards, who defeated the Francophone world at Scrabble. He had an amazing ability to memorize data and optimally select from permutations. But if you asked him to evaluate a novel by Gustave Flaubert, he would be completely lost. So asking when AI will entirely surpass humans is a little like asking when Richards will win the Prix Goncourt, France’s most prestigious literary prize. It’s not entirely impossible, but it is extremely unlikely.
