Imagine picking up the morning newspaper and feeling moral outrage at the latest action taken by the opposing political party. Or turning the page and seeing people around the world suffering famine and heartbreak, and flinching with empathy at their pain.

One of the most fundamental tasks we have as social creatures is to figure out whom we can trust, whom we should help and who means us harm. These are questions that are central to morality in everyday life.

In our work, we use tools from psychology to better understand these gut-level moral reactions that matter for everyday life. My research focuses on two facets of morality: moral judgments and empathy for the pain of others. Below, I discuss two new behavioral measures I have developed with my colleagues to capture these moral sentiments.

Why not just ask people?

One way to get a sense of people’s moral beliefs is to simply ask them. A researcher could ask you to rate on a one-to-five scale how morally wrong a particular action is, such as assaulting someone. Or the researcher could ask you to report how frequently you tend to feel empathy for other people in everyday life.

One potential problem with asking people to self-report their reactions is that these reports can be influenced by many factors, especially when the topics are sensitive, as morality and empathy are. If people think their reputation is at stake, they may be very good at reporting what they think others want to hear.

So self-reports are sometimes useful, but people sometimes edit them to give a good impression to others. If you want to know who is likely to feel your pain, rather than cause you pain, then relying on self-report, although a good start, may not always be enough.

A new measure of moral judgment

Rather than asking people what they think is moral, or how much empathy they feel, our work attempts to assess people’s immediate, spontaneous reactions before they have had much time to think at all. In other words, we examine how people behave to get a sense of their moral reactions.

For example, consider the new task that my collaborators and I developed to measure people’s gut reactions that certain actions are morally wrong. Gut reactions have been thought by many psychologists to play a powerful role in moral decision-making and behavior.

In this task, people go through a series of trials. In each trial, they see two words flash, one after the other. These words are actions typically thought either to be morally wrong or morally neutral. People are asked to judge whether the second words describe actions that are morally wrong, while avoiding being influenced by the first words. So, for example, in a particular trial, people might see “murder” immediately followed by “baking.” Their task is to judge whether “baking” is wrong while ignoring any influence of “murder.”

People are also not given much time to respond. If they take longer than half a second to respond, they get an annoying warning to “Please respond faster.” This is meant to make sure people respond without thinking too much.

My collaborators and I find that people make a systematic pattern of mistakes. When they see morally wrong actions such as “murder” come first, they make mistaken moral judgments about the actions that come second: They are more likely to mistakenly judge neutral actions such as “baking” as morally wrong. The idea here is that people are having a gut moral reaction to the words that come first, which is shaping how they make moral judgments about the words that come second.

The effect described above occurs even when people intend to prevent it. So even if you are trying to stop that first word from influencing you, it still does.
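The pattern of mistakes described above can be expressed as a simple difference in error rates. The following is a minimal sketch, not the authors' analysis code: the `Trial` fields, the function names, and the example trials are hypothetical, and the scoring rule (a plain difference in proportions) is an assumption chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Trial:
    # All field names are hypothetical, chosen for this illustration.
    prime_wrong: bool    # first word is a morally wrong action (e.g. "murder")
    target_wrong: bool   # second word is a morally wrong action
    judged_wrong: bool   # participant judged the second word as morally wrong


def false_alarm_rate(trials: List[Trial], prime_wrong: bool) -> float:
    """Share of neutral second words mistakenly judged wrong after a given kind of first word."""
    relevant = [
        t for t in trials
        if t.prime_wrong == prime_wrong and not t.target_wrong
    ]
    if not relevant:
        raise ValueError("no neutral-target trials for this prime type")
    return sum(t.judged_wrong for t in relevant) / len(relevant)


def priming_effect(trials: List[Trial]) -> float:
    """Extra mistakes on neutral words when a morally wrong word came first."""
    return false_alarm_rate(trials, True) - false_alarm_rate(trials, False)


# Made-up trials for one participant; every second word here is neutral.
example_trials = [
    Trial(True, False, True),
    Trial(True, False, True),
    Trial(True, False, False),
    Trial(True, False, False),
    Trial(False, False, True),
    Trial(False, False, False),
    Trial(False, False, False),
    Trial(False, False, False),
]

effect = priming_effect(example_trials)
```

A positive value means the participant mislabeled neutral actions as wrong more often after a morally wrong first word than after a neutral one, which is the direction of the mistake pattern described in the text.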

You might ask: Does this connect to real-world morality? After all, responding quickly to words on a screen may not track the moral values we care about.

We find that people who show a stronger response on our task have features of a “moral personality.” We correlated the effect on our morality task with people’s self-reported measures of morally relevant traits.

People who show a stronger response on our task are more likely to feel guilt when considering doing unethical actions. They are more likely to indicate caring about being a moral person. And they report fewer psychopathic tendencies such as callousness. These associations are modest, but suggest that we’re capturing something relevant to morality.
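To make the idea of correlating task scores with self-reported traits concrete, here is a small sketch that computes a Pearson correlation coefficient. The text does not say which correlation statistic was used, so Pearson's r is an assumption, and the function name and the numbers are invented purely for illustration.

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between two equal-length lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products of deviations from each mean.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / (spread_x * spread_y)


# Hypothetical data: one task score and one self-reported guilt rating per person.
task_effect = [0.05, 0.10, 0.20, 0.25]
guilt_rating = [2.0, 3.0, 3.0, 5.0]

r = pearson_r(task_effect, guilt_rating)
```

Values of `r` near zero would indicate little relationship, while values closer to 1 or -1 would indicate a stronger one. The associations reported in the text are described as modest.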

A new measure of empathy

My collaborators and I have taken a similar approach to understanding empathy, or the tendency to vicariously feel the pain of others. Empathy research has often gone beyond self-report to use brain imaging or physiology as measures. But these are often quite costly to implement and may not always provide a clear lens on social emotions.

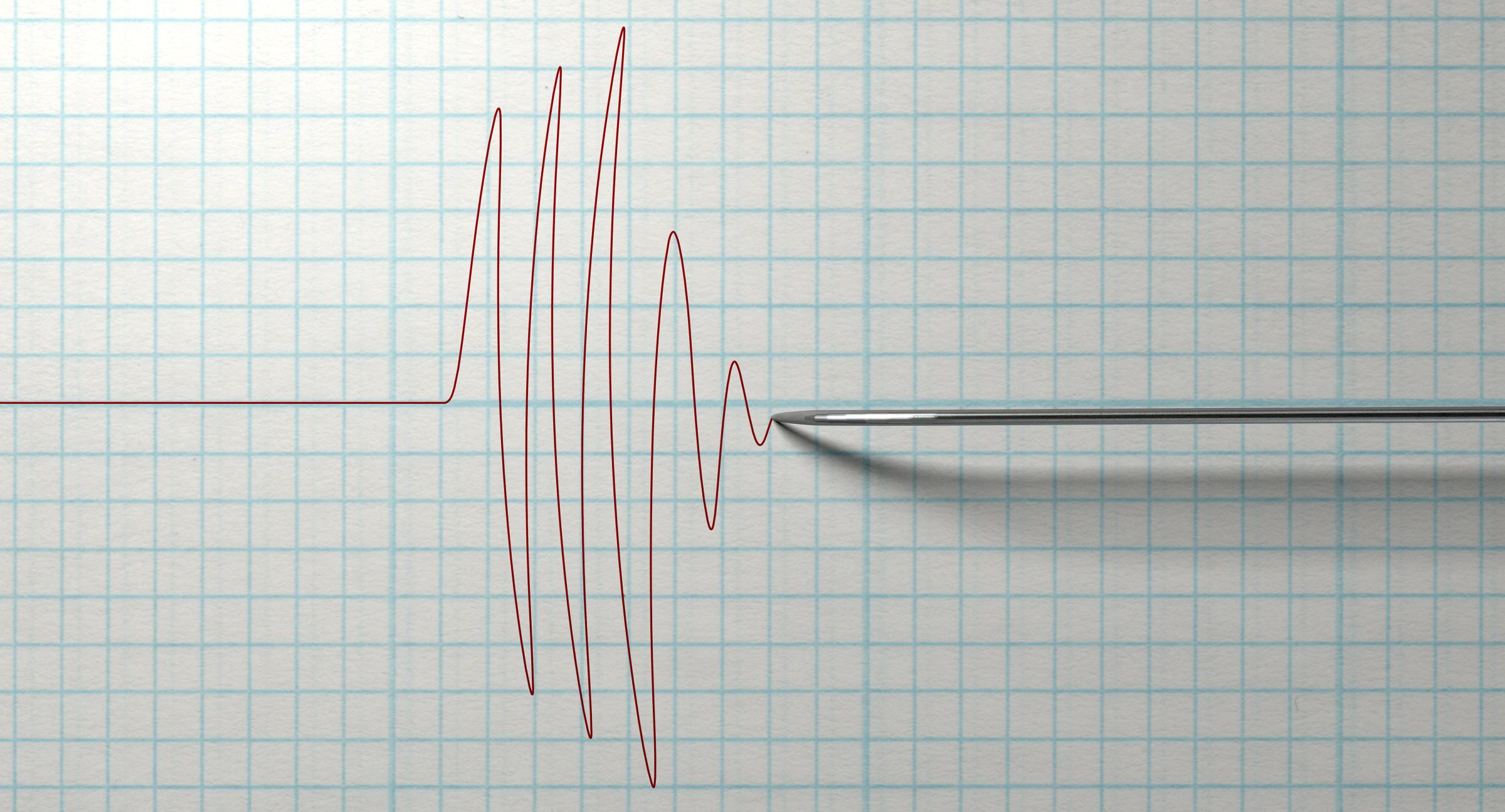

We created a new empathy task that’s very similar to the morality task except this time, people see two images rather than two words. The images depict hands being pierced with needles or brushed with Q-tips, which are implements that are considered respectively painful and nonpainful by most people.

People are asked to judge whether the experiences of the second images are painful or not, while avoiding being influenced by the first images.

As with the morality task, people show a systematic and robust pattern of mistakes; when they see painful experiences (i.e., needles) come first, they are more likely to mistakenly judge nonpainful experiences (i.e., Q-tips) as painful.

Importantly, we found that the empathy measured in our behavioral task connected to costly prosocial behavior: In one of our experiments, people who showed stronger empathetic reactions donated more of their own money to cancer charities when given the opportunity to do so.

Where do we go from here?

So, how can researchers use these tasks, and what might the tasks imply for everyday moral interactions?

The tasks could help identify who lacks the moral sentiments that support moral behavior. For example, criminal psychopaths can self-report normal feelings of empathy and morality, and yet their behavior suggests otherwise. By assessing their gut-level behavioral responses, researchers may be better able to detect whether such offenders differ in morality and empathy.

In terms of everyday interactions, it might be good to understand people’s gut-level moral reactions: This may provide some indication of who shares your values and moral beliefs.

More research is needed to understand the nature of the moral sentiments captured by our tasks. These sentiments could change over time, and it is important to know whether they predict a broader range of behaviors relevant to ethics and morality.

In sum, if we want to know who shares our moral sentiments, just asking others may not be enough. Self-reports are useful, but may not provide a complete picture of human morality. By looking at how people behave when they don’t have much time to think, we can see whether their moral sentiments compel them even when they intend otherwise.

C. Daryl Cameron, Assistant Professor of Psychology and Research Associate in the Rock Ethics Institute, Pennsylvania State University

This article was originally published on The Conversation. Read the original article.
