Sometimes it’s the quick and easy emails that go unanswered. Perhaps a friend is asking if you’re available for dinner, and you forgot to respond even though you were free that night.

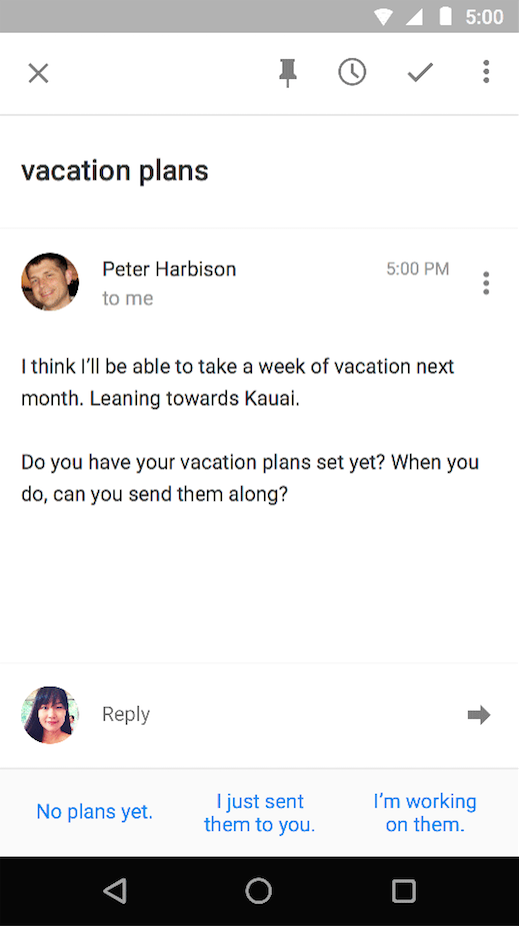

Google’s latest feature for Inbox, the email app it unveiled last October, attempts to make situations like this easier to handle by suggesting responses to emails. Before you even press the reply button, Google will suggest three responses based on the content of a received email.

The feature, which is called Smart Reply and launches on Nov. 5, uses machine learning to understand the context of a message and compose replies that make sense.

Machine learning refers to specific types of computer algorithms that can learn how to do things, such as completing a task or making predictions, without being specifically programmed to do so.

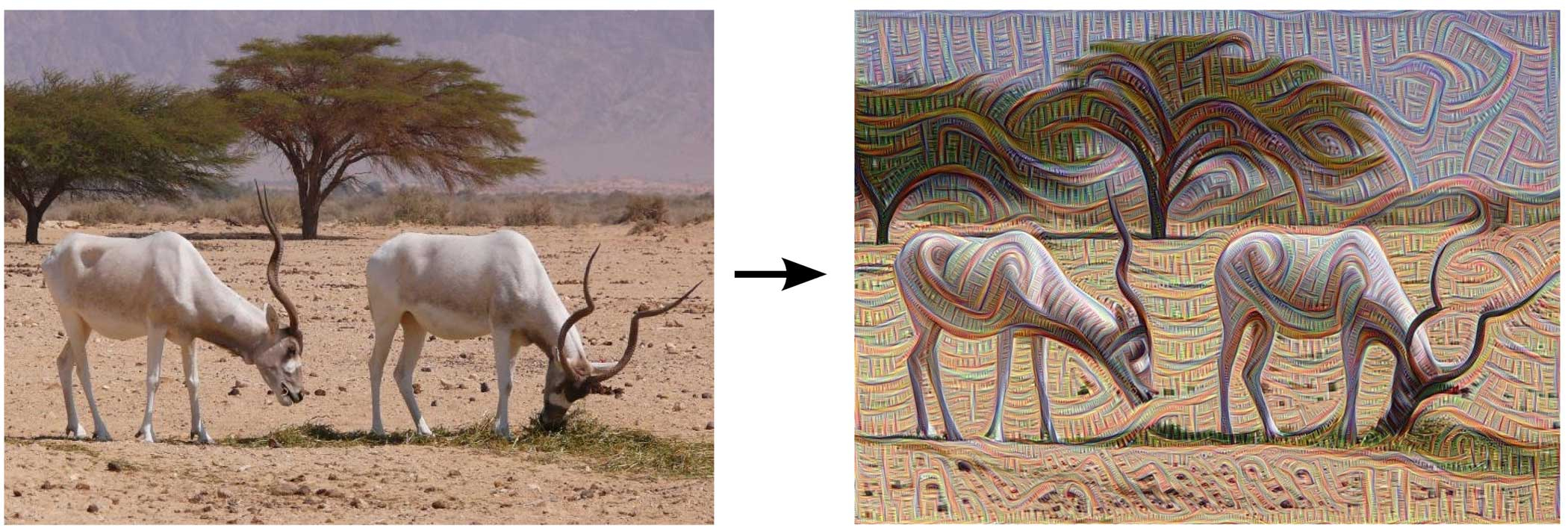

Google uses machine learning in several of its apps and services, including its Photos app, which can intelligently decipher subjects in photos so that the user can perform really targeted searches. For instance, a search for “dogs” should pull up all of the images in your library that contain dogs.

Google promises this new Smart Reply feature will improve as the user chooses responses from its suggestions more often. This makes sense — the more Smart Reply is used, the more Google learns about how that particular user typically responds to emails. Therefore, it can make predictions that are more accurate.
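To make that idea concrete, here is a toy sketch in Python of suggestions that shift as a user picks them. It is not how Google's Smart Reply works; the `ReplySuggester` class, its methods, and the canned replies are all hypothetical, and the simple tally of past choices stands in for the far more sophisticated machine learning described above.

```python
from collections import Counter


class ReplySuggester:
    """Toy suggester (hypothetical): ranks canned replies by how often the user chose them."""

    def __init__(self, candidates):
        self.candidates = list(candidates)
        self.picks = Counter()  # how many times each reply has been chosen

    def suggest(self, k=3):
        # Most-picked replies come first; ties keep their original order
        # because Python's sort is stable.
        ranked = sorted(self.candidates, key=lambda reply: -self.picks[reply])
        return ranked[:k]

    def record_choice(self, reply):
        # Each choice nudges that reply higher in future suggestions.
        self.picks[reply] += 1


suggester = ReplySuggester(
    ["Sounds good!", "I can't make it.", "Let me check.", "See you then!"]
)
first = suggester.suggest()

# The user picks the same reply twice, so it moves to the top.
suggester.record_choice("See you then!")
suggester.record_choice("See you then!")
later = suggester.suggest()
```

In this sketch, "See you then!" is left out of the first three suggestions but leads the list once the user has chosen it a couple of times, which is the basic feedback loop the paragraph above describes.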

Smart Reply isn’t revolutionary — similar features exist on smartwatches, as typing on a tiny screen or speaking into a watch isn’t usually ideal. Still, it seems like a handy addition for responding to emails quickly on the go.

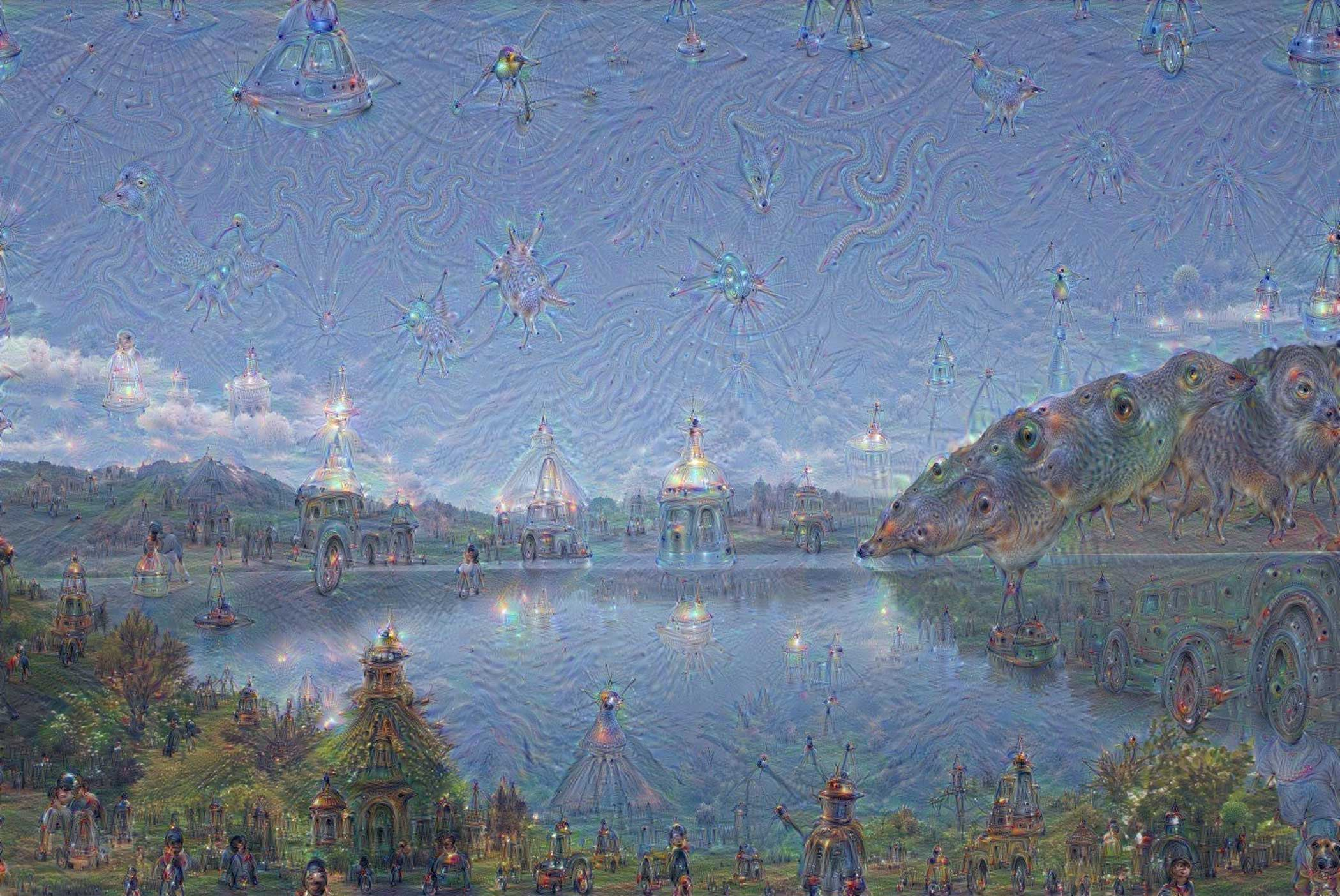

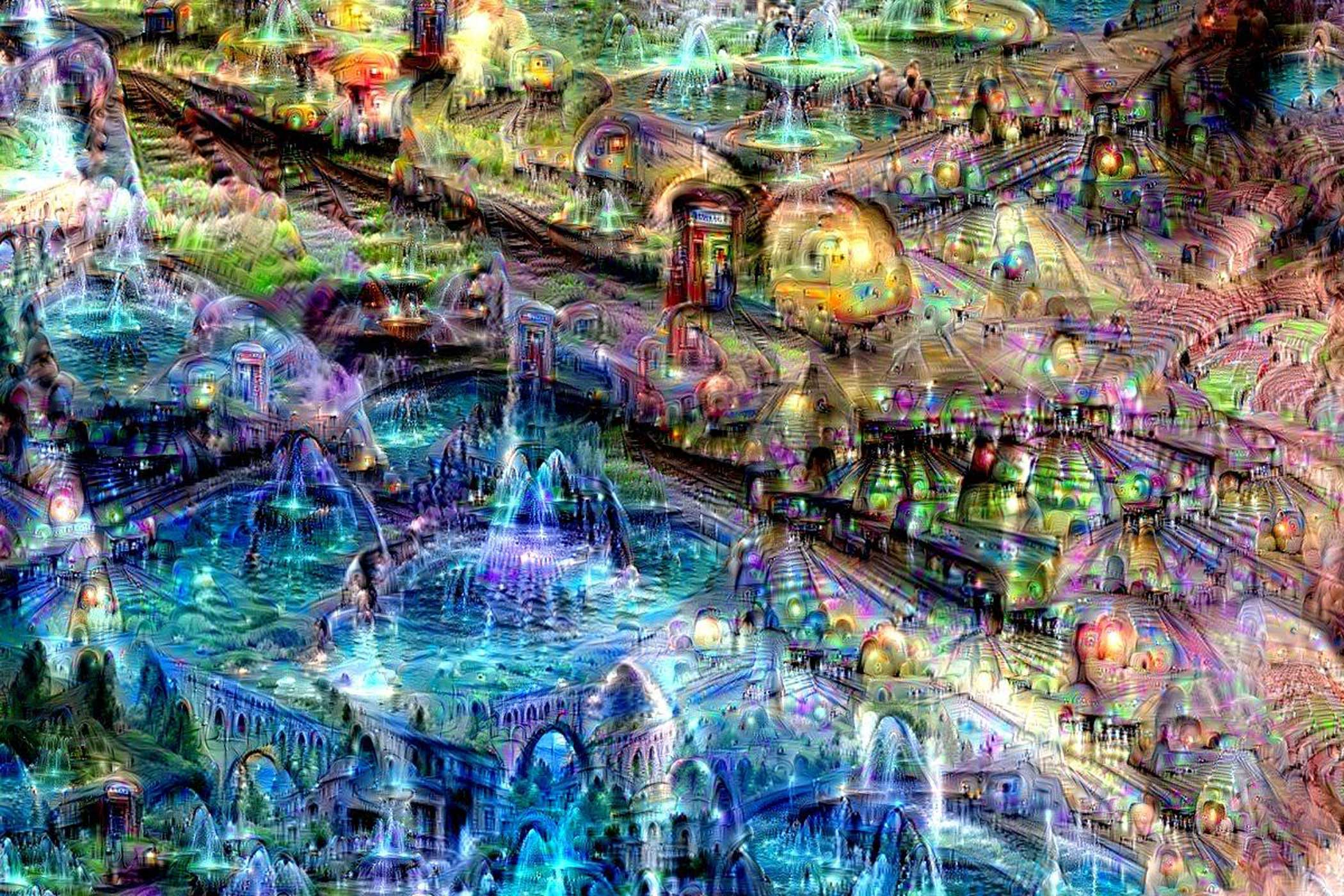

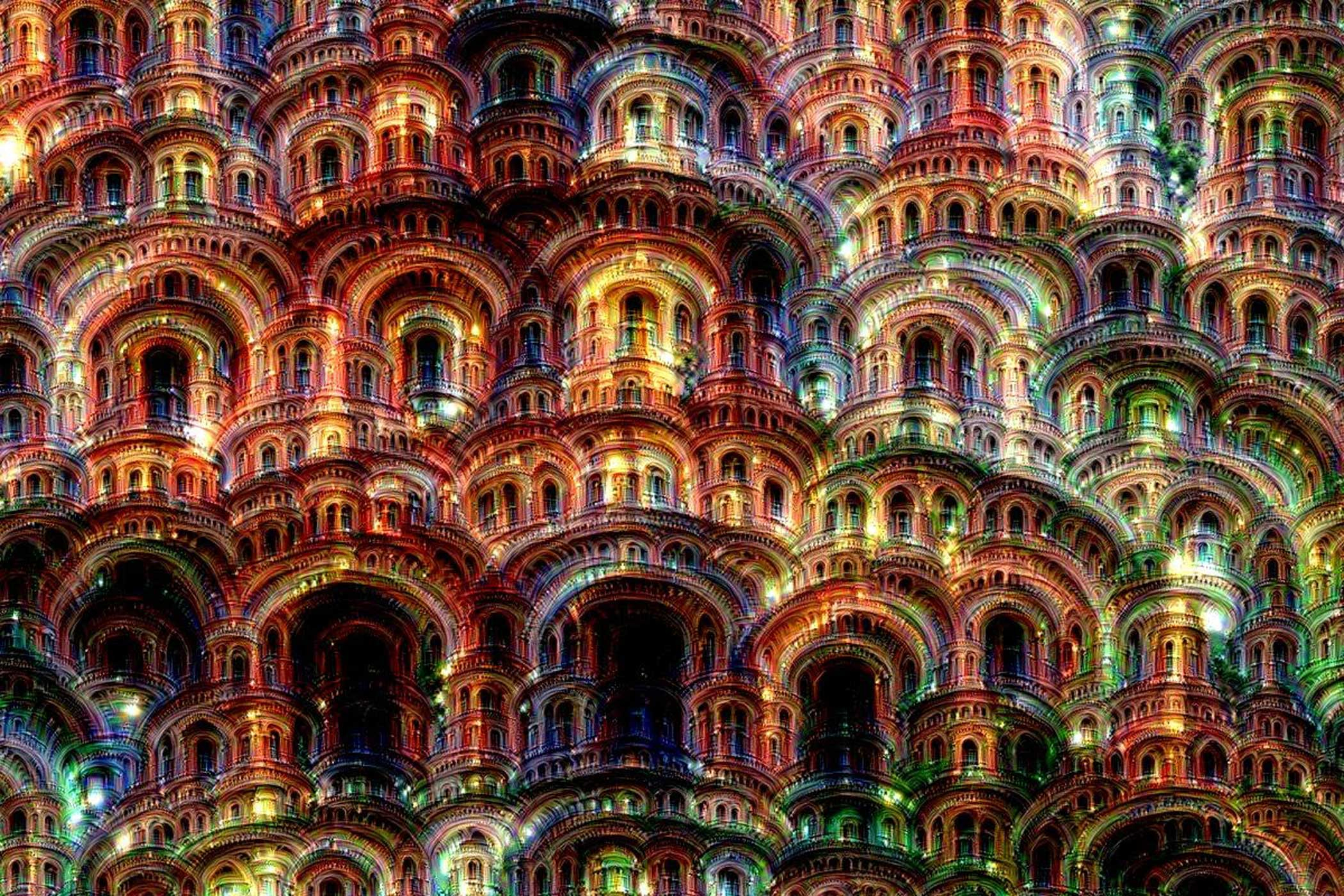

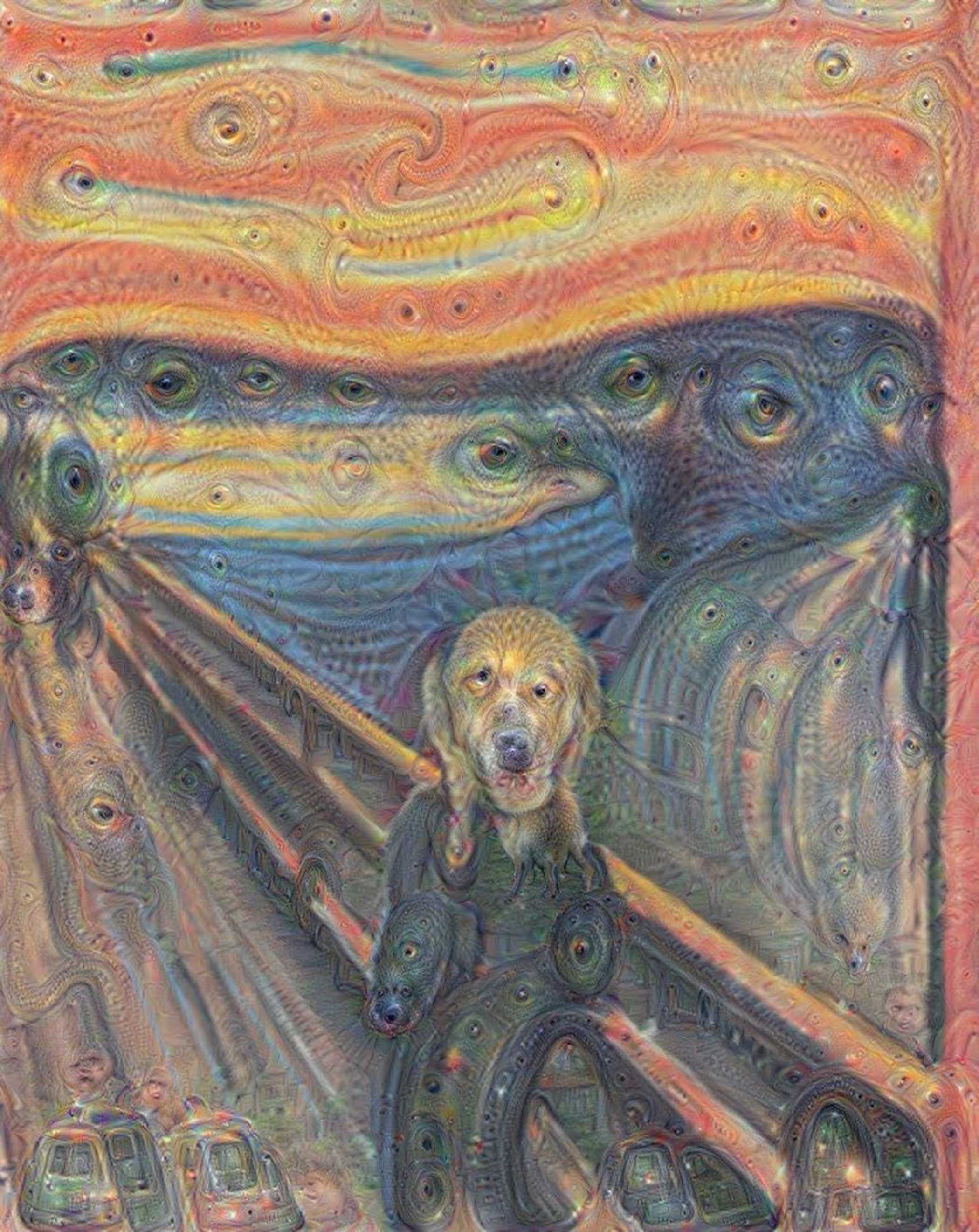

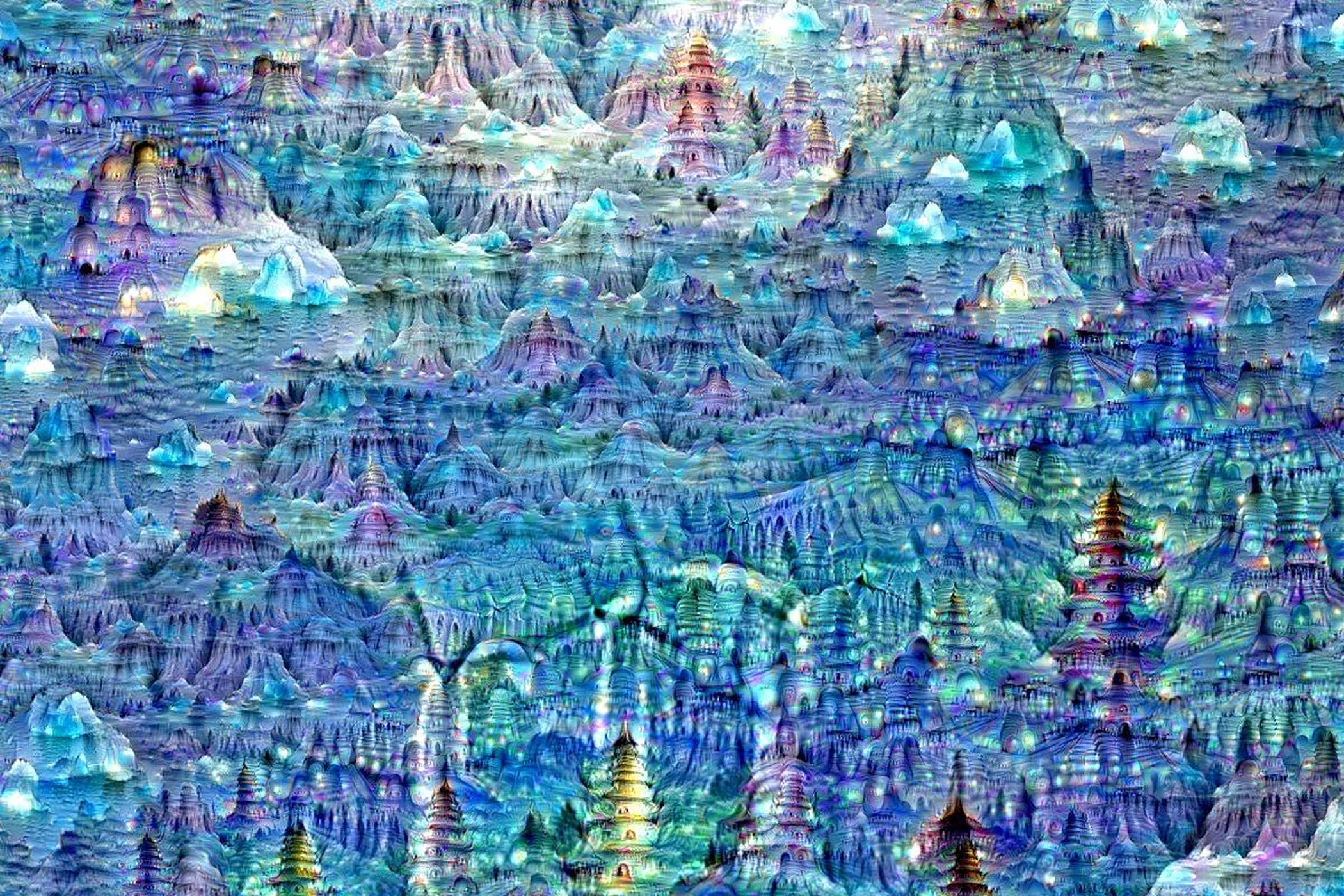

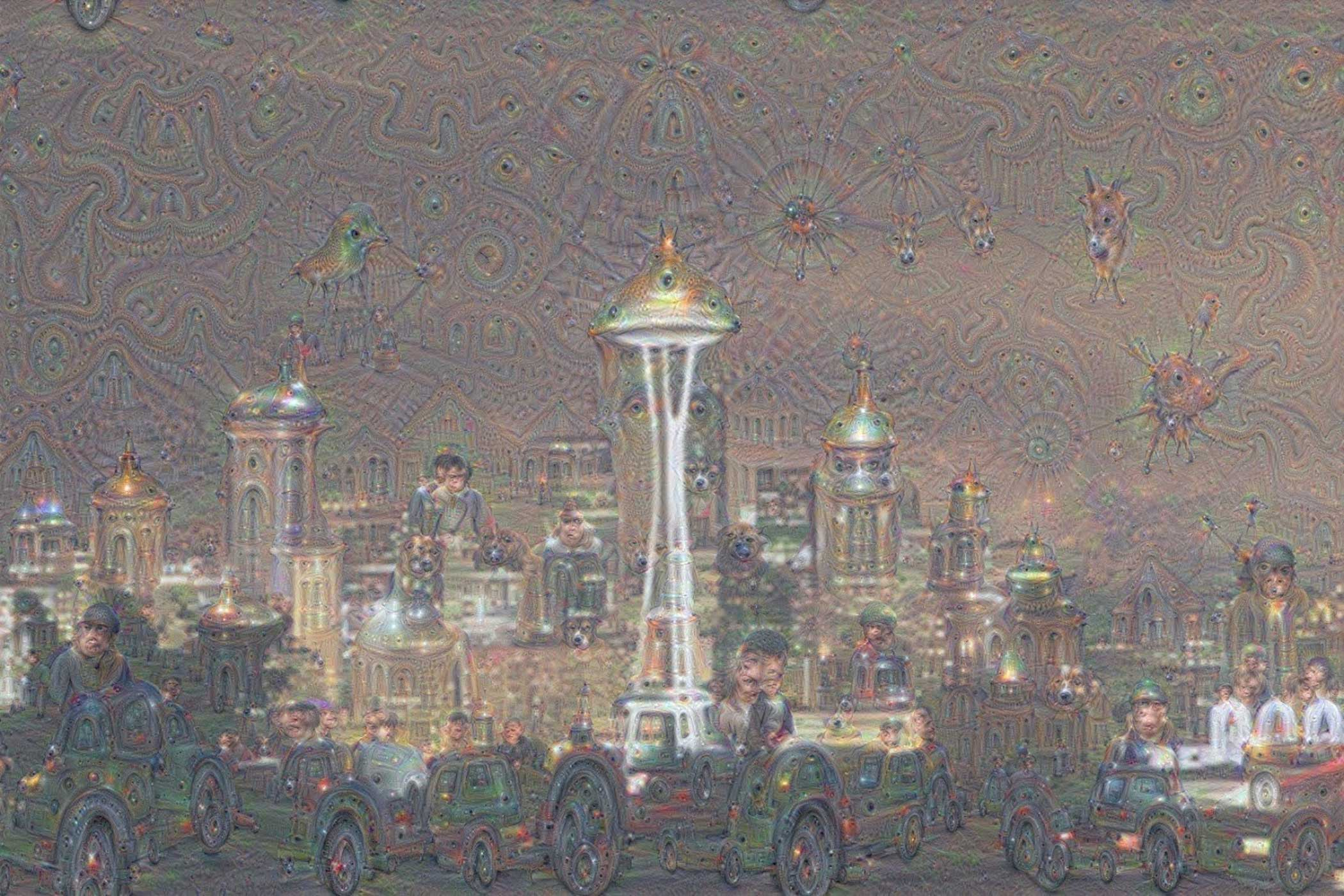

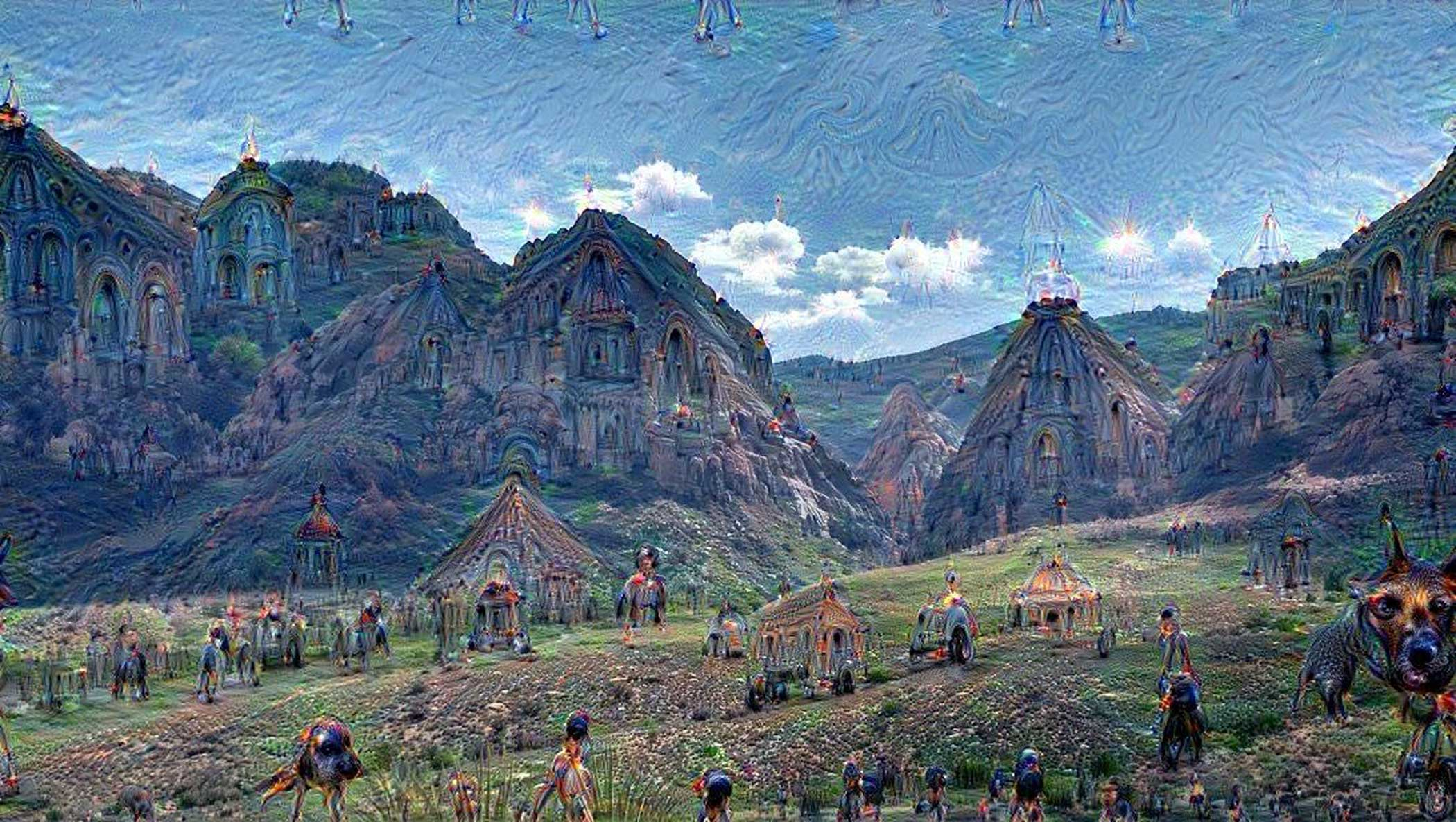

See the Fantastically Weird Images Google’s Self-Evolving Software Made

