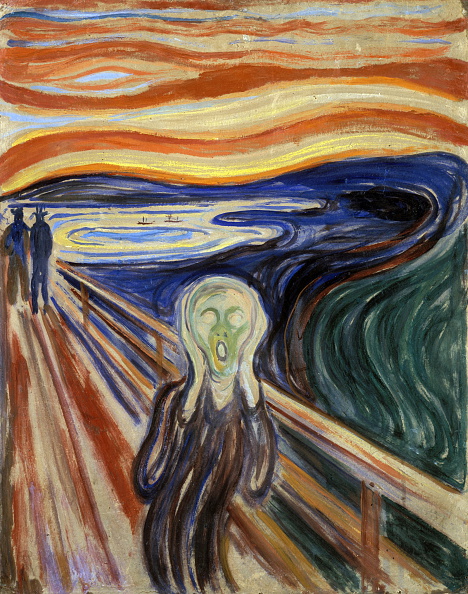

From Picasso’s The Young Ladies of Avignon to Munch’s The Scream, what was it about these paintings that arrested people’s attention upon viewing them, that cemented them in the canon of art history as iconic works?

In many cases, it’s because the artist incorporated a technique, form or style that had never been used before. They exhibited a creative and innovative flair that would go on to be mimicked by artists for years to come.

Throughout human history, experts have often highlighted these artistic innovations, using them to judge a painting’s relative worth. But can a painting’s level of creativity be quantified by Artificial Intelligence (AI)?

At Rutgers’ Art and Artificial Intelligence Laboratory, my colleagues and I proposed a novel algorithm that assessed the creativity of any given painting, while taking into account the painting’s context within the scope of art history.

In the end, we found that, when presented with a large collection of works, the algorithm can successfully highlight paintings that art historians consider masterpieces of the medium.

The results show that humans are no longer the only judges of creativity. Computers can perform the same task – and may even be more objective.

How is creativity defined?

Of course, the algorithm depended on addressing a central question: how do you define – and measure – creativity?

There is a long and ongoing debate about how to define creativity. We can describe a person (a poet or a CEO), a product (a sculpture or a novel) or an idea as being “creative.”

In our work, we focused on the creativity of products. In doing so, we used the most common definition for creativity, which emphasizes the originality of the product, along with its lasting influence.

These criteria resonate with Kant’s definition of artistic genius, which emphasizes two conditions: being original and “exemplary.”

They’re also consistent with contemporary definitions, such as Margaret A Boden’s widely accepted notions of Historical Creativity (H-Creativity) and Personal/Psychological Creativity (P-Creativity). The former assesses the novelty and utility of a work with respect to the scope of human history, while the latter evaluates the novelty of ideas with respect to their creator.

Building the algorithm

Using computer vision, we built a network of paintings from the 15th to 20th centuries. Using this web (or network) of paintings, we were able to make inferences about the originality and influence of each individual work.

Through a series of mathematical transformations, we showed that the problem of quantifying creativity could be reduced to a variant of network centrality problems – a class of algorithms that is widely used in the analysis of social interaction, epidemic analysis and web searches. For example, when you search the web using Google, Google uses an algorithm of this type to navigate the vast network of pages to identify the individual pages that are most relevant to your search.
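To give a feel for what a network centrality computation looks like, here is a minimal sketch of a PageRank-style power iteration on a tiny made-up link graph. It illustrates the general class of algorithm mentioned above, not the specific variant used in our work; the graph `toy_web`, the function name `pagerank` and the damping value of 0.85 are assumptions chosen for the example.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Score nodes by centrality; `links` maps each node to the nodes it points to."""
    # Collect every node that appears as a source or as a target.
    nodes = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}  # start from a uniform score

    for _ in range(iterations):
        # Every node receives a small baseline share of the total score.
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node in nodes:
            targets = links.get(node, [])
            if targets:
                # Split this node's score evenly among the nodes it links to.
                share = damping * rank[node] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A node with no outgoing links spreads its score over all nodes.
                share = damping * rank[node] / n
                for target in nodes:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: three pages point at "c", and "c" points at "a".
toy_web = {"a": ["c"], "b": ["c"], "c": ["a"], "d": ["c"]}
scores = pagerank(toy_web)
most_central = max(scores, key=scores.get)
print(most_central, round(scores[most_central], 3))
```

In this toy graph the page that most others point to ends up with the highest score. The same idea carries over when the nodes are paintings rather than web pages, although the edges and weights then have to encode visual similarity and dates.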

Any algorithm’s output depends on its input and parameter settings. In our case, the input was what the algorithm saw in the paintings: color, texture, use of perspective and subject matter. Our parameter setting was the definition of creativity: originality and lasting influence.

The algorithm made its conclusions without any encoded knowledge about art or art history, and made its assessments of paintings strictly by using visual analysis and considering their dates.

Innovation identified

When we ran an analysis of 1,700 paintings, there were several notable findings. For example, the algorithm scored the creativity of Edvard Munch’s The Scream (1893) much higher than its late 19th-century counterparts. This, of course, makes sense: it’s been deemed one of the most outstanding Expressionist paintings, and is one of the most-reproduced paintings of the 20th century.

The algorithm also gave Picasso’s The Young Ladies of Avignon (1907) the highest creativity score of all the paintings it analyzed between 1904 and 1911. This is in line with the thinking of art historians, who have indicated that the painting’s flat picture plane and its application of Primitivism made it a highly innovative work of art – a direct precursor to Picasso’s Cubist style.

The algorithm also pointed to several of Kazimir Malevich’s first Suprematist paintings, which appeared in 1915 (such as Red Square), as highly creative. Their style was an outlier in a period then dominated by Cubism. For the period between 1916 and 1945, the majority of the top-scoring paintings were by Piet Mondrian and Georgia O’Keeffe.

Of course, the algorithm didn’t always coincide with the general consensus among art historians.

For example, the algorithm gave a much higher score to Domenico Ghirlandaio’s Last Supper (1476) than to Leonardo da Vinci’s eponymous masterpiece, which appeared about 20 years later. The algorithm favored da Vinci’s St John the Baptist (1515) over his other religious paintings that it analyzed. Interestingly, da Vinci’s Mona Lisa didn’t receive a high score from the algorithm.

Withstanding the test of time

Given the aforementioned departures from the consensus of art historians (notably, the algorithm’s evaluation of da Vinci’s works), how do we know that the algorithm generally worked?

As a test, we conducted what we called “time machine experiments,” in which we changed the date of an artwork to some point in the past or in the future, and recomputed its creativity score.

We found that paintings from the Impressionist, Post-Impressionist, Expressionist and Cubist movements saw significant gains in their creativity scores when moved back to around AD 1600. In contrast, Neoclassical paintings did not gain much when moved back to 1600, which is understandable, because Neoclassicism is considered a revival of the Renaissance.

Meanwhile, paintings from Renaissance and Baroque styles experienced losses in their creativity scores when moved forward to AD 1900.
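The logic of a time machine experiment can be sketched in a few lines. The example below is a toy under stated assumptions, not our actual scoring method: each painting is reduced to a made-up two-number feature vector, and the hypothetical `creativity` function simply adds originality (distance from the nearest earlier work) to influence (average closeness of later works). The names `paintings`, `creativity` and `redate` are invented for illustration.

```python
import math


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def creativity(index, paintings):
    """Toy score: originality relative to earlier works plus influence on later ones."""
    year, features = paintings[index]
    earlier = [f for i, (y, f) in enumerate(paintings) if i != index and y < year]
    later = [f for i, (y, f) in enumerate(paintings) if i != index and y > year]
    # Originality: how far the work is from the closest thing that already existed
    # (set to 0.0 when nothing earlier is available to compare against).
    originality = min(distance(features, f) for f in earlier) if earlier else 0.0
    # Influence: how closely, on average, later works resemble this one.
    influence = (
        sum(1.0 / (1.0 + distance(features, f)) for f in later) / len(later)
        if later
        else 0.0
    )
    return originality + influence


def redate(index, new_year, paintings):
    """Recompute a painting's score as if it had been made in `new_year`."""
    moved = list(paintings)
    moved[index] = (new_year, paintings[index][1])
    return creativity(index, moved)


# Made-up data: four "old-style" works near (0, 0), five "modern-style" near (1, 1).
paintings = [
    (1500, (0.0, 0.0)), (1550, (0.1, 0.0)), (1650, (0.0, 0.1)), (1700, (0.1, 0.1)),
    (1870, (1.0, 0.9)), (1880, (0.9, 1.0)), (1890, (1.0, 1.0)),
    (1895, (0.9, 0.9)), (1905, (1.0, 0.8)),
]

modern, old = 6, 1  # indices of the 1890 and 1550 works
print("modern work, original date:", round(creativity(modern, paintings), 3))
print("modern work, moved to 1600:", round(redate(modern, 1600, paintings), 3))
print("old work, original date:   ", round(creativity(old, paintings), 3))
print("old work, moved to 1900:   ", round(redate(old, 1900, paintings), 3))
```

Even with this crude score, the modern-style work gains when moved back to 1600, because nothing like it existed yet, while the old-style work loses when moved forward to 1900, because it resembles what came before and has few later works left to influence.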

We don’t want our research to be perceived as a potential replacement for art historians, nor do we hold the opinion that computers are a better determinant of a work’s value than a set of human eyes.

Rather, we’re motivated by Artificial Intelligence (AI). The ultimate goal of research in AI is to make machines that have perceptual, cognitive and intellectual abilities similar to those of humans.

We believe that judging creativity is a challenging task that combines these three abilities, and our results are an important breakthrough: proof that a machine can perceive, visually analyze and consider paintings much like humans can.

This article originally appeared on The Conversation
