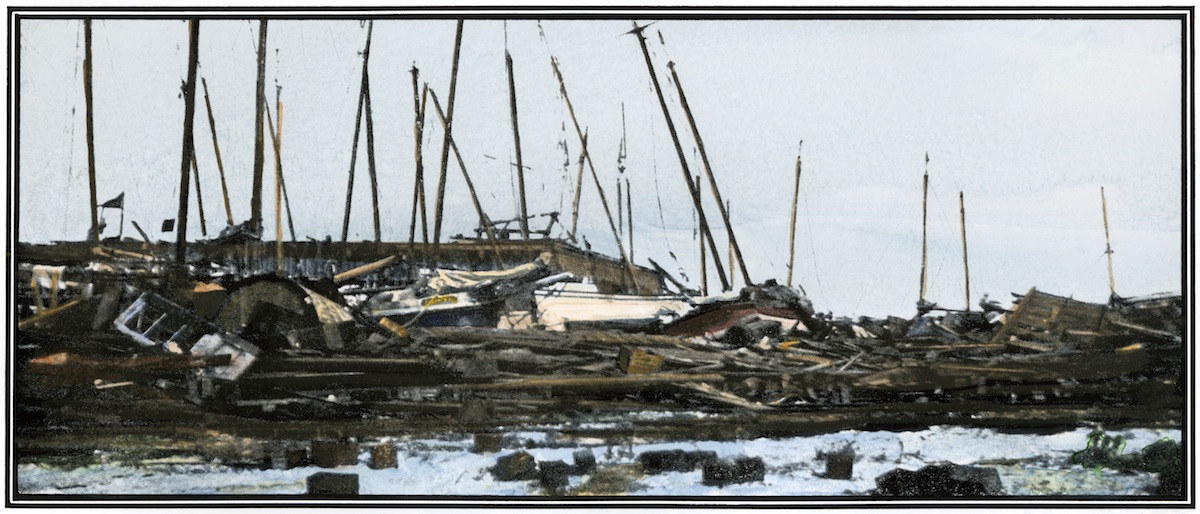

Meteorology hasn’t always been as exact a science as it is today, as Al Roker well knows. His upcoming book, The Storm of the Century, is a narrative account of the hurricane that devastated Galveston, Tex., in September of 1900, essentially destroying a city in a single day. One of the many figures who populate this story of unprecedented disaster is Isaac Cline, the chief meteorologist for Galveston. The turn of the century was an exciting time to be in meteorology: it seemed that, as Roker writes, “nature’s terrors would succumb to the superior intelligence of the human race.” Galveston proved that theory wrong, even though Cline was well versed in the most advanced weather science of his day, which Roker explains in the exclusive excerpt below:

While the science of forecasting was becoming, in Cline’s day, a modern and objective one, much of the technology on which it depended was ancient.

Of the big three, the anemometer used the oldest technology. Four fine metal hemispherical cups, their bowls set vertically against the wind, caught the air flow. Each cup was fixed to one of the four arms of a thin metal cross lying horizontally, and the center of the cross was fixed to a vertical pole, so when wind pushed the cups, the whole cross revolved around the pole.

In Cline’s day, the pole was connected to a sensor with a dial read-out display. The number of revolutions the cross made per minute (clocked by the sensor, transferred by the turnings of the wheels, and displayed on the dial) was proportional to the wind’s speed, and so indicated that speed in miles per hour.
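As a rough illustration of how a rotation count becomes a speed, the sketch below converts revolutions per minute into miles per hour. The arm radius and the calibration factor are assumptions made up for the example, not values from the text; a real instrument's factor would have to be found by calibration.

```python
import math

MPS_TO_MPH = 2.23694  # meters per second to miles per hour


def wind_speed_mph(revs_per_minute, arm_radius_m=0.1, calibration_factor=3.0):
    """Estimate wind speed from a cup anemometer's rotation rate.

    arm_radius_m and calibration_factor are hypothetical illustration
    values, not figures taken from any particular instrument.
    """
    # Distance one cup travels in a single revolution around the pole.
    circumference_m = 2 * math.pi * arm_radius_m
    # Speed of the cup itself, in meters per second.
    cup_speed_mps = circumference_m * revs_per_minute / 60.0
    # The cups move more slowly than the wind that drives them, so the
    # cup speed is scaled up by the assumed calibration factor.
    return calibration_factor * cup_speed_mps * MPS_TO_MPH


print(round(wind_speed_mph(60), 1))
```

Because the relationship is a simple proportion, doubling the revolutions per minute doubles the estimated wind speed.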

Rotating cups, wheels, and a dial: the anemometer was fully mechanical, with no reliance on electricity. And while other competing anemometer designs existed, involving liquids and tubes, the four-cup design became standard in American meteorology in the nineteenth century, remaining remarkably stable.

In 1846, an Irish meteorologist named John Thomas Romney Robinson upgraded the technology. But before that, the biggest development in clocking wind speeds had been made in 1485—by Leonardo da Vinci. The anemometer was already a durable meteorology classic when Isaac Cline began studying.

The second member of the forecasting big three, which Isaac Cline studied with such interest under the Signal Corps, was the hygrometer, which measures relative humidity. Like the anemometer, it’s been around ever since a not-very-accurate means of measuring relative humidity was built by—once again—Leonardo da Vinci.

By Cline’s day, a basic hygrometer measured the degree of moisture in the air by using two glass bulbs, each at one end of a glass tube. The tube passed through the top of a wooden post and bent downward on both sides of the post, farther down one side than the other. Thus one of the bulbs was lower than the other. In that lower bulb sat a thermometer, dipped in ether, a gas that had condensed in the bulb into a liquid.

The other, higher bulb contained ether too, but here the gas remained in its vapor form. That bulb was covered in a light fabric.

When condensed ether was poured over the fabric covering the higher bulb, the bulb cooled, and the vaporized ether within condensed, lowering vapor pressure in the bulb. That lowering of pressure caused the liquid ether in the lower bulb to begin evaporating into the space provided. So the lower bulb’s temperature fell as well.

Moisture, known as “dew,” therefore formed on the outside of the lower bulb. As it did, the temperature indicated by the thermometer in that bulb was read and noted. That reading is called the dew-point temperature. Comparing the dew-point temperature to the air temperature outside the bulbs (as measured by a common weather thermometer, conveniently mounted on the hygrometer’s wooden post) gives the relative humidity. It isn’t a simple ratio of the two temperatures: relative humidity is the ratio of the moisture actually in the air, which the dew point fixes, to the most the air could hold at its current temperature. The closer dew-point temperature gets to air temperature, the higher the relative humidity.

As a student of humidity, Isaac Cline read tables (sometimes built into the hygrometer for quick reference) showing the exact humidity ratios. But experienced forecasters know the rough ratios by heart.

We concern ourselves with humidity mainly on hot days. When there’s lots of moisture in the air, it can’t accept much more moisture, and that means warmth has a harder time leaving our bodies via perspiration. With a temperature of 95 degrees Fahrenheit and a dew point of 90 degrees Fahrenheit, you’ll get a relative humidity of nearly 86 percent—quite uncomfortable. When air temperature and dew point are identical, humidity is said to be 100 percent. We really don’t like that.
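The figure quoted above can be checked with a short calculation. This sketch uses the Magnus approximation for saturation vapor pressure, a standard empirical formula that is an assumption of this example rather than anything drawn from Cline's tables; the function names and the particular coefficients are likewise illustrative.

```python
import math


def fahrenheit_to_celsius(temp_f):
    return (temp_f - 32.0) * 5.0 / 9.0


def saturation_vapor_pressure_hpa(temp_c):
    """Magnus approximation; the coefficients are one commonly used set
    and are an assumption of this sketch."""
    return 6.1094 * math.exp(17.625 * temp_c / (temp_c + 243.04))


def relative_humidity_percent(air_temp_f, dew_point_f):
    # Moisture actually in the air: saturation pressure at the dew point.
    actual = saturation_vapor_pressure_hpa(fahrenheit_to_celsius(dew_point_f))
    # Most the air could hold: saturation pressure at the air temperature.
    maximum = saturation_vapor_pressure_hpa(fahrenheit_to_celsius(air_temp_f))
    return 100.0 * actual / maximum


print(round(relative_humidity_percent(95, 90), 1))
print(round(relative_humidity_percent(95, 95), 1))
```

Under these assumptions, 95 and 90 degrees Fahrenheit land close to the 86 percent quoted above, and identical temperatures give 100 percent.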

There were other kinds of hygrometers as well, developed during Isaac Cline’s early career as a meteorologist. One, called a psychrometer, compared a wet thermometer bulb, cooled by evaporation, with a dry thermometer bulb.

And in 1892, a German scientist—he had the unfortunate name of Richard Assmann—built what was known as an aspiration psychrometer for even more minute accuracy. It used two matched thermometers, protected from radiation interference by a thermal shield, and a drying fan driven by a motor. By 1900, when Isaac Cline was working in the weather station in the Levy Building in Galveston, hygrometer science was at its apex.

But perhaps the most important element in weather forecasting is the barometer.

The role of barometric pressure—air pressure—is counterintuitive. We can directly feel the phenomena measured by anemometers and hygrometers—wind speeds and relative humidity: wind knocks us around and humidity makes us sticky. But the sensations caused by air pressure work differently from the way we might expect.

That difference has to do with the very nature of air. Usually we don’t think much about air. While we know it gives us oxygen, breathing is largely unconscious. We notice air when it’s very still or very windy. And we notice air when it stinks.

Otherwise we generally ignore the air. We imagine it as nothing but a weightless emptiness.

But air does have weight. That weight exerts pressure on the Earth’s surface, as well as on everything on the Earth: human skin and inanimate objects. We refer to the pressure of that weight as “atmospheric pressure,” and we measure it with a barometer.

When more and bigger molecules gather, air’s weight increases, and the atmosphere bears down snugly on all surfaces. We call that effect, not surprisingly, “high pressure.” The strange thing, though, is that high pressure—all that heavy weight of air—makes us feel freer, more energetic. It makes the air feel not heavier but lighter.

That’s because where pressure is high, relative humidity is suppressed. Warmth can’t lift as easily from the surface of any object—including from the Earth’s surface. Warm air currents are held at bay, moisture is blocked, winds remain stable. Rain, lightning, and thunder are discouraged. High pressure usually means nice weather.

By the same token, when we complain that the air feels heavy—on those days of sluggishness, when we feel as if we’re struggling through a swamp—heaviness is not really what we’re feeling. Just the opposite. On those days, the air has less weight, lower pressure.

The result, usually, is just some unpleasantness. That’s because lighter and fewer molecules in the atmosphere cause atmospheric “lifting.” Heat and moisture lift upward from all surfaces. The humidity gets bad.

But when barometric pressure falls low enough, winds may be expected to rise, clouds form, and rain, thunder, and lightning follow. With very low air pressure, things aren’t just unpleasant. They’re dangerous, sometimes deadly.

Barometers for measuring pressure had been part of experimentation in natural science since the 1640s, well before modern weather forecasting. For a long time, it just seemed interesting, and possibly useful, to know that atmospheric pressure exists at all. Or to see that it can do work—like pushing mercury upward in a column.
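The mercury column gives a direct way to put a number on air's weight: the pressure at the base of the column equals the mercury's density times gravity times the column's height. A minimal sketch, assuming standard reference values for mercury density and gravity (neither is given in the text) and a hypothetical function name:

```python
MERCURY_DENSITY_KG_M3 = 13595.1   # mercury at 0 degrees Celsius (assumed)
STANDARD_GRAVITY_M_S2 = 9.80665   # standard gravity (assumed)
METERS_PER_INCH = 0.0254


def inches_of_mercury_to_hpa(height_in):
    """Pressure, in hectopascals, that supports a mercury column of the
    given height in inches."""
    height_m = height_in * METERS_PER_INCH
    # Hydrostatic pressure: density * gravity * height, in pascals.
    pascals = MERCURY_DENSITY_KG_M3 * STANDARD_GRAVITY_M_S2 * height_m
    return pascals / 100.0  # 1 hectopascal = 100 pascals


print(round(inches_of_mercury_to_hpa(29.92)))
```

A column of about 29.92 inches corresponds to roughly standard sea-level pressure; a shorter column means lower pressure.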

But soon people began to apply the science. They used pressure readings not only to note the existing weather but to predict future changes in weather. One scientist graduated the scale so the pressure could be measured in exact increments. Another realized that instead of being read against a straight column of mercury, the scale could be turned into a circle to form a dial; that enabled far subtler readings.

Yet another change came with the portable barometer. Using no liquid, and therefore easier to transport on ships, the portable barometer took the form of a small vacuum-sealed metal box, made of beryllium and copper. Atmospheric pressure made the box expand and contract, thus moving a needle on its face. A barometer like that could be carried in a pocket by a ship’s captain. He could watch the pressure fall and know that he was sailing into a storm.

Just before Isaac Cline began studying, the widely traveled Vice-Admiral Robert FitzRoy, of the British Royal Navy, formalized a new system for detailed weather prediction based on barometric readings. FitzRoy had served as captain on HMS Beagle, Charles Darwin’s exploration ship, and also as governor of New Zealand. His idea was to go beyond just noting existing and future weather conditions. He found ways of communicating conditions from ship to ship. That aided safety at sea.

By the mid-nineteenth century, a large barometer of FitzRoy’s design was set up in a big stone housing at every British port. Captains and crews could see what they were about to get into. In 1859, a storm at sea caused so many deaths that FitzRoy began working up a system of charts that would allow for what he called, for the first time anywhere, “forecasting the weather.”

The Storm of the Century by Al Roker will be available on Aug. 11, 2015.
