When Alan Turing submitted his paper “On Computable Numbers” to the Proceedings of the London Mathematical Society on this day, May 28, in 1936, he could not have guessed that it would lead not only to the computer as we know it today but also to nearly all of the gadgets and devices that are so crucial a part of our lives.

The paper described a so-called Turing machine and argued that it could carry out any computation that can be expressed as a step-by-step procedure, a result commonly seen as one of the original stepping stones toward modern computers. Though Turing, who died in 1954, never got to see a smartphone, his paper remains the touchstone behind the technology.

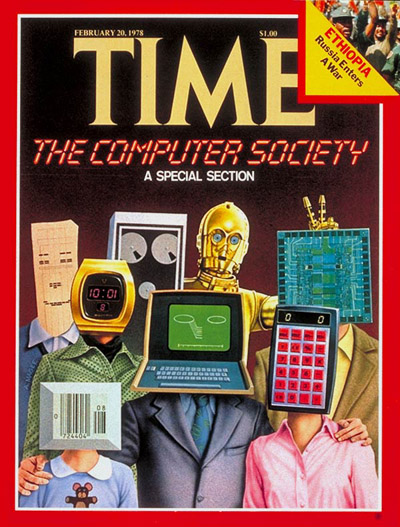

For a 1978 cover story about “The Computer Society,” TIME broke down how computers work in easy(-ish)-to-understand terms, explaining along the way why Turing mattered so much:

In the decimal system, each digit of a number read from right to left is understood to be multiplied by a progressively higher power of 10. Thus the number 4,932 consists of 2 multiplied by 1, plus 3 multiplied by 10, plus 9 multiplied by 10 × 10, plus 4 multiplied by 10 × 10 × 10. In the binary system, each digit of a number, again read from right to left, is multiplied by a progressively higher power of 2. Thus the binary number 11010 equals 0 times 1, plus 1 times 2, plus 0 times 2 × 2, plus 1 times 2 × 2 × 2, plus 1 times 2 × 2 × 2 × 2–for a total of 26 (see chart).
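The right-to-left rule in the passage above works the same way for any base, which a few lines of Python can illustrate. This is only a sketch: `positional_value` is a hypothetical helper written for this example, and Python's built-in `int(text, base)` is shown alongside it as a cross-check.

```python
def positional_value(digits: str, base: int) -> int:
    """Read digits from right to left, multiplying each one by a
    progressively higher power of the base, and add up the results."""
    total = 0
    for position, digit in enumerate(reversed(digits)):
        total += int(digit) * base ** position
    return total

if __name__ == "__main__":
    print(positional_value("4932", 10))  # decimal example from the text
    print(positional_value("11010", 2))  # binary example from the text
    print(int("11010", 2))               # Python's built-in conversion agrees
```

For 11010 the loop adds 0 × 1, 1 × 2, 0 × 4, 1 × 8 and 1 × 16, reproducing TIME's total of 26.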

Working with long strings of 1s and 0s would be cumbersome for humans–but it is a snap for a digital computer. Composed mostly of parts that are essentially on-off switches, the machines are perfectly suited for binary computation. When a switch is open, it corresponds to the binary digit 0; when it is closed, it stands for the digit 1. Indeed, the first modern digital computer completed by Bell Labs scientists in 1939 employed electromechanical switches called relays, which opened and closed like an old-fashioned Morse telegraph key. Vacuum tubes and transistors can also be used as switching devices and can be turned off and on at a much faster pace.

But how does the computer make sense out of the binary numbers represented by its open and closed switches? At the heart of the answer is the work of two other gifted Englishmen. One of them was the 19th-century mathematician George Boole, who devised a system of algebra, or mathematical logic, that can reliably determine if a statement is true or false. The other was Alan Turing, who pointed out in the 1930s that, with Boolean algebra, only three logical functions are needed to process these “trues” and “falses”–or, in computer terms, 1s and 0s. The functions are called AND, OR and NOT, and their operation can readily be duplicated by simple electronic circuitry containing only a few transistors, resistors and capacitors. In computer parlance, they are called logic gates (because they pass on information only according to the rules built into them). Incredible as it may seem, such gates can, in the proper combinations, perform all the computer’s high-speed prestidigitations.
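To make the idea of gates concrete, here is a rough Python model in which each gate is a function on bits (0 or 1) standing in for a small switching circuit. The function names are our own, not from any library. The last function shows how a new behavior, "exactly one input is 1" (usually called XOR), can be assembled purely from AND, OR and NOT.

```python
# Each "gate" takes bits (0 or 1) and returns a bit, mimicking a circuit
# whose output switch is on (1) or off (0) depending on its inputs.

def and_gate(a: int, b: int) -> int:
    return a & b  # 1 only when both inputs are 1

def or_gate(a: int, b: int) -> int:
    return a | b  # 1 when at least one input is 1

def not_gate(a: int) -> int:
    return 1 - a  # flips 0 to 1 and 1 to 0

def xor_gate(a: int, b: int) -> int:
    # Built only from the three basic gates: (a OR b) AND NOT (a AND b)
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))

if __name__ == "__main__":
    print("a b | AND OR XOR")
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "|", and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```

Running the script prints the truth table for all four input combinations, the same table a circuit designer would check against the wiring.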

The simplest and most common combination of the gates is the half-adder, which is designed to add two 1s, a 1 and a 0, or two 0s. If other half-adders are linked to the circuit, producing a series of what computer designers call full adders, the additions can be carried over to other columns for tallying up ever higher numbers. Indeed, by using only addition, the computer can perform the three other arithmetic functions.
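The chain of adders described above can be sketched in Python as well. This is a simplified model, assuming 8-bit unsigned numbers and using hypothetical function names: a full adder is built from two half-adders, full adders are rippled across the columns to add whole numbers, and subtraction, multiplication and division are then expressed in terms of that adder. Real processors use faster tricks (shift-and-add multiplication, for example), and the loops and comparisons here are Python's own rather than circuitry.

```python
def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits; return (sum_bit, carry_bit)."""
    sum_bit = (a | b) & (1 - (a & b))  # XOR built from OR, AND, NOT
    carry = a & b                      # carry only when both bits are 1
    return sum_bit, carry

def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add two bits plus the carry from the previous column."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, c1 | c2

def add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two unsigned width-bit numbers.
    The carry out of the top column is dropped, so results wrap modulo 2**width."""
    result, carry = 0, 0
    for i in range(width):
        s, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= s << i
    return result

def subtract(x: int, y: int, width: int = 8) -> int:
    """x - y using only addition: add the two's complement of y
    (invert every bit of y, then add 1)."""
    inverted = ~y & ((1 << width) - 1)
    return add(x, add(inverted, 1, width), width)

def multiply(x: int, y: int, width: int = 8) -> int:
    """x * y as repeated addition of x."""
    total = 0
    for _ in range(y):
        total = add(total, x, width)
    return total

def divide(x: int, y: int, width: int = 8) -> tuple[int, int]:
    """Return (quotient, remainder) by repeated subtraction."""
    if y == 0:
        raise ZeroDivisionError("division by zero")
    quotient = 0
    while x >= y:
        x = subtract(x, y, width)
        quotient = add(quotient, 1, width)
    return quotient, x

if __name__ == "__main__":
    print(add(26, 3), subtract(29, 3), multiply(6, 7), divide(43, 5))
```

With these 8-bit assumptions, `subtract(3, 5)` returns 254, which is the two's-complement bit pattern for -2; interpreting those bits as a negative number is a convention layered on top of the same adder.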

Read the full 1978 story, “The Numbers Game,” in the TIME Vault.

Write to Lily Rothman at lily.rothman@time.com