The long-held notion that Facebook’s algorithm leads to the creation of “echo chambers” among users isn’t exactly true, according to a report published Thursday in the journal Science.

After studying the accounts of 10 million users, data scientists at Facebook found that liberals and conservatives are regularly exposed to at least some “crosscutting” political news, meaning stories that don’t conform to their pre-existing biases.

The algorithm for Facebook’s News Feed leads conservatives to see 5% less liberal content than their friends share and liberals to see 8% less conservative content. But the biggest impact on what users see comes from what they clicked on in the past, because Facebook’s algorithm serves users stories based in part on that click history. Liberals are about 6% less likely to click on crosscutting content, according to the research, and conservatives are about 17% less likely.

Ultimately, the study suggests it’s not Facebook’s algorithm that’s making your profile politically one-sided; it’s your own decisions to click on or ignore certain stories. However, some observers argue the Facebook study is flawed because of sampling problems and interpretation issues.

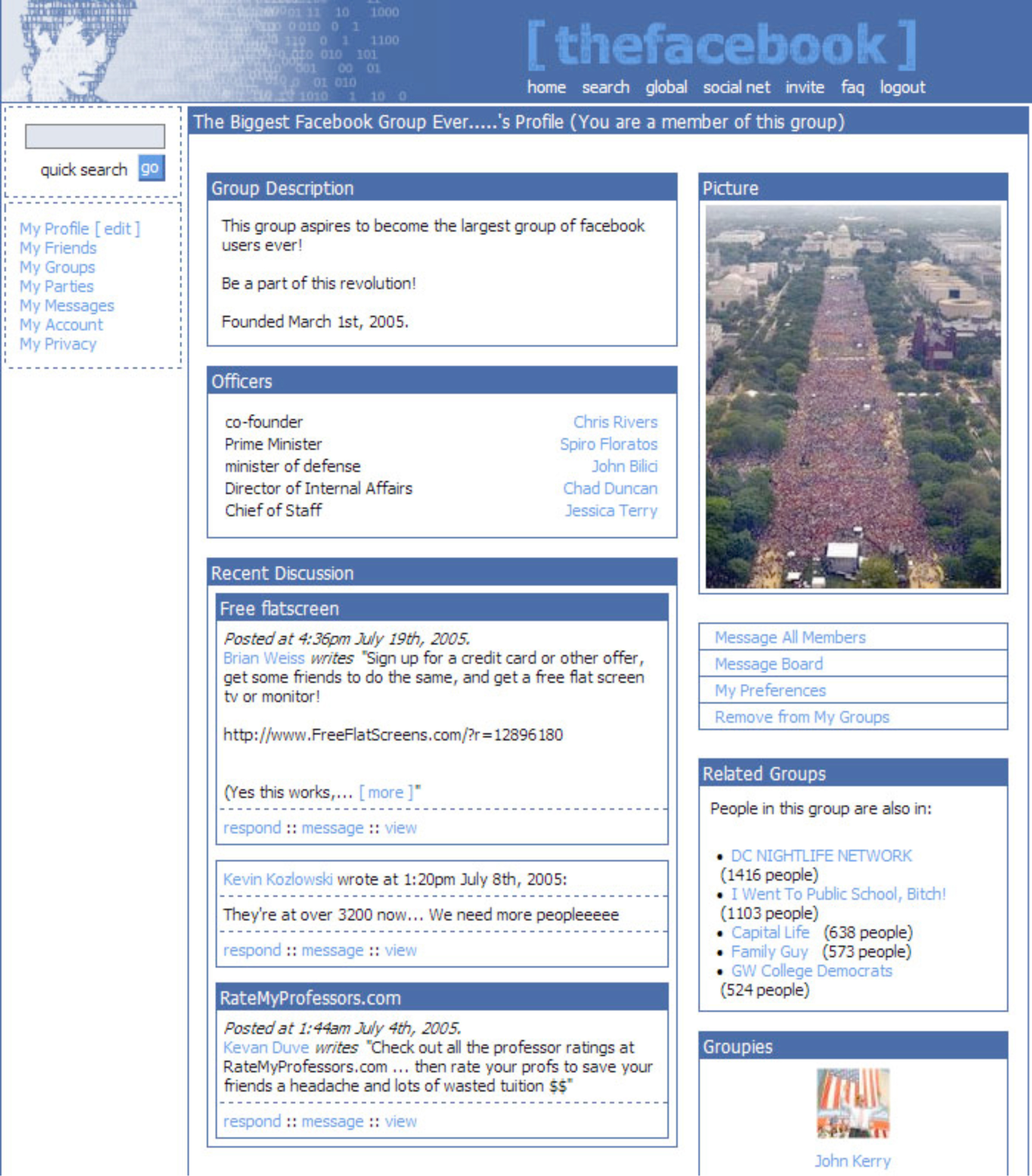

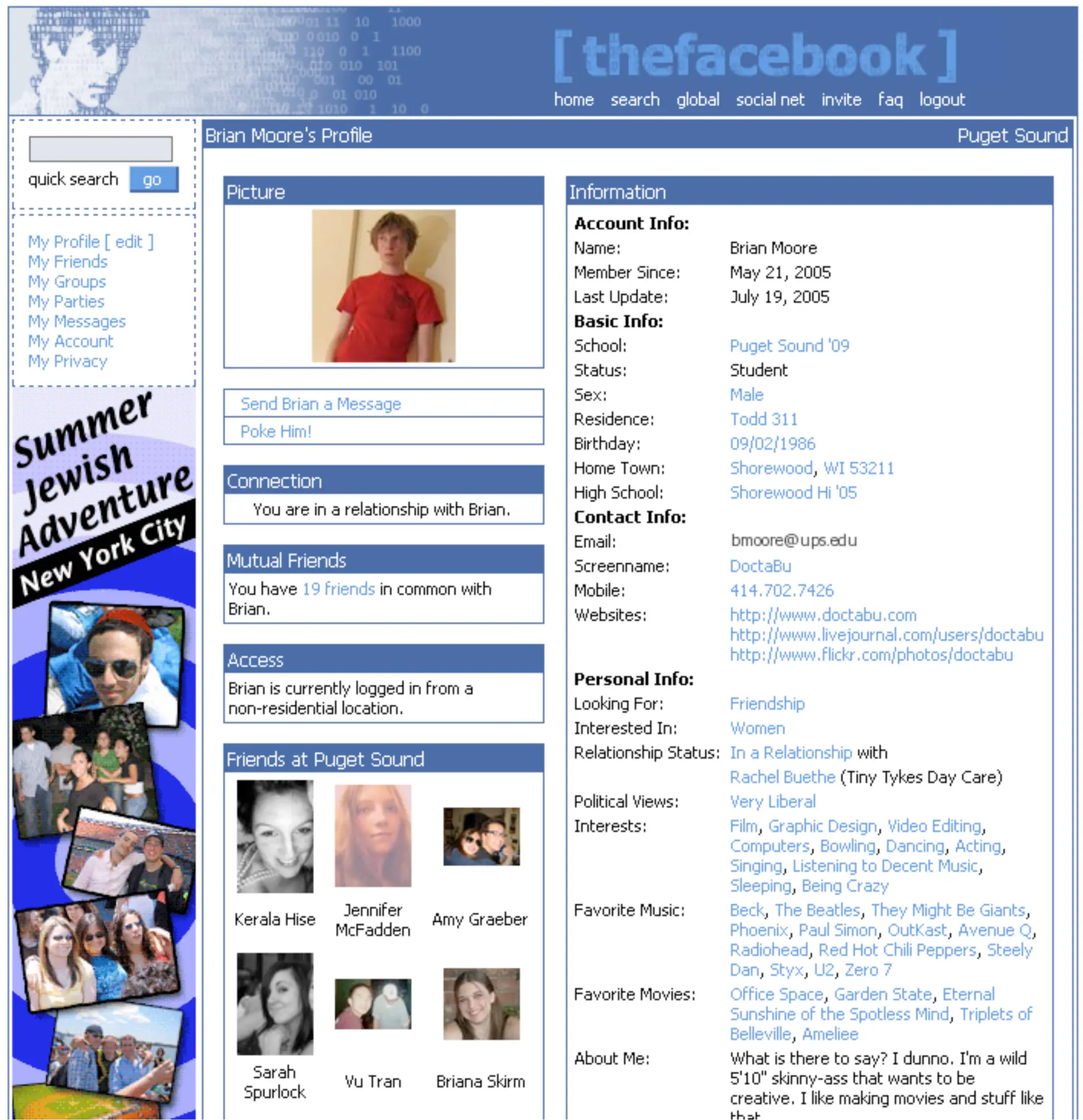

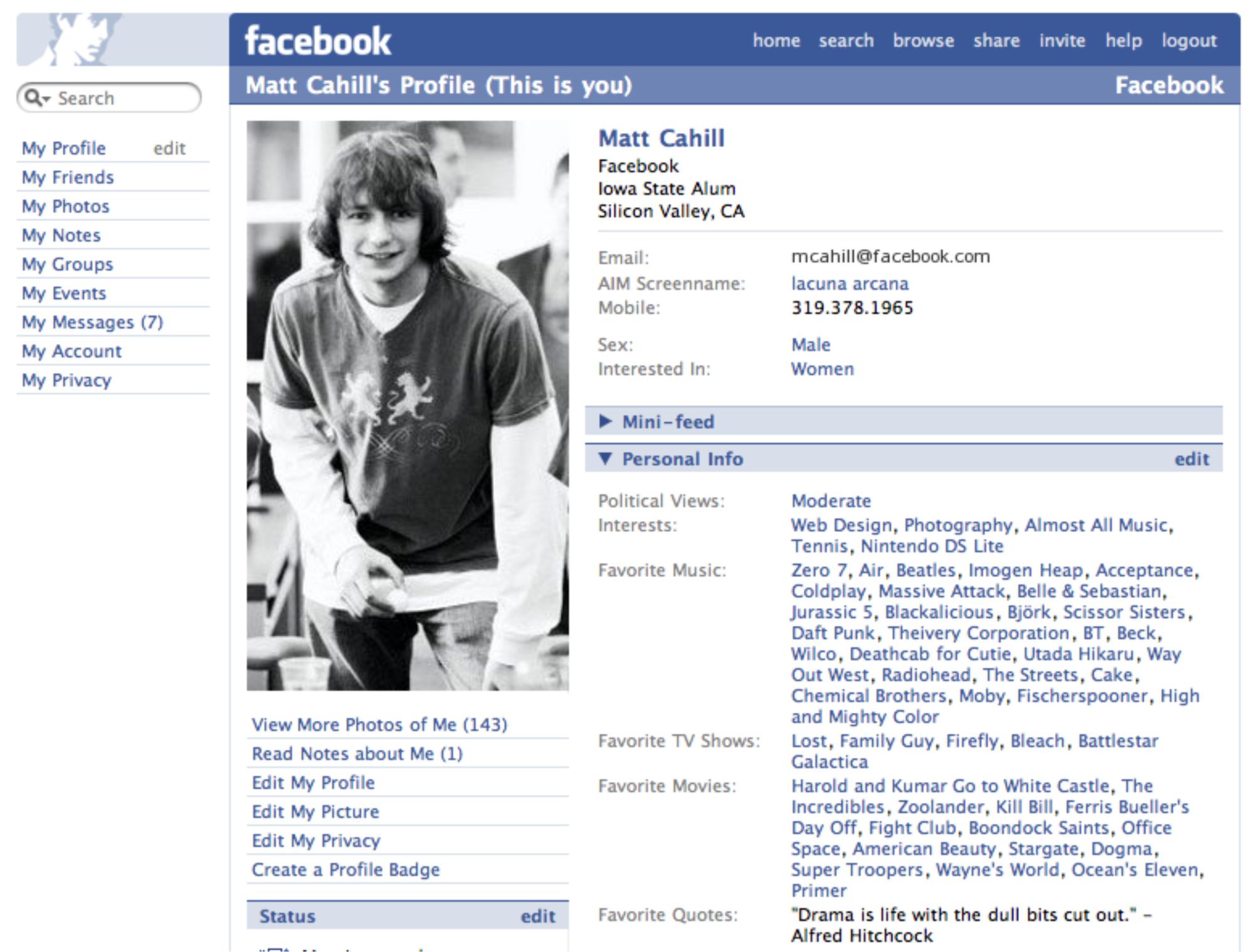

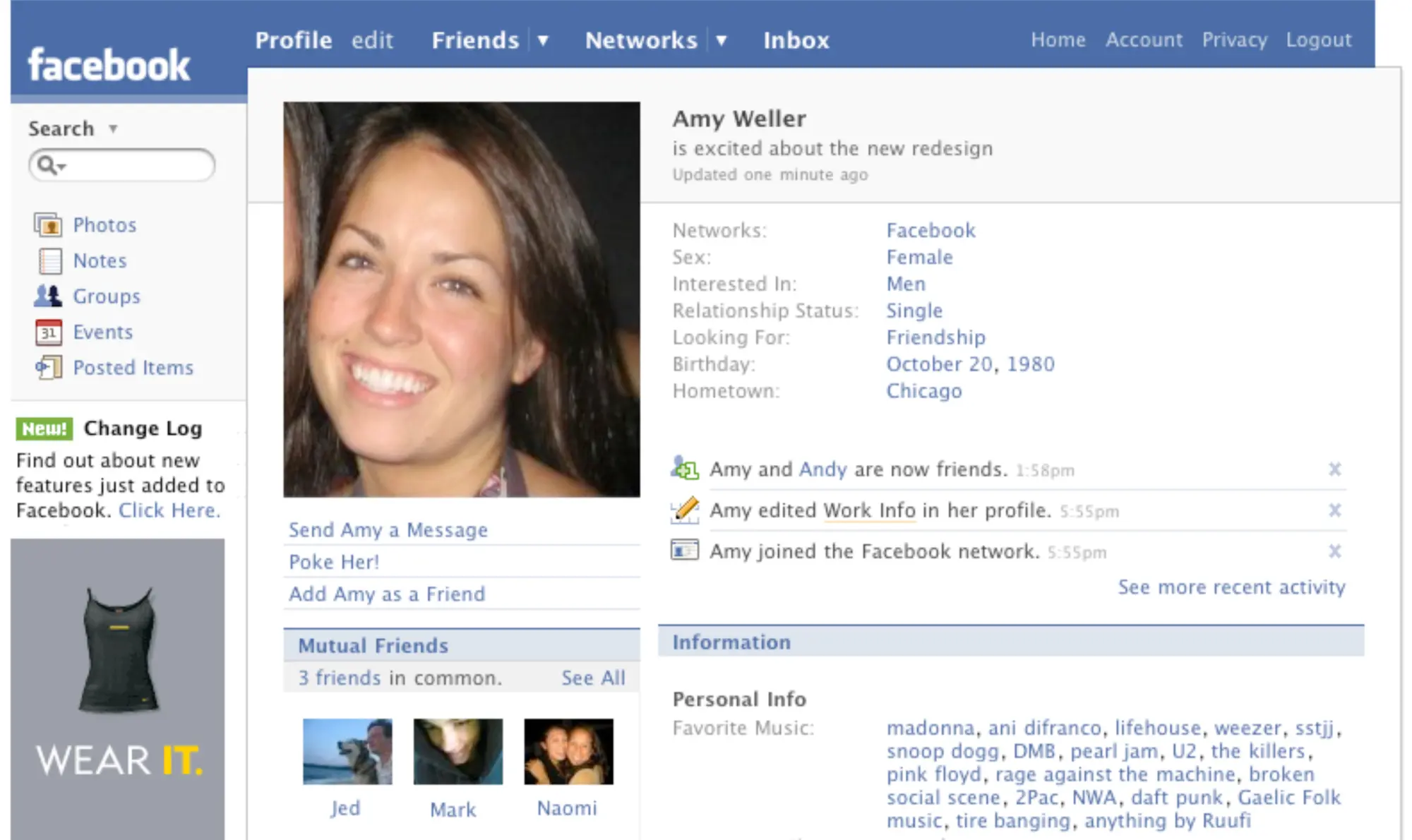

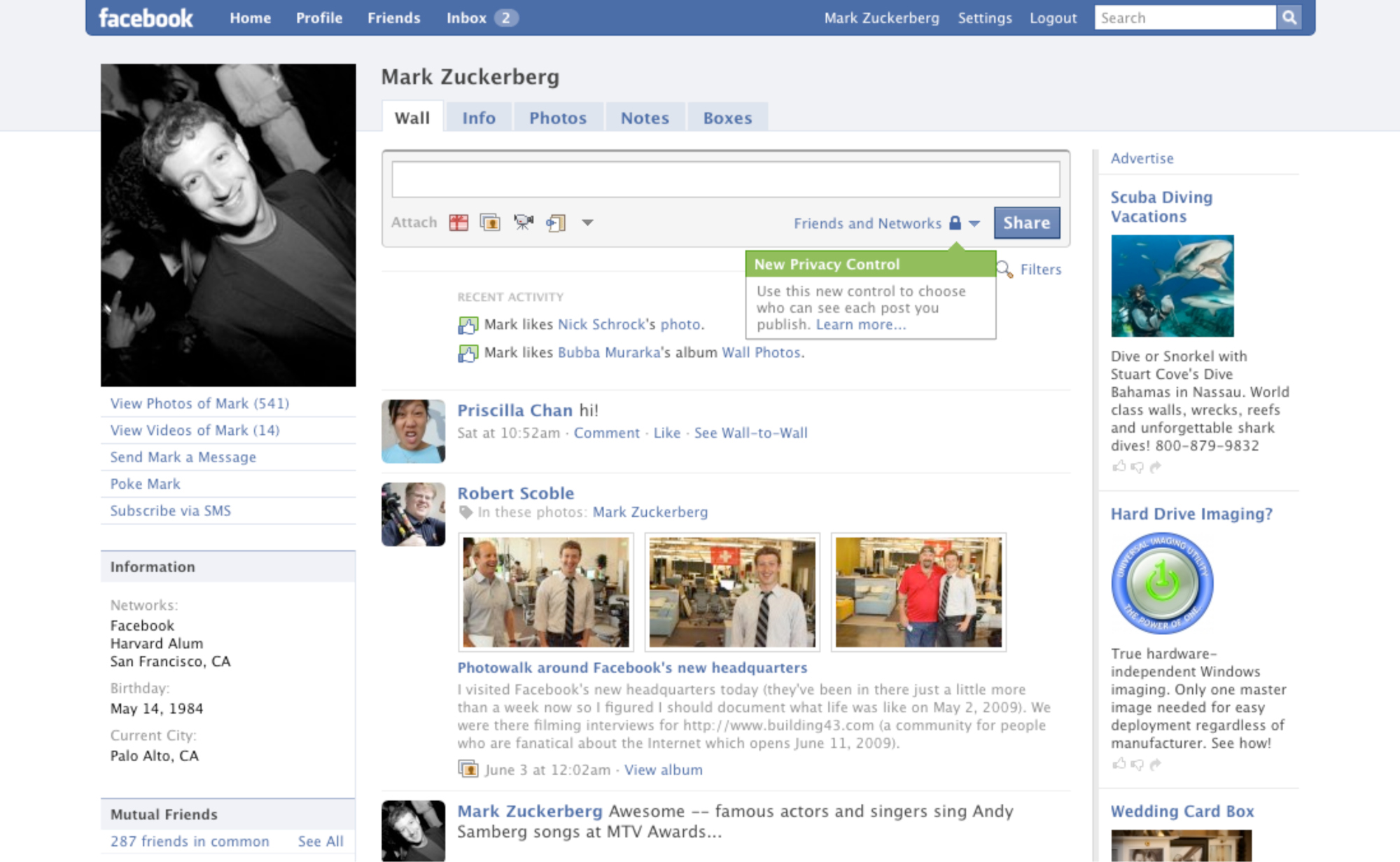

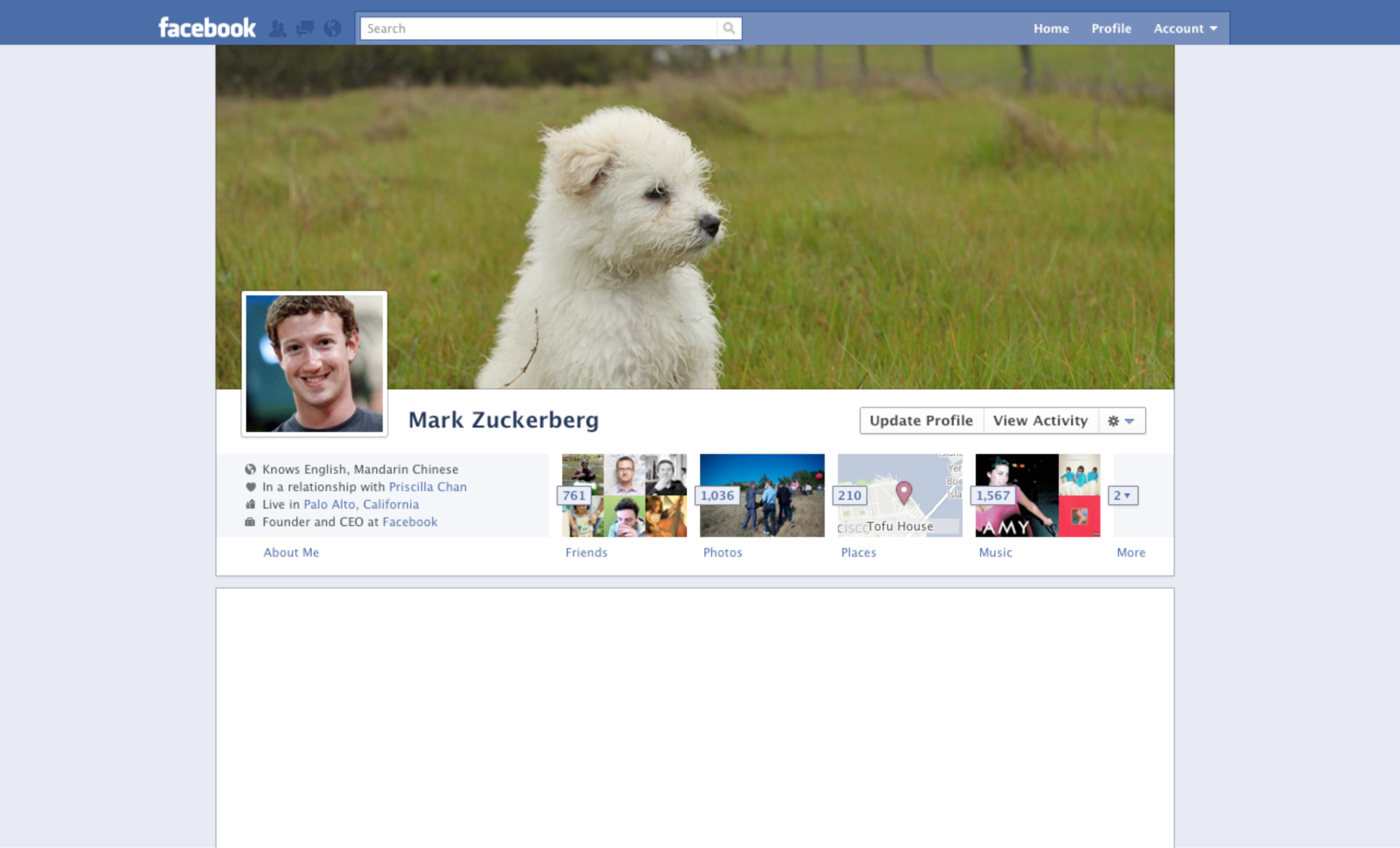

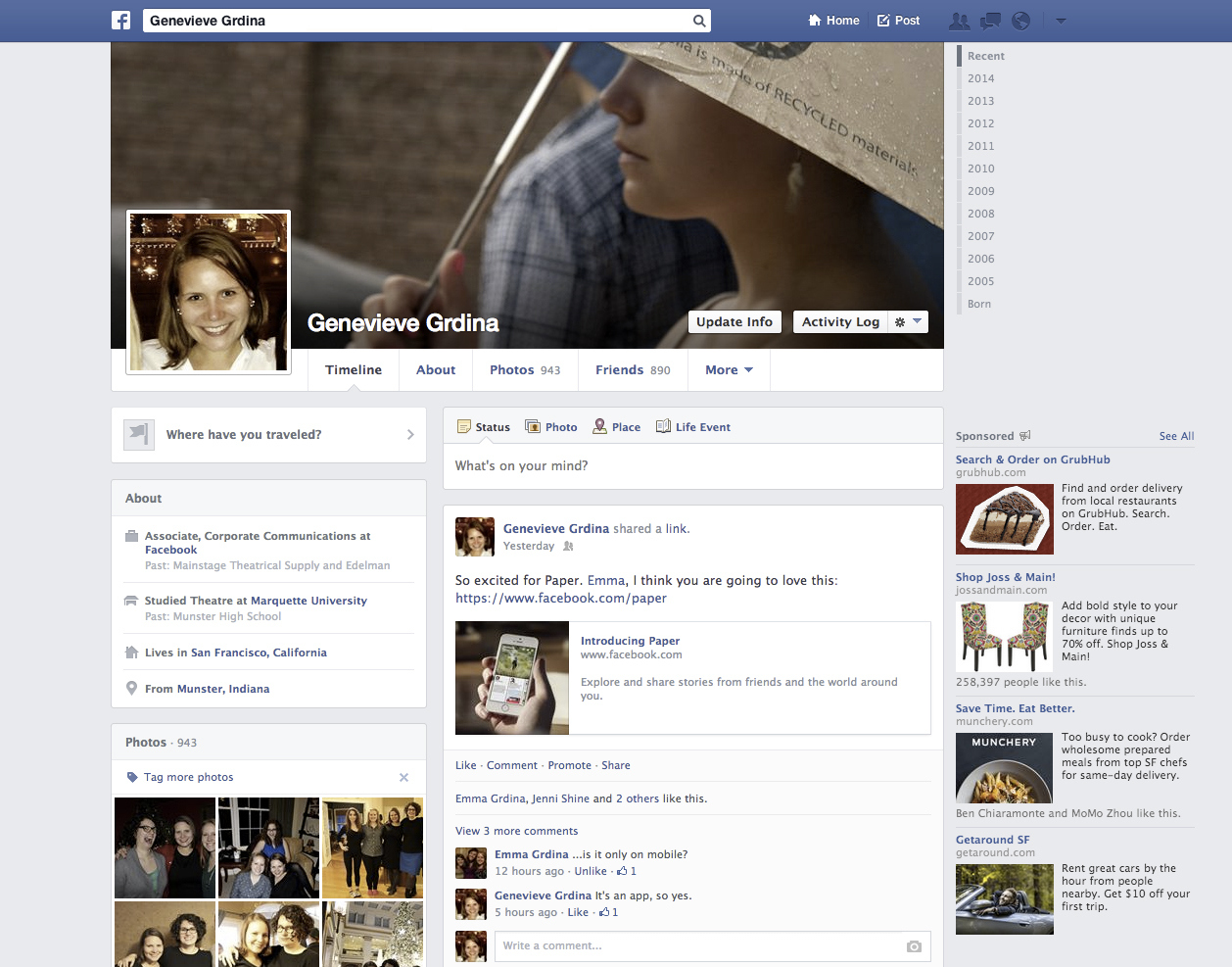

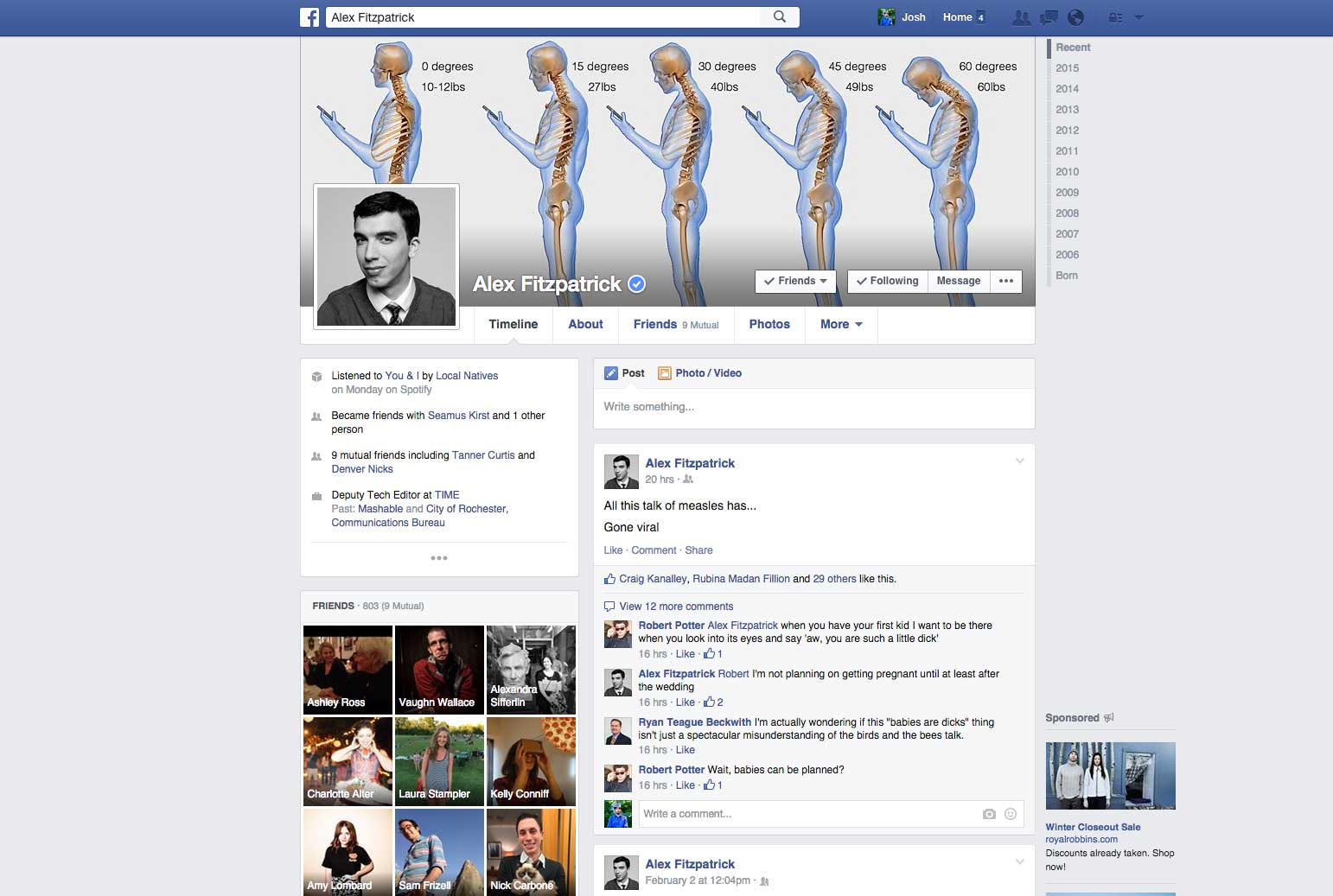

This Is What Your Facebook Profile Looked Like Over the Last 11 Years
