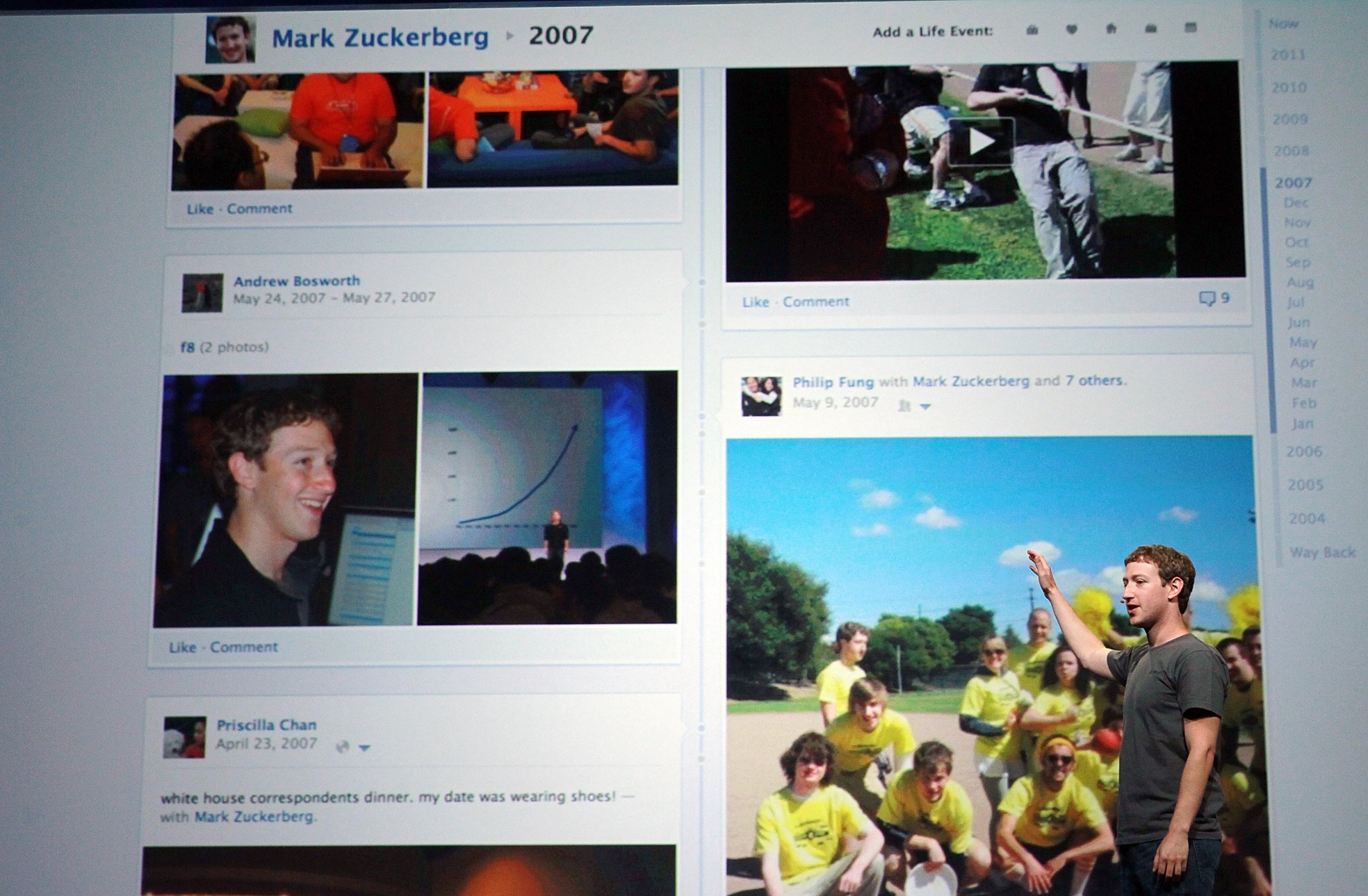

People are up in arms about the recent revelation that Facebook manipulated its users during a psychological study. The study, published in the Proceedings of the National Academy of Sciences and conducted by researchers at Cornell University and Facebook (full disclosure: I know the authors and the article’s editor), showed that people who saw more happy messages in their News Feed were more likely to post happy messages too. The researchers interpreted this as support for the theory of “emotional contagion”: that emotions can spread through online posts and interactions.

Unfortunately for the researchers, the explosive response to their study over social media confirms their findings. Negative emotion can easily go viral.

Why is this study so controversial? Psychologists have known for years that individuals’ emotions can be influenced by their social surroundings. Sociologists have also shown that people act like their friends or other people around them in order to fit in. Just as no one wants to be a Debbie Downer at a party, posting sad stories online when your friends are posting happy ones seems to be a no-no. If anything, the findings add to a long list of Internet studies that argue against “digital dualism,” the notion that we behave differently online than we do offline, by showing that the online world plays an active role in shaping our social lives and experiences.

If the study’s findings are not controversial, its methods certainly are. Yet whether we like it or not, tech companies experiment with their users in precisely this way all the time. User-interface designers and researchers at places like Google, Facebook and Yahoo regularly tweak the live site’s interface for a subset of visitors to see whether users behave differently in response. While this technique shines new light on user behavior, the overall goal is to bring the company more revenue through more users, clicks or glances at ads. Stories of designers who made their companies millions more dollars in advertising revenue just by altering a single pixel on the homepage are legendary in Silicon Valley.

That’s why any tech company worth its salt has a research department staffed with Ph.D. scientists to analyze its data. That’s also why Facebook is actively hiring and reaching out to social scientists to help it better understand its data and reach new user populations.

Researchers, for their part, are increasingly joining forces with tech companies. There are many reasons to do so. From location check-ins to threaded conversations, from tweets in times of crisis to shared family photos, the reams of data present a fascinating slice of social life in the 21st century. These platforms also provide an unprecedented venue for a natural experiment at scale. With only a few tweaks, and without users’ knowing, researchers can witness which simple changes have tremendous effects.

As a sociologist of technology, I’ve witnessed these changes firsthand. I have grants from Microsoft and Yahoo; Intel funds my colleagues’ students; our graduates staff the labs at Facebook and Google. These collaborations aim to keep Internet research both current and practical.

But there are other reasons social scientists are turning to tech companies. Public money for social-science research is being slashed at the federal level. The congressional committee that oversees the National Science Foundation wants to cut $50 million to $100 million of social, behavioral and economics funding for the next two years (again, full disclosure: I have received NSF funding). A bill called FIRST: Frontiers in Innovation, Research, Science and Technology aims to improve American competitiveness by funding research that supports a U.S. industry advantage. Yet the committee has called specifically for the NSF to stop giving grants to study social media, online behavior or other Internet topics.

Ironically, at precisely the time when American technology companies are looking to social science to help understand their users and improve their business, this research is being denigrated in the House. And at exactly the time when independent research on Internet practices is needed, scholars must turn to companies for both data and funding.

This is a shortsighted move. On the one hand, it means we will train fewer social scientists to rigorously and responsibly answer the new questions posed by big data. But it also pushes basic research about online social life exclusively into the private sector. This leaves the same companies that make the technologies we use to talk, shop and socialize responsible for managing the ethics of online experimentation. No wonder that esoteric questions like informed consent are suddenly headline news.

The recently released study, then, does present reasons to be alarmed, though not for the reasons most of us think. Facebook isn’t manipulating its users any more than usual. But the proposed changes in social-science funding will have a more lasting effect on our lives both online and offline. That should inspire some emotions that are worth sharing.

Janet Vertesi is assistant professor of sociology at Princeton University, where she is a faculty fellow at the Center for Information Technology Policy.
