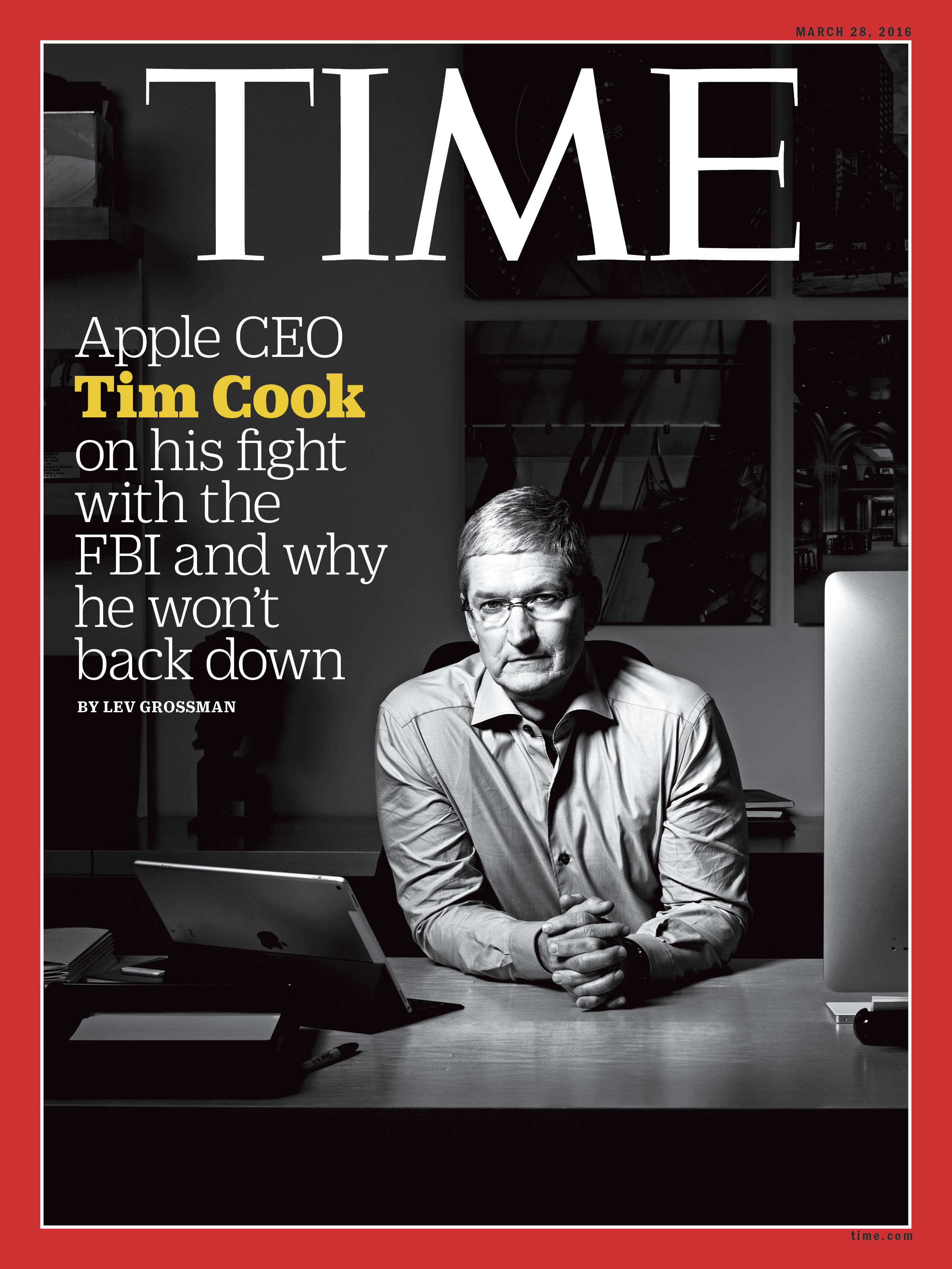

Apple CEO Tim Cook sat down with TIME’s Nancy Gibbs and Lev Grossman on March 10 to discuss his company’s rapidly escalating fight with the FBI over encryption. That fight is the subject of the magazine’s March 28 cover story. What follows is a full transcript of their conversation:

To start with why don’t we roll back to last fall. There was a case that came up in Brooklyn, a drug dealer, the judge reached out to Apple and asked how you guys felt about getting information off the phone. Apple had gone along with similar requests prior to that. And in this case, Apple pushed back. Why the change?

COOK: The difference is that that judge asked us if we felt this was a proper use of the All Writs Act. Before, judges would order us to do X or Y—we’ve never been asked to do what we’ve been asked to do now, so this case is materially different—but back then the question the judge asked us that we’d never been asked was if we felt the government was properly using this Act. And we went back and said no, we don’t. We don’t think the government has the authority to do this.

And our thinking has been that if you look back at the history of this discussion, because this discussion’s been going on for a while—CALEA, not to get too technical, but CALEA is essentially the regulatory arm for the telecommunications area. And there was a decision in the Congress to not have technology covered under, think about it as CALEA 2. And so the Congress passed on it. And our view has been that using the All Writs Act this way is inappropriate.

That doesn’t mean judges weren’t still using it. But this judge said, I don’t want to just order it, I want to understand your view of whether this is a proper use. That was the first time we’d ever been asked that. We went back and put our argument together and said no, we think it’s inappropriate.

Now in that case, what occurred was that the accused confessed, so the details of the case itself became completely unimportant. But the broader issue of, can the government use the All Writs Act to compel Apple to extract data from a phone, was still very important.

So when we saw the government going not only to this extent, but now going to a greater degree and asking us to develop a product, a new product that takes out all the security aspects, and makes it a simple process for them to guess many thousand different combinations of passwords or passcodes, we said you know, that case is now important. That question is now important, even though the details are no longer important. So we went back and asked that judge to rule on that. We’d been waiting, but we thought maybe he’s not going to do anything anymore since the case itself is no longer important.

And so we filed, and that’s a public filing. He asked us for more information about our point that the government was using this more and in increasingly significant ways. And then he asked the government to respond again. And then he ruled, last Monday I think it was.

Now rolling forward to San Bernardino, just setting the scene, can you give us a little bit of the narrative? How did you find out about the government’s request? How did you feel about it? Who did you talk to? How did you arrive at the decision that you did?

COOK: I think the attack itself happened midweek, Wednesday or Thursday, if I remember correctly, and we didn’t hear anything for a few days. I think it was Saturday before we were contacted. We have a desk, if you will, set up to take requests from government. It’s set up 24/7, not as a result of this. It’s been going for a while. The call came into that desk, and they presented us with a warrant as it relates to this specific phone.

There were other phones involved, as I understand it, other phones that the shooters were using that they destroyed. This one was left intact. And so we filled the warrant. The warrant was for all information that we had about that phone, and so we passed that information, which for us was a cloud backup on the phone, and some other metadata, if you will, that we would have about the phone.

I suspect—I don’t know but I suspect—that they also gave the carrier a warrant, because they obviously have the ability to get the phone metadata and the metadata of messages that go across the cellular network. So there are several different pieces of information they can gather on the phone.

Some time passed, quite a bit of time, over a month I believe, and they came back and said we want to get some additional information. And we said well, here’s what we would suggest. So we gave them some unsolicited advice. We said, take the phone to the home or apartment and power it, plug it in and let it back up. And as it turned out they came back and said, well, that didn’t work.

And we said, well, why? We need to understand this, we sent engineers and so forth, and we found out that they had directed the county in the early going, likely before we were ever involved, to reset the iCloud password. As you know, if you reset your iCloud password, the only way for the phone to back up is for you to put the new password in the phone. You can’t put the new password in the phone if it is passcode-protected. So unfortunately that didn’t work.

So we were helping. We were consulting in addition to passing the information that we had on the phone, which was all the information that we had. Some more time passed, and they started talking to us about how they might sue, or they may put a claim in. But they never told us whether they were going to do it or not. By then it’s seventy-five days or so from the attack.

I think most people here felt like it wouldn’t occur, and I felt that if it would occur, I would get a call. And that didn’t happen. We found out about it actually from the press, who were being briefed about it in advance of the filing. That means they elected to not file it under seal, in order to get the visibility on it, I guess, and try to swing the public, and decided not to reach out on it.

So we then had a decision of what should we do. Of course we had previously decided—because they had asked us to do the thing that we’re calling government OS, the new operating system without the security controls—and we had long discussions about that internally, when they had asked us. Lots of people internally were involved. It wasn’t just me sitting in a room somewhere deciding that way, it was a labored decision. We thought about all the things you would think we would think about.

So we had already decided before the suit hit that this wasn’t a good thing for people. From a customer point of view it wasn’t good, because it would wind up putting millions of customers at risk, making them more vulnerable. In addition, we felt like it trampled on civil liberties, not only for our customers but in the broader sense. It felt like different points in history, almost, in the U.S., where the government overreached for whatever reason. And we were dead set in the path of it.

So we knew it was wrong. It was wrong on so many levels. And so when we saw the suit, when the suit actually hit, it was then more about, how do we communicate to the Apple community why we’re doing what we’re doing?

Because we knew that on the surface, why won’t you open this phone, you know, most people would quickly jump to yeah, you should do this. As we would want to if it didn’t have all these other implications to it. So it then became more about being clear and straightforward about why we were doing what we were doing.

When you say civil liberties, what do you mean exactly? What does that mean to you?

COOK: It means a lot of things. When I think of civil liberties I think of the founding principles of the country. The freedoms that are in the First Amendment. But also the fundamental right to privacy.

And the way that we simply see this is, if this All Writs Act can be used to force us to do something that would make millions of people vulnerable, then you can begin to ask yourself, if that can happen, what else can happen? In the next case you might say, well, maybe there should be a surveillance OS. Maybe law enforcement would like the ability to turn on the camera on your Mac. But it wasn’t clear at all. Because if you read the All Writs Act, you can tell it was written over two hundred years ago. It’s a very open-ended kind of thing that was clearly meant to fill in the crevices of laws that didn’t exist yet in the country. So we saw this slippery slope there.

And also the act itself doesn’t look at the crime, it doesn’t look at the reason the government wants it. It looks at the burden to the company that it’s asking to do it. So this case was domestic terrorism, but a different court might view that robbery is one. A different one might view that a tax issue is one. A different one might view that a divorce issue would be okay. We saw this huge thing opening and thought, you know, if this is where we’re going, somebody should pass a law that makes it very clear what the boundaries are. This thing shouldn’t be done court by court by court by court.

Particularly looking at the history. The Congress made a conscious decision not to do this. It’s not like, oh, this technology is so new, it’s never been thought of before. It’s not like that. So we saw that being a huge civil liberties slide. We saw us creating a back door—we think it’s fundamentally wrong. And not just wrong from a privacy point of view, but wrong from a public safety point of view.

Think about the things that are on people’s phones. Their kids’ locations are on there. You can see scenarios that are not farfetched at all where you can take down power grids going through a smartphone. So there’s all kinds of things that as we looked at it, we think the government should be pushing for more encryption. That it’s a great thing. It’s like the sun and the air and the water. It’s a superb thing.

And certainly some things that are very good can sometimes be used in a bad way. We get that. But you don’t take away the good for that sliver of bad. We’ve never been about that as a country. We make that decision every day, right? There are some times that freedom of speech—we might cringe a little when we hear that person saying this, and wish they wouldn’t, but we think about the alternative of not having that freedom. We think about freedom of the press. You just go on and on and on. We all cringe occasionally, but then we think about if you begin to restrict and limit and box. Pretty soon it’s not the country that we’re a part of anymore. It’s not America anymore. This to us is like that. It’s at the core of who we are as a country.

And you know, it wasn’t very long ago that all this stuff was being debated. It’s not like it hasn’t been discussed. So this seemed like a back door of the back door. You know, trying to force someone to put a back door in, making people more vulnerable. Clearly trampling on civil liberties. I mean, I think it’s hard to debate these things. I think these things are unequivocally what is going on.

And if they are to go on, they should go on out in the open, in the Congress, and the Congress should pass a law. Because those are the people we vote for to represent us. You know, we don’t elect people in appointed positions in government, and for the most part we don’t elect judges, and so forth.

And courts can have different views, and I think they would. We know that. It’s our system, and it’s the great thing about our system. But in something so fundamental, this should be talked about.

Now for us, and this is so key, I believe that if you took privacy and you said, I’m willing to give up all of my privacy to be secure. So you weighted it as a zero. My own view is that encryption is a much better, much better world. And I’m not the only person that thinks that. You know, Michael Hayden thinks that, and he ran the NSA so that’s a point of view we should all listen to. So you can see not only data theft and theft of all different things, but you then begin to think about the public safety aspects of it. And to me it is so clear that even if you discount the importance of privacy, that encryption is the way to go.

I’m hoping that that comes out strong. Once again, I think it came out once in the Congress and they said, we’re not doing anything to limit this. I think it happened in the administration as well and they said, we’re not going to seek any legislation on this. So I think we need to go through that again and hope everybody comes to the same conclusion.

Do you feel as though the FBI intentionally took a case that was obviously very emotional, it’s the ultimate domestic terrorism case, not so much because there is vital evidence on that phone, but because they knew that they were going to make this the hardest version of the debate?

COOK: I think they picked a case to pursue that they felt they had the strongest possibility of winning. Is there something on the phone? I don’t know. I don’t think anybody really knows.

The thing that some people point out, or have pointed out to me, is that the other phones are smashed. One of the family members of the victim came out last week and said everybody knew these phones were monitored. In fact he said they had the GPS app on the phone that allowed the county to track the employees because the employees were field employees. And I’m sure they did it from a safety point of view.

And the FBI waited seventy-five days. I guess that’s the other thing that some people pointed out. But I don’t know, is the real answer.

Do you feel ambushed?

COOK: No, I don’t feel ambushed. What I feel is that in a professional way, if I’m working with you for several months on things, if I have a relationship with you, and I decide one day I’m going to sue you, I’m a country boy at the end of the day. I’m going to pick up the phone and tell you I’m going to sue you.

And so do I like their tactics? No. I don’t. I’m seeing the government apparatus in a way I’ve never seen it before. Do I like finding out from the press about it? No. I don’t think it’s professional. So do I like them talking about, or lying about, our intentions? No. I’m offended by it. Deeply offended by it.

However, putting all of that emotion aside, I think the dialogue that is happening now with a lot of different people and a lot of different view points, people that agree with us and disagree with us, I think it’s healthy and it’s a part of democracy. So that part I’m really excited about. And I’m optimistic that we’ll get to the right conclusion.

And again, when I say that I’m separating, the court’s going to do what it’s going to do. But you were asking about the broader policy issue, I think, and I’m excited now that people are engaged once again. I thought we’d already done this, to be honest. But I think we have to do it again. And that’s fine.

So I do think that’s positive. I don’t think the whole thing is negative. But there are parts of their approach that I think are heavy-handed and not the country I love.

Have you spoken to the President?

COOK: Not about this case.

You talk about public safety. Encryption is obviously incredibly valuable as a tool for preserving public safety. As against that, there’s a long and honorable tradition of law enforcement being able to get access to information that it needs to investigate crimes. And that also helps us with public safety. So you’re weighing one against the other, right? It’s not like it’s black and white. You have to make a tradeoff. Or am I wrong in saying that?

COOK: Well, first of all, I don’t make the tradeoff. That’s the Congress’s job, to pass laws and so forth. But no, I don’t see it like that. I know everybody wants to paint it as privacy versus security, as if you can give up one and get more of the other. I think it’s very simplistic and incorrect. I don’t see it that way at all.

Because the reality is that if you—let’s say you just pulled encryption. Let’s ban it. Let’s you and I ban it tomorrow. And so we sit in Congress and we say, thou shalt not have encryption. What happens then? Well, I would argue that the bad guys will use encryption from non-American companies, because they’re pretty smart and encryption isn’t—I don’t own encryption, Apple doesn’t own encryption. Encryption, as you know, is everywhere. In fact some encryption is funded by our government. Some of the best encryption is funded by the government. But you’ll see encryption coming out of most countries in the world.

So if you’re worried about messaging, which I think is primarily the worry in this scenario, people will just move to something else. You know if you legislate against Facebook and Apple and Google and whatever else in the US, they’ll just use something else. So are we really safer then? I would say no. I would say we’re less safe, because now we’ve opened up all of the infrastructure for people to go wacko at.

You’ve already seen people hitting the U.S. government—they pulled twenty million people’s information out from Social Security, their thumbprints and everything else. You see people going after private companies. You see people going after consumers. So the reality of today from a cyber security point of view—I think some of the top people predict that the next big war is fought on cyber security.

So you want to have an unbelievable defense. You probably want to have an offense too, but that’s somebody else’s job, to worry about that. So the act of banning or limiting or putting a back door in—a back door is any vulnerability that if I can get in, that means somebody else can. We look at it pretty simply as, we want to encrypt end to end. And if you guys are sending a message to each other, you have the ability to read it by putting your passcode in and decrypting it. Nancy has the ability to read it, but nobody else does.

So it’s just a simple thing. We don’t feel like we should be in the middle of that. I’m the FedEx guy. I’m taking your package and I’m delivering it. I just do it like this. My job isn’t to open it up, make a copy of it, put it over in my cabinet in case somebody later wants to come say, I’d like to see your messages. That’s not a role that I play. It’s not a role that I think I should play. And it’s certainly not a role I think you want me to play. I don’t think so, anyway. I don’t feel like I should be the storage compartment for trillions of messages. And I’m not saying that from a cost point of view or anything else, I’m saying it from an ethics and values point of view. You don’t want me to hold all that stuff, right? I think you guys should have a reasonable expectation that your communication is private.

That’s a new development though, right? It didn’t use to—

COOK: No, from the start, from the very start of Messages, when—you were here when we launched it. We launched it with end-to-end encryption. And so this didn’t just happen, we didn’t suddenly think of this after Snowden. I know everybody says that, but it’s not true. FaceTime, end-to-end encrypted from the start.

I don’t mean it’s new to Apple, I mean in a broader sense, it didn’t use to be the case that private citizens could actually take information and put it in this place, not a literal space but a virtual space, that was inaccessible to law enforcement. That is new, is it not?

COOK: Some of it is new. It’s hard for me to make a blanket statement like that. But yes, I mean with hacking getting more and more sophisticated, the hacking community has gone from the hobbyist in the basement to huge sophisticated companies that are essentially doing this, or groups of people or foreign agents inside and outside the United States. People are running huge enterprises off of hacking and stealing data. So yes, every software release we do, we get more and more secure. And we’ve been doing it for years. That path, the path toward more security and more privacy is a path we’ve been on for a long time. It’s not one that we just ventured on a year ago or two years ago or whatever.

Right. Your position is—I’m putting this in childishly cartoonish terms—that we’ve got to keep the hackers out, and if that means we keep the government out as well, so be it. It’s a trade off.

COOK: Yes. It’s not that—I’m not targeting government. I’m not saying hey, I’m closing it because I don’t want to give you any data. I’m saying that to protect our customers, we have to encrypt. And a side effect of that is, I don’t have the data.

But I wouldn’t go the step further that you went to and say that means the government is out. Because think about this—now, we have an unusual case in the south, so forget about that case for a minute and let’s talk about a normal case.

Let’s say they have a problem with you. They can come to you and say, open your phone. And one way is for it to be between the government and you. Then you can, I don’t know, they could pass a law that says you have to do it, or you have to do it or there’s some penalty, or something. That’s for somebody else to decide. But it does seem like it should be between you and them.

Except if I’m dead, or like in the drug dealer case I forgot my passcode.

COOK: You can’t—there’s probably not something that covers every single thing. And so—and this is one of the issues—if in order to cover the rare exception, you put a back door in, think about the consequences of the back door. You know, you can’t have a back door that says, good people only. It doesn’t work that way.

So what’s to stop financial institutions from saying we want it to be impossible for the government to have access to financial records?

COOK: I don’t know their infrastructure as well, but they use encryption significantly. Without encryption, you and I wouldn’t be able to do our banking online. We wouldn’t be able to buy things online, because your credit cards—they’ve probably been ripped off anyway, but they would be ripped off left and right every day if there wasn’t encryption.

The thing that is different to me about Messages versus your banking institution is, as part of you doing business with the bank, they need to record what you deposited, what your withdrawals are, which of your checks have cleared. So they need all of this information. That content they need to possess, because they report it back to you.

That’s the business they’re in. Take the message. My business is not reading your messages. I don’t have a business doing that. And it’s against my values to do that. I don’t want to read your private stuff. So I’m just the guy toting your mail over. That’s what I’m doing. So if I’m expected to keep your messages, and everybody else’s, then there should be a law that says, you need to keep all of these.

Now I think that would be really bad. I think it would be really bad because in order for me to keep them, I have to have a way to see them. If I have to have a way to see them and a place to copy them, you can imagine—if you knew where the treasure was buried at, and everybody else did, then it puts a bull’s eye on that target. And in the world of cyber security, the last thing you want is to have a target painted on you.

I’m not just saying that from an Apple point of view, but if it’s painted anywhere. No one should have a key that turns a billion locks. It shouldn’t exist. No one should have the message content for all of these messages. You wouldn’t want it all in one place. I think it would be very bad for security and privacy. If I know what your messages are, if I can read those, I’ll probably be able to conclude where you’re going, who you’re with, the location the message was sent.

I mean, the location the message was sent the phone company would have anyway probably, if it goes across the cellular network. But there’s just a lot of information there that can have implications on your safety, or your loved ones’ safety.

So being mindful of the fallacy of the hypothetical, suppose on the other side of the door is the nuclear device that’s on a timer, or the child who’s being tortured. And you have a key to that door.

COOK: If I had a key to that door I would turn that door. But that’s not the issue here, just to be clear. It’s not that I have information on this phone, and I’m not giving it. I had information. I gave all of it. Now they’re saying, hey, you could invent something to grab some additional information. We’re not sure there’s anything on there, but we want you to invent this in case there is. And the thing that they want me to invent, that key can turn millions of locks.

But that just argues that their rationale isn’t as strong as it should be. It may not exist. We don’t know if there’s anything valuable on that phone.

COOK: I’m actually—forget that, because I’m not substituting my judgment for theirs on the phone. I think they’ve said what I’ve said. They don’t know if there’s anything on it. I think everybody’s said that. I think Comey says maybe there is, maybe there’s not. So I think everybody recognizes that there may not be anything on it, there may be something on it. What I’m saying is, to invent what they want me to invent puts millions of people at risk.

And it’s not about one phone. It’s very much about the future. You have a guy in Manhattan saying I’ve got a hundred and seventy-five phones that I want to take through this process. You’ve got other cases springing up all over the place where they want phones taken through the process. So it’s not about one phone, and they know it’s not about one phone. I mean, that’s a purpose of the case, to set a precedent to get a process so they can kind of turn a crank without regard to what the case is about, honestly. Right? It’s anything a court would say to use it for.

But is your point then that the courts are not the right place? You talked about if Congress wants to do this, pass a law. But you’re also saying it would be absolutely bad for Apple to build this.

COOK: Yes.

Whether it’s Congress that tells you to do that—

COOK: I am saying that.

—or the courts. In that case, aren’t you putting yourself and your judgment in front of any democratic processes, as well as a legal process?

COOK: No, because at the end of the day I’ll follow the law. But in our view there’s no law today that says I have to do this. The government is saying that this two hundred-plus year old law gives them the right to tell me I have to do it. I look at that and say, come on guys, this is crazy. That’s not right, and you know it’s not right.

So that dispute is going to happen in court now, but on the larger issue of encryption and back doors, to me that is a Congressional topic, because it has huge implications across many different areas. Someone needs to take a step back and look at public safety. They need to look at national security. They need to look at cyber security. They need to look at privacy. They need to look at other civil liberties.

To me Congress is the natural place that is set up in our three-branch structure in order to weigh such things. And if, in the end, they conclude that they want to limit or ban encryption, or force a back door, then they clearly have the right to do that. Because they can pass a law. And if the President signs it, it becomes law.

Do I think that will happen? No. I think there’s too much evidence to suggest that that’s bad for national security. It means we’re really throwing out founding principles on the side of the road. So I think there’s so many things to suggest that they wouldn’t do that. That’s not something I lose sleep over. I’m very optimistic, I have got to be, that in a debate, a public debate, all of these things will rise up and you’ll see sanity take over.

You know as well as I do, sometimes the way we get somewhere, our journey is very ugly. But I’m a big optimist that we ultimately arrive at the right thing. Yes, there have been cases where that doesn’t happen. But I optimistically think that it will.

So what I would like to see is, we think it should be studied. This was the commission idea. The reason we’re saying that is not to punt, it’s where somebody can look at all of these implications. Right now we have one part of government looking at one thing. They’re looking at, how do I get the most information to solve this case? Or maybe not to solve it, because they may have it solved, but get the most information on this case. I’m saying, guys, what you’re asking me to do has a lot more implications than this case.

This is something that to me is a basic responsibility that they have. If somebody studies it, and the right people are on it, the right debate happens, which I think is largely happening today, then I think what will come out of it is people will stop talking about weakening or banning encryption. We’ll have a huge pro-encryption stance that people will rally around, that it’s a good thing, that it’s a great thing and a necessary thing. I think that people will conclude that no back doors are the best, and the US should take a position that leads in the world by saying, no back doors anywhere. I think that Congress will do something to empower the different intelligence arms to build out a set of capabilities, new capabilities, to do their roles in the modern world, and encryption being a part of that.

That won’t need you doing it for them?

COOK: Correct.

But I’d like to talk about that for a moment, just to—you’ll hear the words “going dark,” or “warrantless,” or these kinds of words. This is how I see it, is right now, if somebody wanted information about Lev they could go to—who’s your carrier? Verizon. They could subpoena Verizon, lay a warrant on Verizon.

They would find out all the calls you’ve made, who they were made to, the length of the call, the time of the call. They could find out a lot of location data. They would find out messages that you sent across the cellular network, where you were. Lots of information.

If they get at my Nike app, my running times are terrible.

COOK: They may also learn that, right. I don’t know, I can’t speak for Nike on that. But they would know, they would come to us, and they would ask for some information, and if they had a valid warrant we would fill that. They would also probably spend a lot of time looking at things you’d done online, right, because there’s lots of things done in the public eye.

The truth is, this is the golden age of surveillance that we live in. There is more information about all of us, so much more than ten years ago, or five years ago. It’s everywhere. You are leaving digital footprints everywhere. Also there’s cameras everywhere, and I mean not just security cameras, but we all have a camera in our pocket. So if you want to know something that happened at a particular scene, you could probably find photos of that.

Thanks to you.

COOK: Thanks to, yes, partly, us. That’s right. And so my only point is, going dark is not—this is a crock. No one’s going dark. I mean really, it’s fair to say that if you send me a message and it’s encrypted, it’s fair to say they can’t get that without going to you or to me, unless one of us has it in our cloud at this point. That’s fair to say. But we shouldn’t all be fixated just on what’s not available. We should take a step back and look at the total that’s available.

Because there’s a mountain of information about us. I mean there’s so much. Anyway, I’m not an intelligence person. But I just look at it and it’s a mountain of data.

As a business person, as the guy running Apple, should this go to Congress, they rule, goes against you, how bad is it for Apple from a business point of view?

COOK: I think, first of all it’s bad for the United States. Because going against us doesn’t just mean going against us. It means likely banning, limiting or forcing back doors for [everyone]. I think it makes the U.S. much more vulnerable. Not only in privacy but also in security. The national infrastructure, everything. And I can’t imagine it happening because it would be outlandish for something like that to happen. I think everybody has better judgment than that.

But at the end of the day, we’re going to fight the good fight not only for our customers but for the country. We’re in this bizarre position where we’re defending the civil liberties of the country against the government. Who would have ever thought this would happen?

I never expected to be in this position. The government should always be the one defending civil liberties. And there’s a role reversal here. I mean I still feel like I’m in another world a bit, that I’m in this bad dream in some wise.

We’re in this bizarre position where we’re defending the civil liberties of the country against the government. Who would have ever thought this would happen?

So I don’t expect that the country wants that. You know we flirt with different things over different times. But we always come back to our core. And so is it bad for Apple? I think it’s bad for America, really bad for America. And I don’t expect it’ll happen. I don’t think it’ll happen. There’s too many bright people around.

Do you find it odd that now your job description includes weighing questions of public and private security, questions of privacy, right and wrong? Is it odd to you to be thrust into that role?

COOK: Yes. It feels very uncomfortable in some ways. Fighting the government is not a thing we choose to do. America is always stronger when we do things together. And so in my view the right approach here is for technology and intelligence to talk about the things we can do [together].

Ways we can have both privacy and security. Not view the world as this see-saw. I think this is a really strange way to see this. But it’s something that we’re fighting willingly. The easiest thing for us would have been to just do it.

That would have been easy. But it’s not right. And so I think at the end of the day—and none of us would have been able to sleep at night. We would have felt we had sold out. But that doesn’t make it comfortable.

It is shocking to me that the U.S. is the only country in the world that has asked this. If I had heard it from, I don’t know, from some other country or something, then maybe it would feel a little different. But the U.S. should be the shining city on the hill, the beacon of civil liberties.

This is just one of those cases where occasionally the government overreaches and doesn’t act in the best interest of its citizens. But I’m optimistic that we’ll get through it and get to a much better place.

From a technological point of view, is the optimal path forward that you guys just engineer yourselves out of this loop? Make it so that you couldn’t even supply the government an OS that would do what the government is asking you to do?

COOK: I think with every release we do, we have to go up. Because we have to try to stay one step ahead of the bad guys out there. The truth is that our security today will not be good enough for tomorrow. That’s true.

You have to accept that whether you’re sitting here or any other companies around this valley. Security isn’t just a feature, it’s a base, it’s a fundamental, right.

I would never do what you’re saying with the intention of doing that. Our intention is never anything to do with government, it’s to protect people. Is it a consequence of it? Yes, I mean over time you do more and more and more. That’s the road we’ve been on for a decade.

Actually more than that I guess if you think about the Mac, encryption has been in the Mac since the ’80s, the end of the ’80s or something like that, right. So it’s not —

What do you think it says about the changed information environment in which we live? What you alluded to earlier, just the vast clouds of data which we now generate by virtue of going about our daily business. We’re just sort of spewing out gigabytes of data, everything we do, to the point where privacy, it changes what privacy means. Now privacy becomes, rather than the default setting of the world that we live in, now it becomes a feature that we have to buy and shop for and rely on. Is that a change you’ve observed over the course of your career?

COOK: I think your observation that there’s this increasing amounts of data is absolutely true. It wasn’t very long ago, you wouldn’t even think about there being health information on the smart phone. But today there’s a lot of health information available on your smart phone.

There’s financial information. There’s your conversations, there’s business secrets. There’s an enormous long list of things that there’s probably more information about you on here than exists in your home, right. Which makes it a lot more valuable to all the bad guys out there.

That’s a reality. That data is increasing in phenomenal leaps and bounds all the time, along with the sort of the hacking and cyber issues are going up at the same time. So these two curves are pointed in the same direction.

Partly, and no surprise, because there’s so much more information out there. It’s clear why hacking communities are [growing]. Because it’s like, there’s a lot more gold there. There’s a lot more to steal than ever before.

There’s unbelievably nefarious things happening out there. I think those two curves are connected, are very strongly correlated with each other. I think that [there is] this fundamental right to privacy and the philosophy that government shouldn’t be intrusive.

To me, that is the same. I don’t think because this is escalating that that should fundamentally be different. Now do people, do different companies etc. look at privacy different? Yes, they clearly do.

And that’s the reason all of us have privacy policies and some you can actually read and you can look at these things and judge for yourself where you see it. But I think the fundamental right that’s there is a constitutional right.

I mean this is something that is basic to who we are. It’s not something that floats with technology.

To be honest I oscillate from side to side on this issue. And it troubles me to think of should god forbid a future act of domestic terrorism occurs, who knows, it gets linked back to that phone in San Bernardino, we could have spotted it coming. How do you weigh the lives lost there against the diminution of millions of people’s privacy, not an abolition of it, but degradation of their privacy? It involves a lot of difficult calculus.

COOK: You’re clicking back to privacy versus security though. And I think it’s privacy and security or privacy and safety versus security. It’s not that people’s wellbeing, their physical wellbeing is not a part of privacy. It is. It very much is.

It’s not that one side has life and one side is your financial information or your photo or whatever, it’s not that. Think about something that happens to the infrastructure, where there’s a power grid issue.

Sure, just happened.

COOK: Think about the people that are on a device, a medical device that depends on electricity. And of course hospitals have generators etc, but there’s a lot of people out in homes that do not.

These are real things, these aren’t fantasy things by any means.

Both sides are real though, I mean … is real.

COOK: Both sides are real. And yes, both sides are real. But giving up one doesn’t get you more of that. Because what’s going to happen—again, I get back to what I see is, if you limit us, the internet doesn’t have boundaries. And so you can wind up getting an app from Eastern Europe, or Russia or wherever, it doesn’t matter which country, just outside the United States.

And that app would give you end-to-end encryption. The terrorist is smart enough to use that. But we’re trying to protect everyday people. Everybody doesn’t want to have to be a computer scientist to protect themselves. Most people have no desire to do that.

That’s the fundamental. It’s one of those things that people might feel good for a moment. They passed a law, and everybody goes ‘Whew.’ But then when you look at the reality, the bad guys still use it, and they’re still in the shadow.

And who have you exposed? You’ve exposed the 99% of good people.

When Donald Trump calls for a boycott of Apple products, do you think that’s because he doesn’t understand the arguments that you’re making?

COOK: I haven’t talked to him so I don’t know what he thinks. The way I look at it is, Apple is this great American company that could have only happened here. And we see it as our responsibility to stand up on something like this and speak up for all these people that are thinking what we’re thinking but don’t have the voice.


We don’t see it as our role as the decision maker. We understand Congress sets laws. But we [see] it as our role not to just let it happen. I mean too many times in history has this happened, where the government overreached, did something that in retrospect somebody should have stood up and said ‘Stop.’

We see that this is our moment to stand up and say ‘Stop.’ And force a dialogue. And that dialogue may, I don’t know how it’ll go. I’m optimistic. But I don’t know at the end of the day. But I see that as our role.

What kind of overreach are you thinking of, when you think of the historical?

COOK: I can think of really bad things where things were done that I’m sure people looked at and thought were good at the time. And nobody said anything.

I do think this is something that I think will affect the wellbeing of citizens of the U.S. for decades to come, that will affect civil liberties for decades to come.

This is of that kind of stature and of that kind of importance. As it was going, the steamroller was on. And our job was just to be rolled up under the steamroller.

And that’s what they expected. I wouldn’t be able to live [with that] anymore, and nobody here would. It wasn’t just me. It’s literally almost the whole company feels like that. And yes, we understand the technology a lot more and so we see, we understand technically this thing deeply.

We ward off attacks every day and so we have a working knowledge of the cyber landscape. That probably makes us ultra-sensitive to it. And we’re also believers in civil liberties. To me this is a part of the foundation of what America is. Right to privacy is really important. You pull that brick out and another and pretty soon the house falls.
