Google’s latest partnership could result in smartphones that recognize objects much the same way humans do.

Machine learning startup Movidius said recently that it’s working with Google to “accelerate the adoption of deep learning within mobile devices.” Movidius makes a vision processor that attempts to replicate human eyesight, taking into account variables like depth and texture to put objects into context. That capability, CEO Remi El-Ouazzane says, could result in much more powerful smartphones and other devices.

“When you understand the context, then there are many things you can do,” says El-Ouazzane. “You can automate tasks, you can free up the human being to do [other] things.”

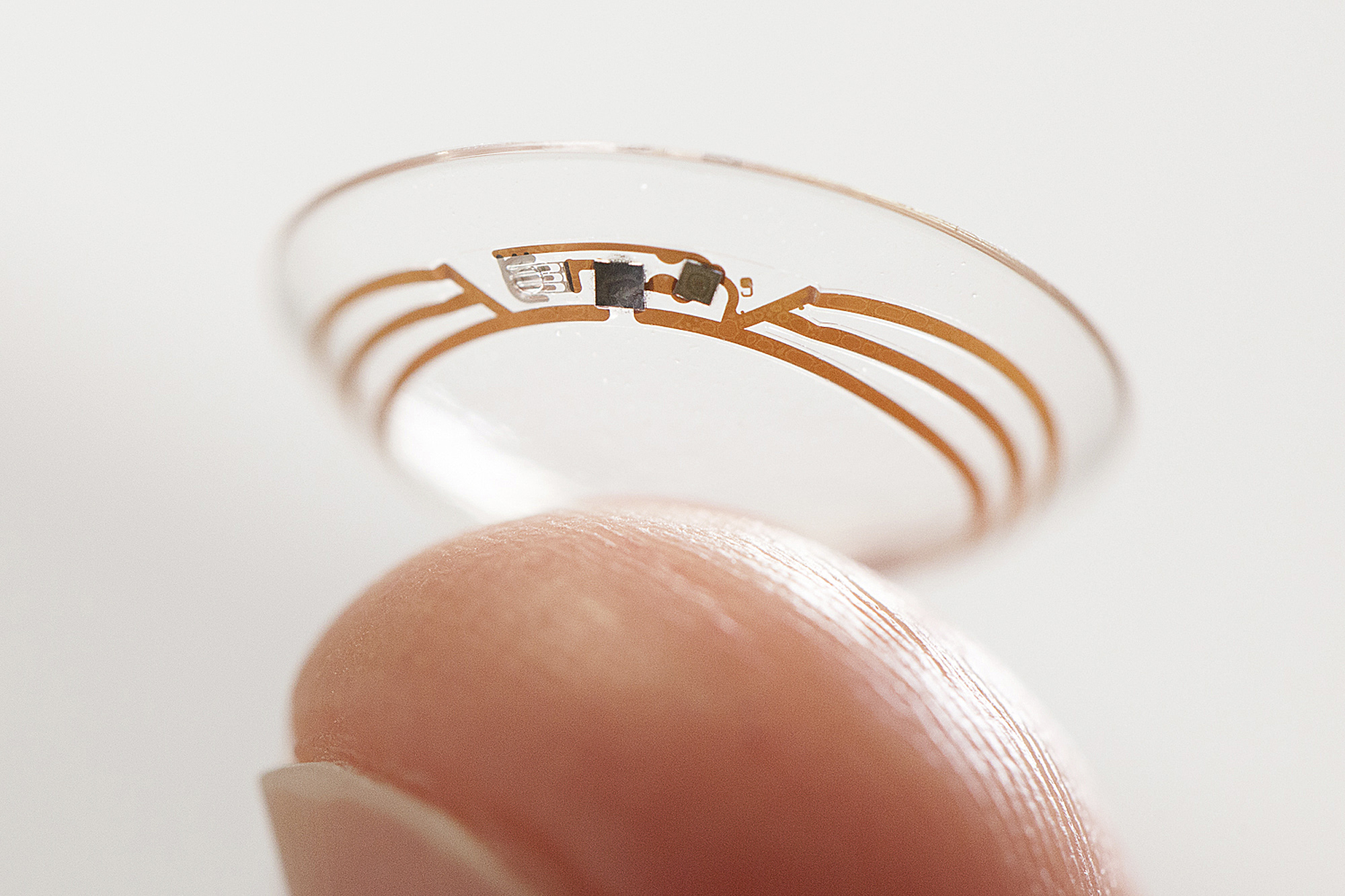

El-Ouazzane refused to talk about how his company’s chip might be used in any future Google products, like Android smartphones. But he did say it would show up “in the context of personal and wearable computing.” He also argued that it could improve unmanned aerial vehicles, or drones, by giving them the ability to better make sense of the video footage they’re recording as they fly about. A drone flown by an oil rig operator, for instance, might be able to detect damage as well as analyze the extent of the problem.

“The level of information you get will be much more sophisticated,” says El-Ouazzane.

Some commercially available software, like Google’s own Photos app, can already recognize particular people or objects in photos. Searching that app for “dogs,” for instance, pulls up only images of our four-legged friends. But that software relies on far-flung computers to run the actual computations. Movidius’ chip is different because its calculations happen right on the processor without any outside help, potentially speeding up the process and removing the need for an Internet connection.

Still, the executive admitted that truly replicating human eyesight and visual recognition is a steep challenge. “What our [vision processor] is doing definitely is not as tuned or as perfect as a [human] visual cortex,” he says. “We’re fighting 540 million years of human progression, so it takes time.”

The Movidius chip relies on an emerging field called “deep learning,” referring to software that mimics the way humans learn from their experiences. Long a mostly academic concept, deep learning is starting to bear fruit in the form of consumer applications. Microsoft Skype’s real-time translator, for instance, is a result of research in this field.

But one disadvantage of deep learning systems is that they typically need to absorb massive amounts of data about a given subject before they can go off and make decisions on their own. For a typical deep learning program to correctly identify a dog, for instance, it first needs to look at lots of poodle pictures. Movidius’ goal is to reduce this need with what it calls “unsupervised networks,” which are systems that can recognize different types of objects on their own with less human intervention.

“I think today we are reaching a very high level of performance on those networks,” says El-Ouazzane.
