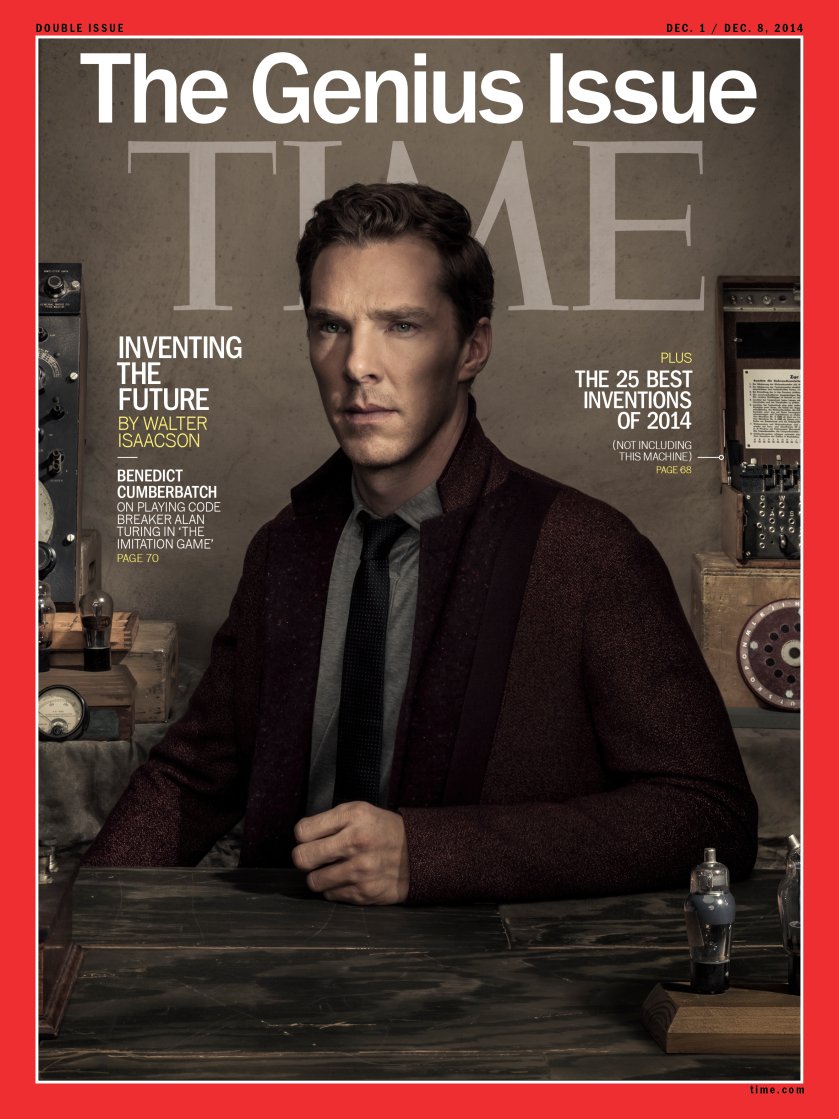

Alan Turing, the man who pioneered computing, also forced the world to question what it means to be human

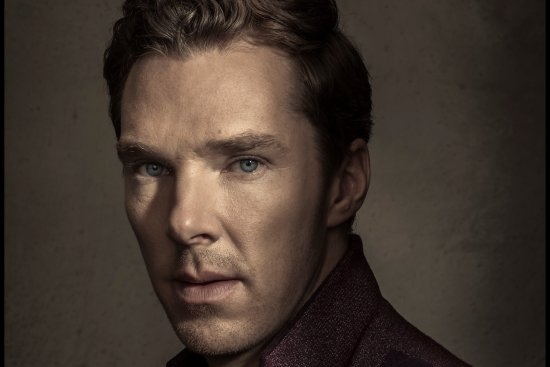

Alan Turing, the intellectual father of the modern computer, had a theory. He believed that one day machines would become so powerful that they would think just like humans. He even devised a test, which he called “the imitation game,” to herald the advent of computers that were indistinguishable from human minds. But as Benedict Cumberbatch’s performance in the new movie The Imitation Game shows, Turing’s heroic and tragic life provides a compelling counter to the concept that there might be no fundamental difference between our minds and machines.

As we celebrate the cool inventions that sprouted this year, it’s useful to look back at the most important invention of our age, the computer, which, along with its accoutrements, microchips and digital networks, is the über innovation from which most subsequent Ubers and innovations were born. But despite the computer’s importance, most of us don’t know who invented it. That’s because, like most innovations of the digital age, it has no single creator, no Bell or Edison or Morse or Watt.

Instead, the computer was devised during the early 1940s in a variety of places, from Berlin to the University of Pennsylvania to Iowa State, mainly by collaborative teams. As often seen in the annals of invention, the time was right and the atmosphere charged. The mass manufacture of vacuum tubes for radios paved the way for the creation of electronic digital circuits. That was accompanied by theoretical advances in logic that made circuits more useful. And the march was quickened by the drums of war. As nations armed for conflict, it became clear that computational power was as important as firepower.

Which is what makes the Turing story especially compelling. He was the seminal theorist conceptualizing the idea of a universal computer, he was part of the secret team at Bletchley Park, England, that put theory into practice by building machines that broke the German wartime codes, and he framed the most fundamental question of the computer age: Can machines think?

Having survived a cold upbringing on the fraying fringe of the British gentry, Turing had a lonely intensity to him, reflected in his love of long-distance running. At boarding school, he realized he was gay. He became infatuated with a fair-haired schoolmate, Christopher Morcom, who died suddenly of tuberculosis. Turing also had a trait, so common among innovators, that was charmingly described by his biographer Andrew Hodges: “Alan was slow to learn that indistinct line that separated initiative from disobedience.”

At Cambridge University, Turing became fascinated by the math of quantum physics, which describes how events at the subatomic level are governed by statistical probabilities rather than laws that determine things with certainty. He believed (at least while he was young) that this uncertainty and indeterminacy at the subatomic level permitted humans to exercise free will–a trait that, if it existed, would seem to distinguish them from machines.

He had an instinct that there were mathematical statements that were likewise elusive: we could never know whether they were provable or not. One way of framing the issue was to ask whether there was a “mechanical process” that could be used to determine whether a particular logical statement was provable.

Turing liked the concept of a “mechanical process.” One day in the summer of 1935, he was out for his usual solitary run and stopped to lie down in a grove of apple trees. He decided to take the notion of a “mechanical process” literally, conjuring up an imaginary machine and applying it to the problem.

The “Logical Computing Machine” that Turing envisioned (as a thought experiment, not as a real machine to be built) was simple at first glance, but it could handle, in theory, any mathematical computation. It consisted of an unlimited length of paper tape containing symbols within squares; the machine would be able to read the symbols on the tape and perform certain actions based on a “table of instructions” it had been given.
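The tape and the “table of instructions” described above can be sketched in a few lines of Python. This is only an illustrative toy, not Turing’s own notation: the names `run`, `INCREMENT` and `BLANK`, the tuple layout of each table entry, and the `max_steps` cap are all assumptions made for this example. The cap is there so the sketch always stops, which a real table is not guaranteed to do.

```python
from collections import defaultdict

BLANK = "_"  # symbol assumed for an empty square on the tape


def run(table, tape, state="start", max_steps=1000):
    """Apply a table of instructions to a tape.

    table maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". Returns (final_state, tape_contents).
    """
    # The tape is unbounded in both directions; unseen squares read as BLANK.
    cells = defaultdict(lambda: BLANK, enumerate(tape))
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        write, move, state = table[(state, cells[head])]
        cells[head] = write
        head += 1 if move == "R" else -1
    used = [i for i, symbol in cells.items() if symbol != BLANK]
    if not used:
        return state, ""
    return state, "".join(cells[i] for i in range(min(used), max(used) + 1))


# Hypothetical table: skip right over a row of 1s, then append one more 1.
INCREMENT = {
    ("start", "1"): ("1", "R", "start"),
    ("start", BLANK): ("1", "R", "halt"),
}

print(run(INCREMENT, "111"))  # ('halt', '1111')
```

Swapping in a different table changes what the same `run` function computes, which is a small-scale hint of why one general machine can stand in for many special-purpose ones.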

Turing showed that there was no method to determine in advance whether any given instruction table combined with any given set of inputs would lead the machine to arrive at an answer or go into some loop and continue chugging away indefinitely, getting nowhere. This discovery was useful for the development of mathematical theory. But more important was the by-product: Turing’s concept of a Logical Computing Machine, which soon came to be known as a Turing machine. “It is possible to invent a single machine which can be used to compute any computable sequence,” he declared.

Turing’s interest was more than theoretical, however. Fascinated by ciphers, Turing enlisted in the British effort to break Germany’s military codes. The secret teams set up shop on the grounds of a Victorian manor house in the drab redbrick town of Bletchley. Turing was assigned to a group tackling the Germans’ Enigma code, which was generated by a portable machine with mechanical rotors and electrical circuits. After every keystroke, it changed the formula for substituting letters.

Turing and his team built a machine, called “the bombe,” that exploited subtle weaknesses in the German coding, including the fact that no letter could be enciphered as itself and that there were certain phrases that the Germans used repeatedly. By August 1940, Turing’s team had bombes that could decipher German messages about the deployment of the U-boats that were decimating British supply convoys.
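The first weakness mentioned above, that no letter could be enciphered as itself, can be used to rule out places where a suspected phrase might sit in an intercepted message. The Python sketch below shows only that elimination step, not how the bombe worked; the function name `possible_crib_positions`, the ciphertext, and the choice of “WETTER” (German for “weather”) as the guessed phrase are illustrative assumptions.

```python
def possible_crib_positions(ciphertext, crib):
    """Return the offsets at which the guessed phrase could line up.

    An offset is ruled out if any letter of the guessed phrase would sit
    opposite the same letter in the ciphertext, since the machine never
    enciphered a letter as itself.
    """
    ciphertext, crib = ciphertext.upper(), crib.upper()
    return [
        offset
        for offset in range(len(ciphertext) - len(crib) + 1)
        if all(c != p for c, p in zip(ciphertext[offset:], crib))
    ]


# Made-up ciphertext, purely for illustration.
print(possible_crib_positions("QWETRTEXRZ", "WETTER"))  # [4]
```

In this made-up case four of the five possible alignments are eliminated before any machine time is spent, which is the kind of shortcut that made repeated phrases so valuable to the codebreakers.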

The bombe was not a significant advance in computer technology. It was an electromechanical device with relay switches rather than vacuum tubes and electronic circuits. But a subsequent machine produced at Bletchley, known as Colossus, was a major milestone.

The need for Colossus arose when the Germans started coding important messages, including orders from Hitler, with a machine that used 12 code wheels of unequal size. To break it would require using lightning-quick electronic circuits.

The team in charge was led by Max Newman, who had been Turing’s math don at Cambridge. Turing introduced Newman to the electronics wizard Tommy Flowers, who had devised wondrous vacuum-tube circuits while working for the British telephone system.

They realized that the only way to analyze German messages quickly enough was to store one of them in the internal electronic memory of a machine rather than trying to compare two punched paper tapes. This would require 1,500 vacuum tubes. The Bletchley Park managers were skeptical, but the team pushed ahead. By December 1943–after only 11 months–it produced the first Colossus machine. An even bigger version, using 2,400 tubes, was ready by June 1, 1944. The machines helped confirm that Hitler was unaware of the planned D-Day invasion.

Turing’s need to hide both his homosexuality and his codebreaking work meant that he often found himself playing his own imitation game, pretending to be things he wasn’t. At one point he proposed marriage to a female colleague (played by Keira Knightley in the new film), but then felt compelled to tell her that he was gay. She was still willing to marry him, but he believed that imitating a straight man would be a sham and decided not to proceed.

After the war, Turing turned his attention to an issue that he had wrestled with since his boarding-school friend Christopher Morcom’s death: Did humans have “free will” and consciousness, perhaps even a soul, that made them fundamentally different from a programmed machine? By this time Turing had become skeptical. He was working on machines that could modify their own programs based on information they processed, and he came to believe that this type of machine learning could lead to artificial intelligence.

In a 1950 paper, he began with a clear declaration: “I propose to consider the question, ‘Can machines think?’ ” With a schoolboy’s sense of fun, he invented his “imitation game,” now generally known as the Turing test, to give empirical meaning to that question. Put a machine and a human in a room, he said, and send in written questions. If you can’t tell which answers are from the machine and which are from the human, then there is no meaningful reason to insist that the machine isn’t “thinking.”

A sample interrogation, he wrote, might include the following:

Q: Please write me a sonnet on the subject of the Forth Bridge.

A: Count me out on this one. I never could write poetry.

Q: Add 34957 to 70764.

A: [Pause about 30 seconds and then give as answer] 105621.

Turing did something clever in this example. Careful scrutiny shows that the respondent, after 30 seconds, made a slight mistake in addition. (The correct answer is 105,721.) Is that evidence that the respondent was a human? Perhaps. But then again, maybe it was a machine cagily playing an imitation game.
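The slip is easy to verify. A quick check in Python, with variable names chosen only for this example:

```python
a, b = 34957, 70764
claimed = 105621  # the answer given in Turing's sample dialogue

actual = a + b
print(actual)            # 105721
print(actual - claimed)  # 100
```

The respondent's answer is off by exactly 100, the sort of single-digit error a person makes when carrying in the hundreds column.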

Many objections have been made to Turing’s proposed test. “Not until a machine can write a sonnet or compose a concerto because of thoughts and emotions felt, and not by the chance fall of symbols, could we agree that machine equals brain,” declared a famous brain surgeon, Sir Geoffrey Jefferson. Turing’s response seems somewhat flippant, but it was also subtle: “The comparison is perhaps a little bit unfair because a sonnet written by a machine will be better appreciated by another machine.”

There was also the more fundamental objection that even if a machine’s answers were indistinguishable from a human’s, that did not mean it had consciousness and its own intentions, the way human minds do. When the human player of the Turing test uses words, he associates those words with real-world meanings, emotions, experiences, sensations and perceptions. Machines don’t. Without such connections, language is just a game divorced from meaning. This critique of the Turing test remains one of the most debated topics in cognitive science.

Turing gave his own guess as to whether a computer might be able to win his imitation game. “I believe that in about 50 years’ time it will be possible to program computers … so well that an average interrogator will not have more than a 70% chance of making the right identification after five minutes of questioning.”

Fooling roughly 30% of interrogators for only five minutes is a pretty low bar. Still, it’s now been more than 60 years, and the machines that enter Turing-test contests are at best engaging in gimmicky conversational gambits. The latest claim for a machine having “passed” the test was especially lame: a Russian program pretended to be a 13-year-old from Ukraine who didn’t speak English well. Even so, it fooled barely a third of the questioners for five minutes, and no one would believe that the program was engaging in true thinking.

A new breed of computer processors that mimic the neural networks in the human brain might mean that, in a few more years or decades, there may be machines that appear to learn and think like humans. These latest advances could possibly even lead to a singularity, a term that computer pioneer John von Neumann coined and the futurist Ray Kurzweil and the science-fiction writer Vernor Vinge popularized to describe the moment when computers are not only smarter than humans but also can design themselves to be even supersmarter and will thus no longer need us mortals. In the meantime, most of the exciting new inventions, like those in this issue, will involve watches, devices, social networks and other innovations that connect humans more closely to machines, in intimate partnership, rather than pursuing the mirage of machines that think on their own and try to replace us.

The flesh-and-blood complexities of Alan Turing’s life, as well as the very human emotions that drove him, serve as a testament that the distinction between man and machine may be deeper than he surmised. In a 1952 BBC debate with Geoffrey Jefferson, the brain surgeon, this issue of human “appetites, desires, drives, instincts” came up. Man is prey to “sexual urges,” Jefferson repeatedly said, and “may make a fool of himself.”

Turing, who was still discreet about his sexuality, kept silent when this topic arose. During the weeks leading up to the broadcast, he had been engaged in a series of actions that were so very human that a machine would have found them incomprehensible. He had recently finished a scientific paper, and he followed it by composing a short story, which was later found among his private papers, about how he planned to celebrate. “It was quite some time now since he had ‘had’ anyone, in fact not since he had met that soldier in Paris last summer,” he wrote. “Now that his paper was finished he might justifiably consider that he had earned another gay man, and he knew where he might find one who might be suitable.”

On a street in Manchester, Turing picked up a 19-year-old working-class drifter named Arnold Murray, who moved in with him around the time of the BBC broadcast. When Turing’s home was burglarized, he reported the incident to the police and ended up disclosing his sexual relationship with Murray. Turing was arrested for “gross indecency.”

At the trial in March 1952, Turing pleaded guilty, though he made it clear he felt no remorse. He was offered a choice: imprisonment or probation contingent on receiving hormone injections designed to curb his sexual desires, as if he were a chemically controlled machine. He chose the latter, which he endured for a year.

Turing at first seemed to take it all in stride, but on June 7, 1954, at age 41, he committed suicide by biting into an apple he had laced with cyanide. He had always been fascinated by the scene in Snow White in which the Wicked Queen dips an apple into a poisonous brew. He was found in his bed with froth around his mouth, cyanide in his system and a half-eaten apple by his side.

The imitation game was over. He was human.

Isaacson, a former managing editor of Time, is the author of The Innovators: How a Group of Hackers, Geniuses, and Geeks Created the Digital Revolution, from which parts of this piece are adapted