This is hardly the first time we’ve used centralized computers

In an age of handheld computers when everything from video games to television shows can be streamed over the Internet, it was curious if not comical to see the creative staff on AMC’s Mad Men get introduced to a computer at the beginning of the show’s final season.

“Trust me,” says Lou Avery to employees who just lost a room to the machine, “you’re going to use that computer more than you used that lounge.”

As viewers, we know that to be one of the biggest understatements ever uttered on the small screen. In contrast, the effects of cloud computing, whose roots predate even the IBM System/360 portrayed in that office, cannot be overstated.


“We’ve gone through, shall we say, phases of centralization and decentralization in computing since the 1950s,” says Danny Sabbah, IBM’s chief technology officer for the company’s cloud computing efforts. In fact, at the time portrayed in the Mad Men episode, the first phase — the original age of cloud computing — was already coming to a close.

Yes, cloud computing, which is super-powering many of our devices today, got its start before President John F. Kennedy took office.

Sometime around 1955, John McCarthy, the computer scientist who coined the term “artificial intelligence,” came up with the theory of time-sharing, which is very similar to today’s cloud computing. Back then, a computer could cost several million dollars, and users wanted to make the greatest use of a very expensive asset. In addition, smaller companies that couldn’t afford a computer of their own wanted to do the same kind of automation that larger companies could do, but without making such an expensive investment. So if users could find a way to “time-share” a computer, they could effectively rent its computational might without having to foot the bill for its massive cost single-handedly.

In the 1960s and ’70s, the idea of service bureaus emerged, which allowed users to share these very expensive computers. “That whole notion of shared services then had to be managed so that people weren’t stepping on each other,” says Sabbah. Users had their own terminals that ran hosted applications. A protocol (similar to, but different from, today’s Internet) carried information from the service bureau to each remote terminal and carried requests from that terminal back to the service bureau, where they were routed to the right application.

“In many ways, basically, it’s very similar in principle and in concept to what we do today,” says Sabbah. But it was much more expensive, nowhere near as pervasive, and certainly wasn’t built on the kinds of networks we have now. It was all connected by cable: not even a modem, and certainly not wireless signals.

Not long after, the Defense Department’s DARPA (the Defense Advanced Research Projects Agency) gave birth to the networking system that later became the Internet. At the same time, computers began to get smaller and use less energy. You no longer needed massive, water-cooled systems churning away to process data.

“You didn’t have to have Halon fire extinguishers and things like that,” says Sabbah. “And you had lots of software that started to emerge that made it much easier to program them.”

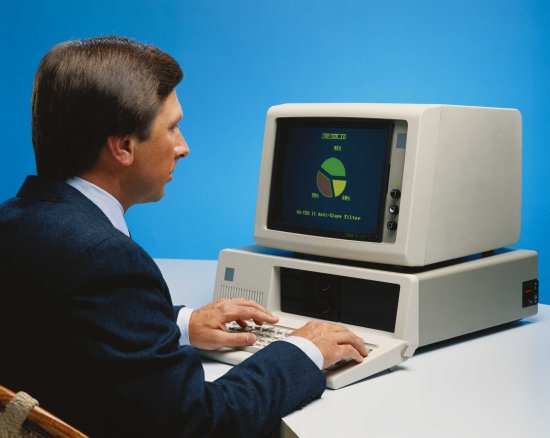

Then, in the 1980s, the personal computer took off. “Computing started to explode out of these centralized datacenters; everybody could have their own computer,” says Sabbah. As a result, McCarthy’s notion of shared computing fell out of favor, because people could now have a PC right in their own home.

Even as people got connected online, the idea of shared computing stayed largely dormant; it wasn’t until mobile Internet devices became popular that it returned as “cloud computing.” While smartphones pack the computing power of a PC, they can lack storage, which is where the modern-day “timeshare” system picks up. Whether it’s syncing your fitness tracker data or your photos across your devices, today’s cloud powers a variety of machines, keeping them in sync and running applications in a centralized place.

But we may soon see less cloud usage, says Sabbah. As it stands, gadgets like activity trackers and smart home devices send their data all the way up into the cloud to be processed, but that’s not necessary: our own tablets, smartphones, and computers can run the applications these peripherals need. Of course, some data, such as social media data, makes sense to send to a central location. But companies may start being more judicious about what they send up to the cloud and what they send over to nearby devices.

“The pendulum tends to swing back and forth,” says Sabbah. “You have something that goes to centralized computing, then people start to figure out how they can un-centralize that particular computing, and unleash new applications and innovation.”

One thing’s for certain: the cloud will never go away; it will only change its shape.