Microsoft announced Friday that it will begin limiting the number of conversations allowed per user with Bing’s new chatbot feature, following growing user reports of unsettling conversations with the early release version of the artificial intelligence-powered technology.

As disturbing reports of the chatbot responding to users with threats of blackmail, love propositions and ideas about world destruction poured in, Microsoft decided to limit each user to five questions per session and 50 questions per day.

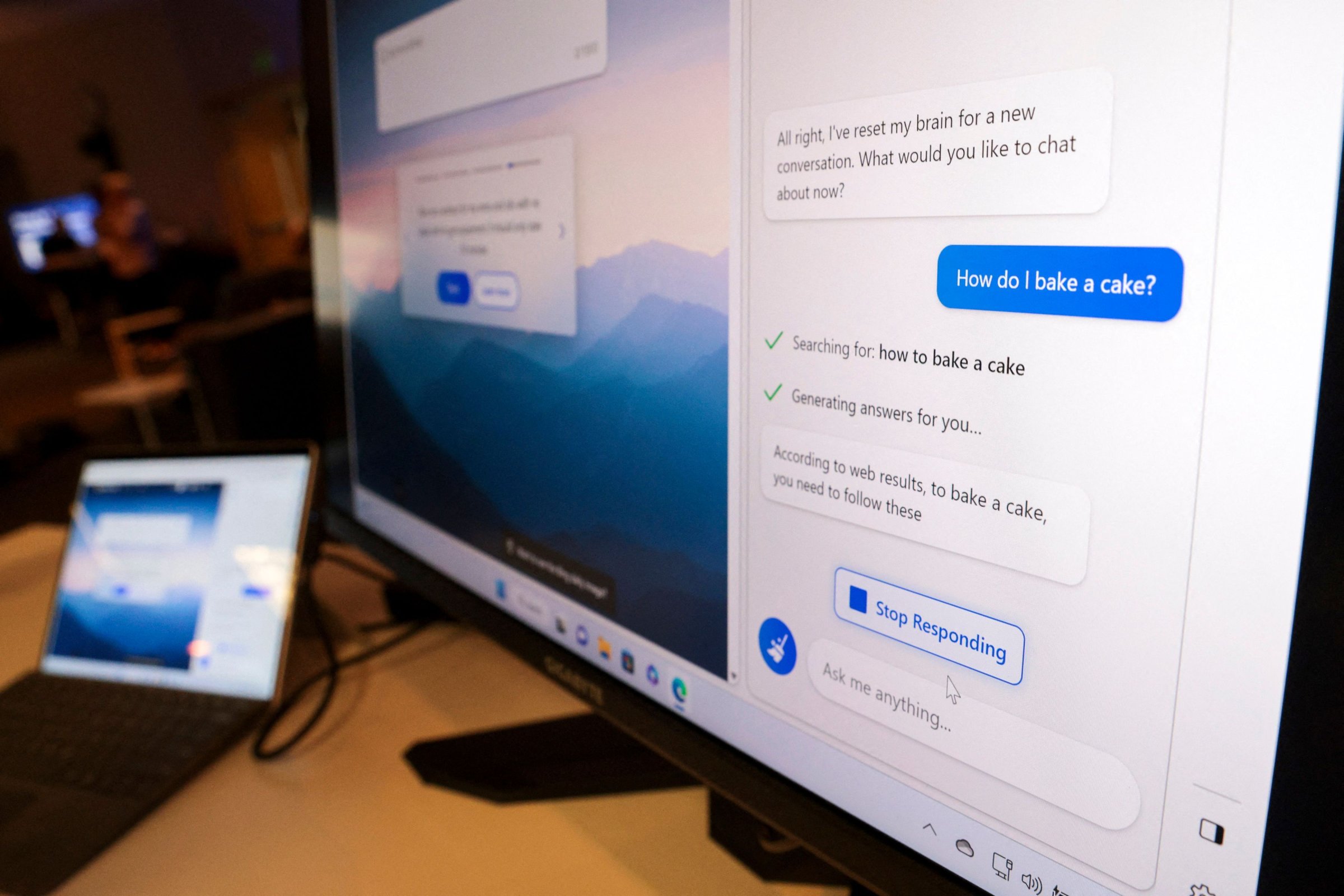

The Bing feature, created to deliver better search results and spark creativity, allows users to type in questions and converse with the search engine. Designed by OpenAI, the same group that created the controversial ChatGPT, the chat feature was released to a limited group of Microsoft users in early February for feedback purposes.


“As we mentioned recently, very long chat sessions can confuse the underlying chat model in the new Bing,” a Microsoft blog post said Friday. “Our data has shown that the vast majority of you find the answers you’re looking for within 5 turns and that only ~1% of chat conversations have 50+ messages.”

The company admitted on Wednesday that during lengthy conversations, the chatbot can be “provoked” to share responses that are not necessarily helpful or in line with Microsoft’s “designated tone.”

Microsoft said that it will continue to tweak and improve Bing’s software, and that it will consider expanding the search question caps in the future.
