Dr. Aiken is an academic advisor to the Europol Cyber Crime Centre and author of The Cyber Effect.

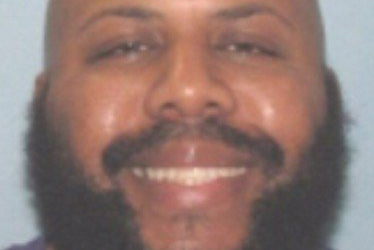

Steve Stephens made a chilling video titled “Easter day slaughter”, in which he filmed himself lethally shooting an apparently random victim, Robert Godwin, Sr., who was 74 years old. Stephens then posted the video of his crime on his Facebook page.

Stephens even offered insights into his motivations. He claimed that he had broken up with “the love of [his] life” and that he had “lost everything” gambling. He also provided evidence of premeditation: “I’ve run out of options… and now I’m just on some murder-type sh-t.” This self-justifying footage is hauntingly similar to a video uploaded to YouTube by Elliot Rodger, a 22-year-old virgin who killed six people and wounded thirteen on May 23, 2014, in Santa Barbara, California. Rodger then took his own life by shooting himself in his BMW. On April 18, Stephens shot and killed himself in his own car in Pennsylvania, after a brief pursuit by police.

It took Facebook two hours to take down the Steve Stephens content. By then, it had been viewed over 150,000 times. Social media companies want live, cutting-edge, raw, captivating content that has the potential to go viral. What they are getting is live-streaming of extreme criminal violence. It’s going viral.

Stephens and Rodger are far from the only cases. In February, 19-year-old Marina Lonina was sentenced to nine months in prison after pleading guilty to “obstructing justice.” Last year, she had used the social media app Periscope to live-stream a man raping her 17-year-old friend. Lonina later claimed she had been documenting the crime, not sharing it as entertainment. Yet she had a cell phone in her hand and did not call 911.

Most murders and sexual assaults occur in secluded, private locations: one victim, one assailant, nobody watching. Murderers and rapists, like most criminals, tend to cover their tracks to avoid being caught. In the Lonina case, the crime was live-streamed; in the Stephens case, it was posted on Facebook Live. Both played out before potential audiences of hundreds of millions. Rodger seemed to desire a similar viewership.

Disturbing acts committed in public on social media are becoming more common — from bullying to suicide, sex crimes, murder and the torture of animals and children. For those of us working in the field of cyberpsychology — the study of the impact of technology on human behavior — the sense of urgency is escalating.

Why is this happening?

To put it simply: New technology means new behavior. The digital revolution is occurring so quickly that it has become difficult to tell the difference between passing trends, still emerging behavior and something that’s already become an acceptable social norm.

But we don’t have to sit before our screens, flummoxed. Cyberpsychologists can help make sense of the online environment, where people behave differently than they do in “real” life — due to a myriad of cyber effects.

One thing I’ve observed over and over again, as an advisor to Europol and an academic who has been involved with INTERPOL, the FBI and other law-enforcement agencies, is that human behavior is often amplified and accelerated online. There’s increasing evidence of a loss of empathy online: a heightened detachment from the feelings and rights of others. We see this in extreme cyberbullying and trolling. Anonymity online, almost like a superpower of invisibility, fuels this behavior. So does a phenomenon called the online disinhibition effect, which can cause individuals to be bolder, judgment-impaired and less inhibited, almost as if they were drunk.

Desensitization is another effect, a result of access to endless amounts of violent and extreme content on mainstream and digital media. Meanwhile, cyber exhibitionistic traits drive individuals to participate in acts that they hope will be viewed by an audience of millions.

The filming of men raping women was once confined to the brutality of war, but it now appears on the dark or hidden parts of the Internet, where it is shared privately. In the Lonina case, extreme content was shared with apparent enthusiasm by a high-school student, and on Periscope, a Twitter-owned app, no less.

Similarly, acts of murder were once reported after the fact, on the news, or were only available in the deepest and darkest parts of the web, so-called “snuff” content. Now it appears killing has become a form of live engagement on social media, generated and distributed by pathological and criminal cyber exhibitionists.

The disruptions of the digital revolution are accumulating and becoming harder to ignore. Law enforcement is on the case, but I can attest that those on the frontlines feel the same sense of concern, if not urgency, as the rest of us.

Who is to blame?

The individuals who commit the acts, the others who share the images and videos, the media outlets that spread them further? Or does the fault lie in the devices, the service providers, the software, and the companies behind them? And what is the responsibility of social media platforms where live violent and sexual content can be so easily uploaded?

There is currently no content-analysis technology that can effectively filter live-streamed video in real time. It has to be done by people, one video at a time. That is why social media companies such as Google (including YouTube), Facebook and Twitter use human content moderators. In fact, online content moderation has become an industry in Southeast Asia, where workers are hired to remove beheadings, torture, rapes and child-abuse images from these companies’ sites. But these human filters are already showing signs of serious emotional fallout from their work. They report insomnia, anxiety, depression and recurring nightmares, symptoms similar to those of post-traumatic stress disorder.

What can we do about anti-social media?

Just as oil companies have been held accountable by the media, government and activists for cleaning up the damage and spills caused directly or indirectly by their products, the social-media industry needs to be held accountable for its effects on humanity. The precautionary principle has been used to some effect in the environmental debate, and it works as follows: If an action or policy presents a risk of causing harm to the public (or the environment), in the absence of scientific consensus, those taking the action must prove that it’s not harmful.

This principle should apply to live content distributed by social-media companies in cyberspace. These companies are not merely mediating our communications, but changing how we think, live and act, as individuals and as a society. Let’s start a conversation about this. The cleanup needs to begin soon.

Contact us at letters@time.com