Google’s Photos app, introduced in May, is getting a new feature that makes it easier to create and share collaborative albums with other users, the company announced Thursday. The service will now support shared albums, which let users allow friends and family members to add new photos to a given album.

After creating an album in Google Photos, users will see an option to make it “collaborative.” Once this setting is turned on, anyone the creator shares the album with will be able to add photos to it. These contributors will receive a notification asking whether they’d like to join the album, and once they accept, the album’s creator will also be notified.

A Google account is required in order to contribute content and receive updates on an existing album.

It’s not a new or revolutionary idea by any means. Apple offers similar functionality in its iCloud Photo Library through a feature called iCloud Photo Sharing, which lets family and friends subscribe to a user’s photo albums, leave comments, and get notified when new images are added.

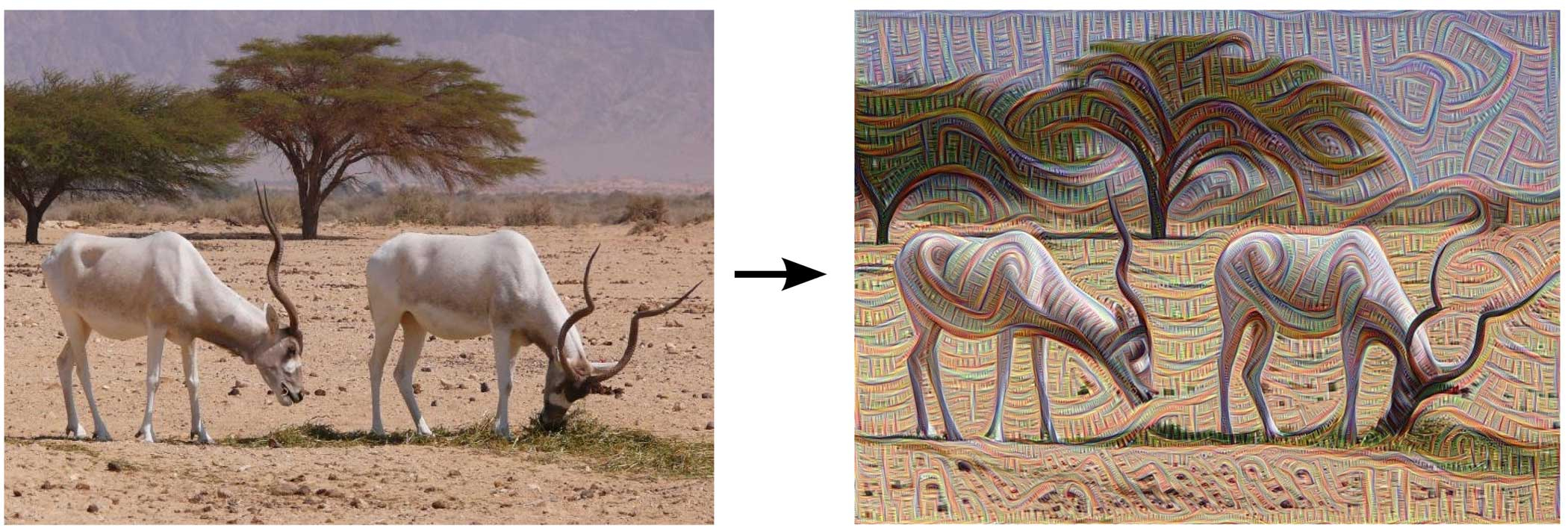

It’s notable, though, because shared albums were one of the few major features Google’s Photos app had been lacking until now. Google Photos already offers two key advantages over other photo storage apps: a remarkably accurate search function that uses machine learning to recognize what’s in each photo, and free unlimited storage for photos up to 16 megapixels.

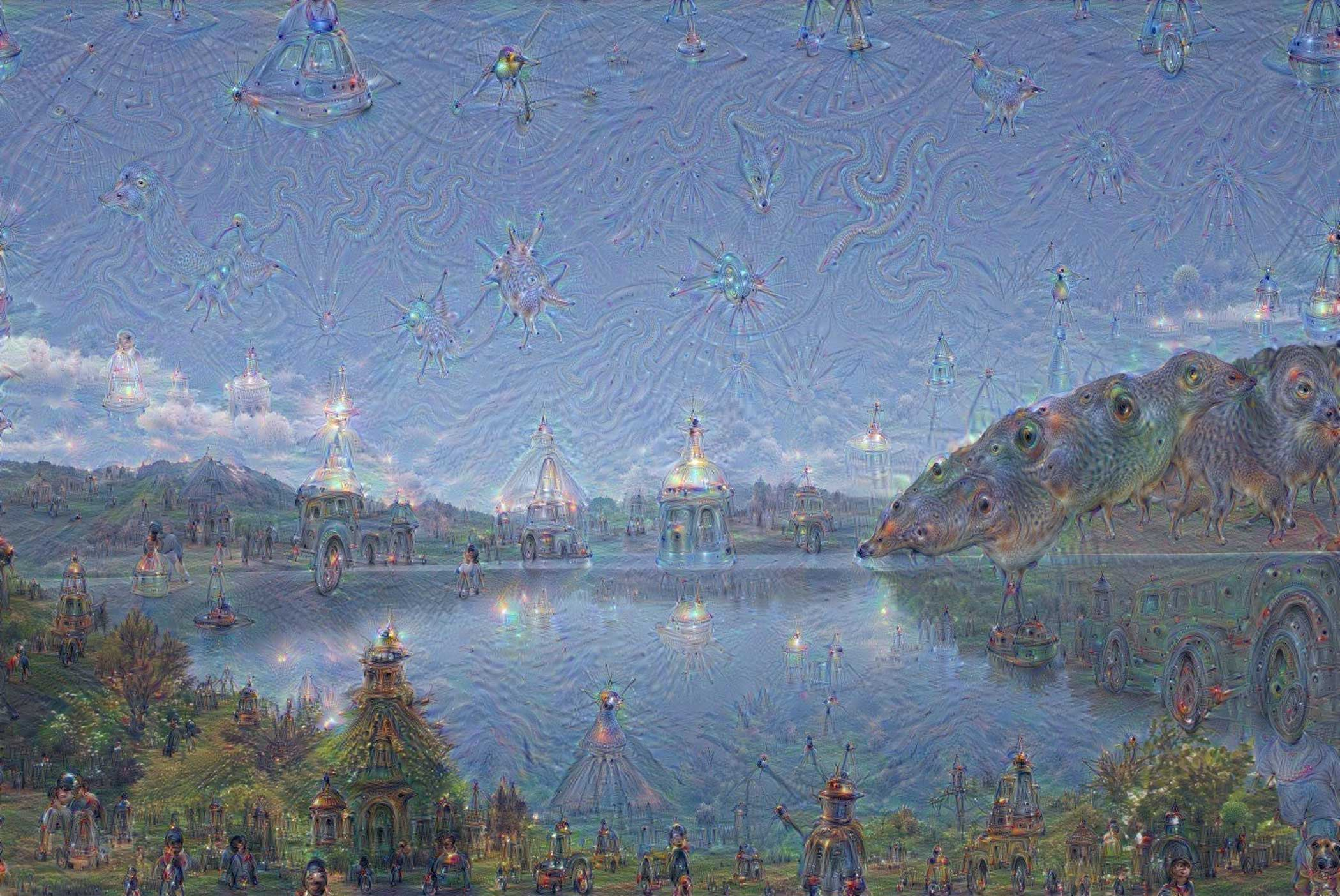

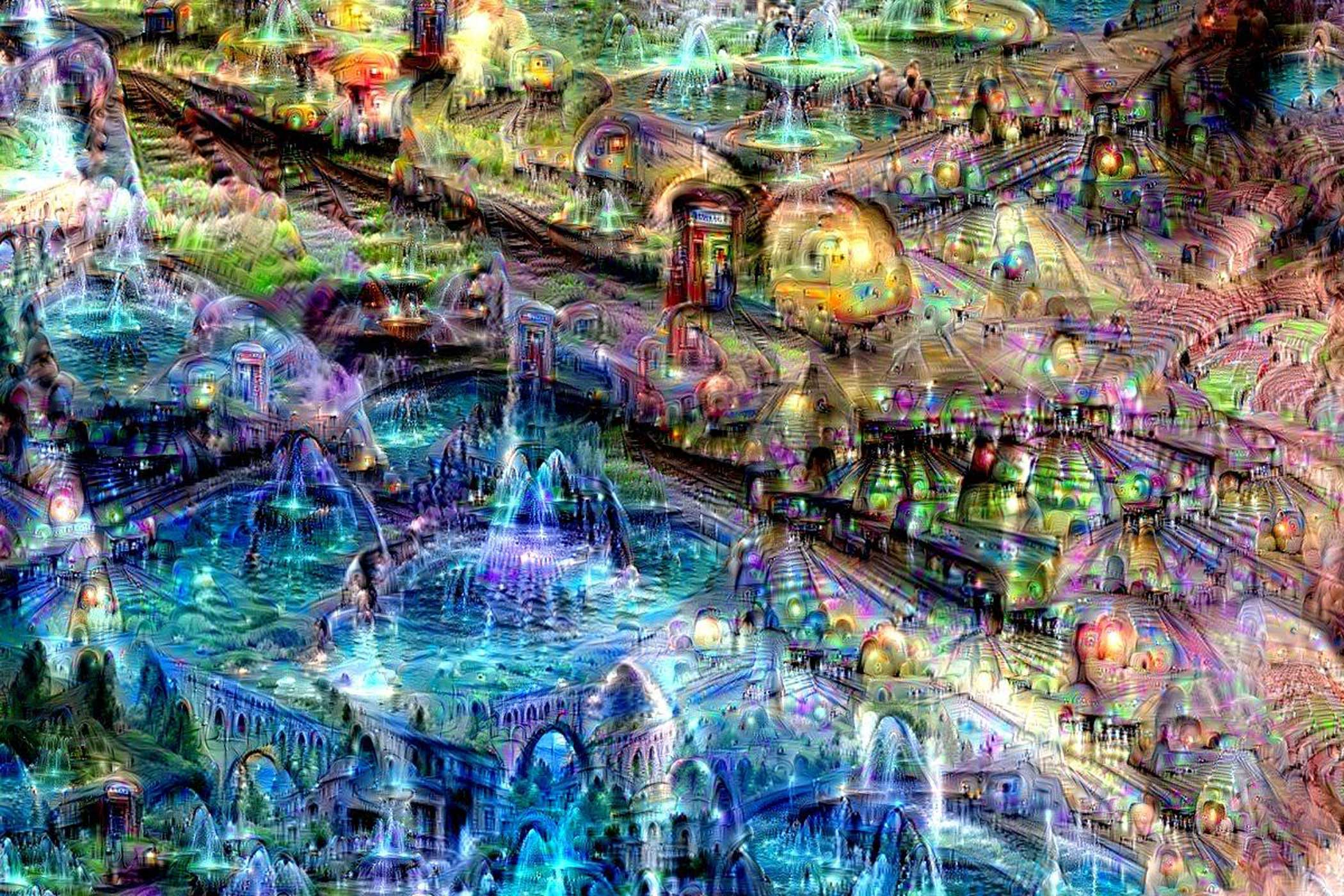

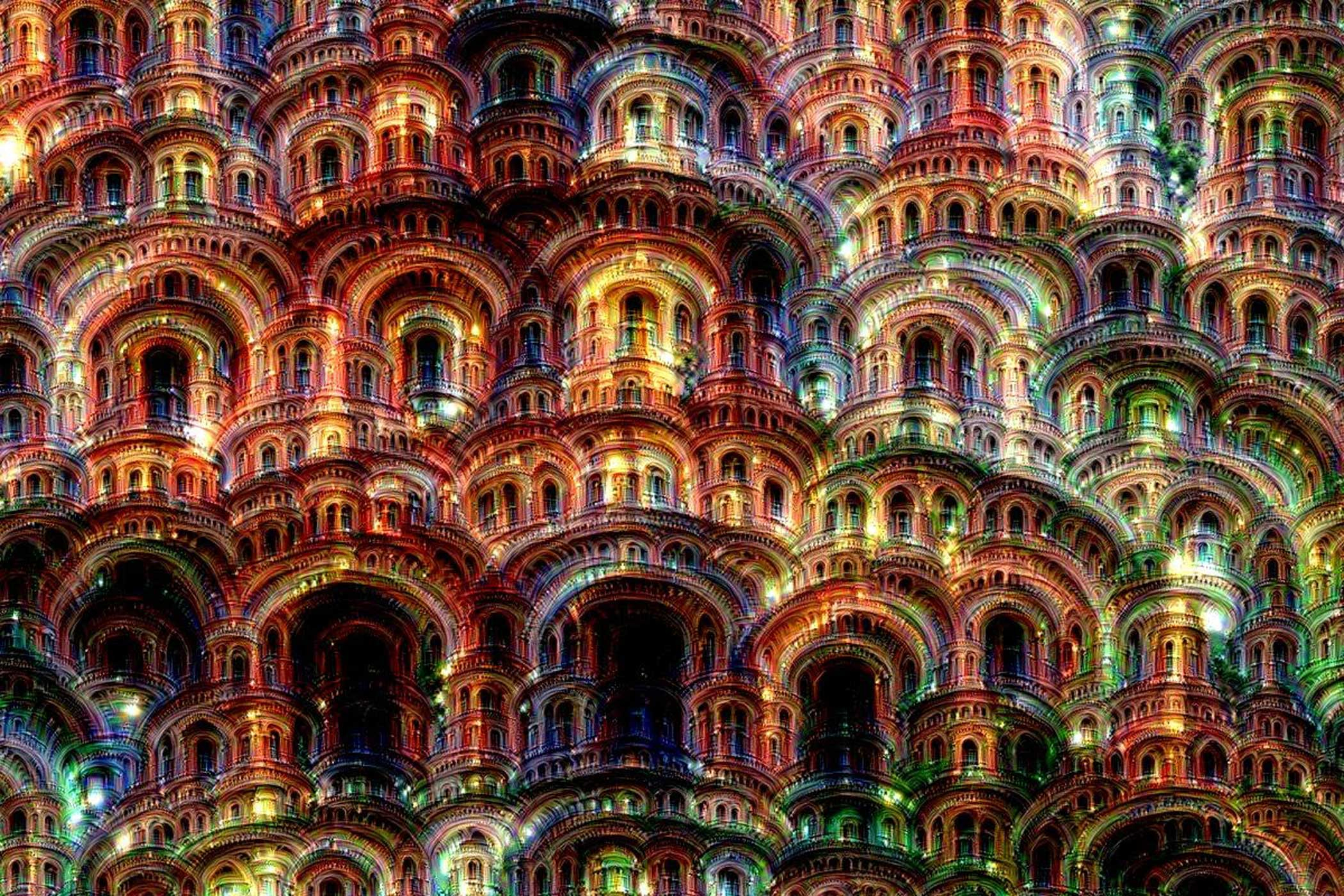

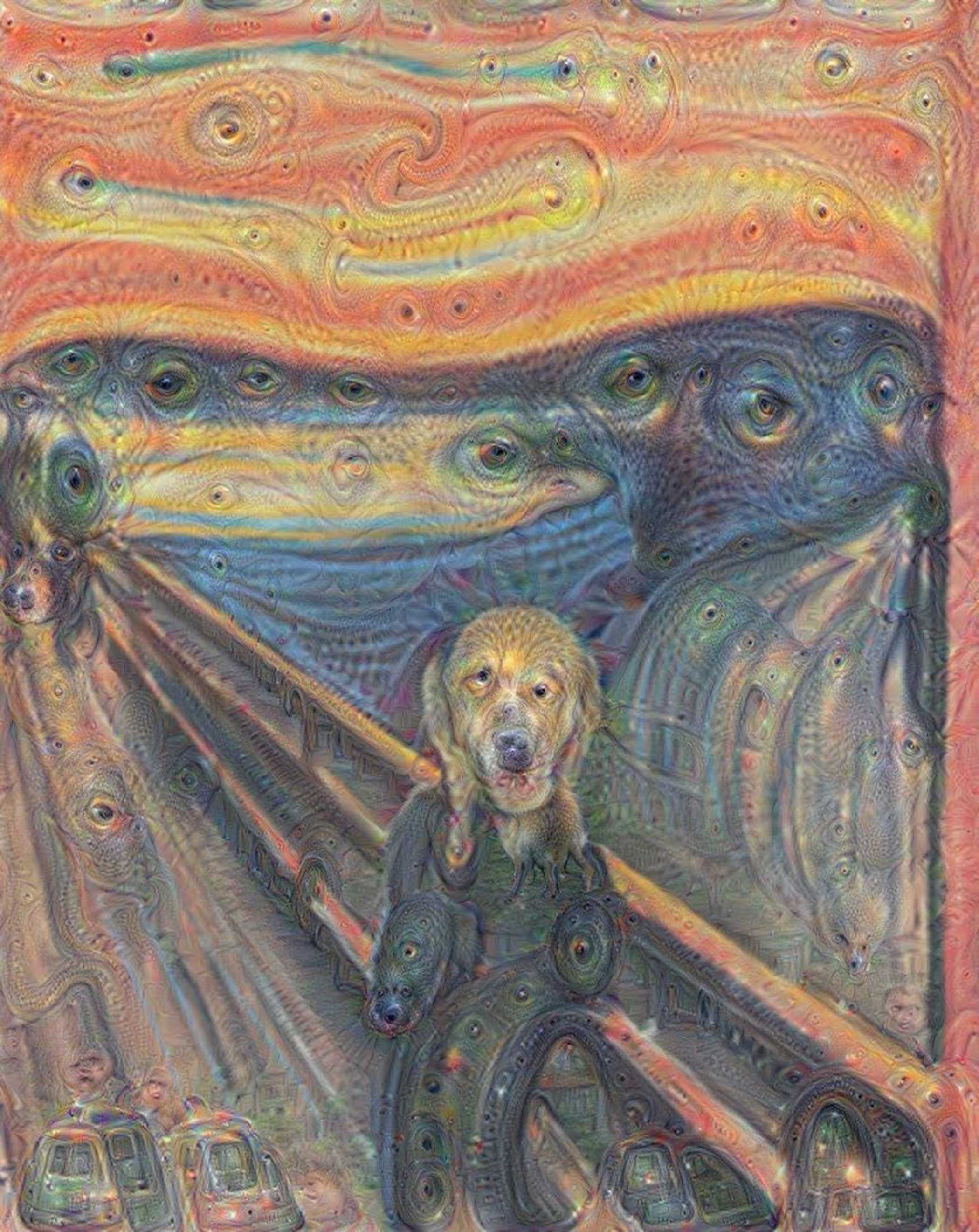

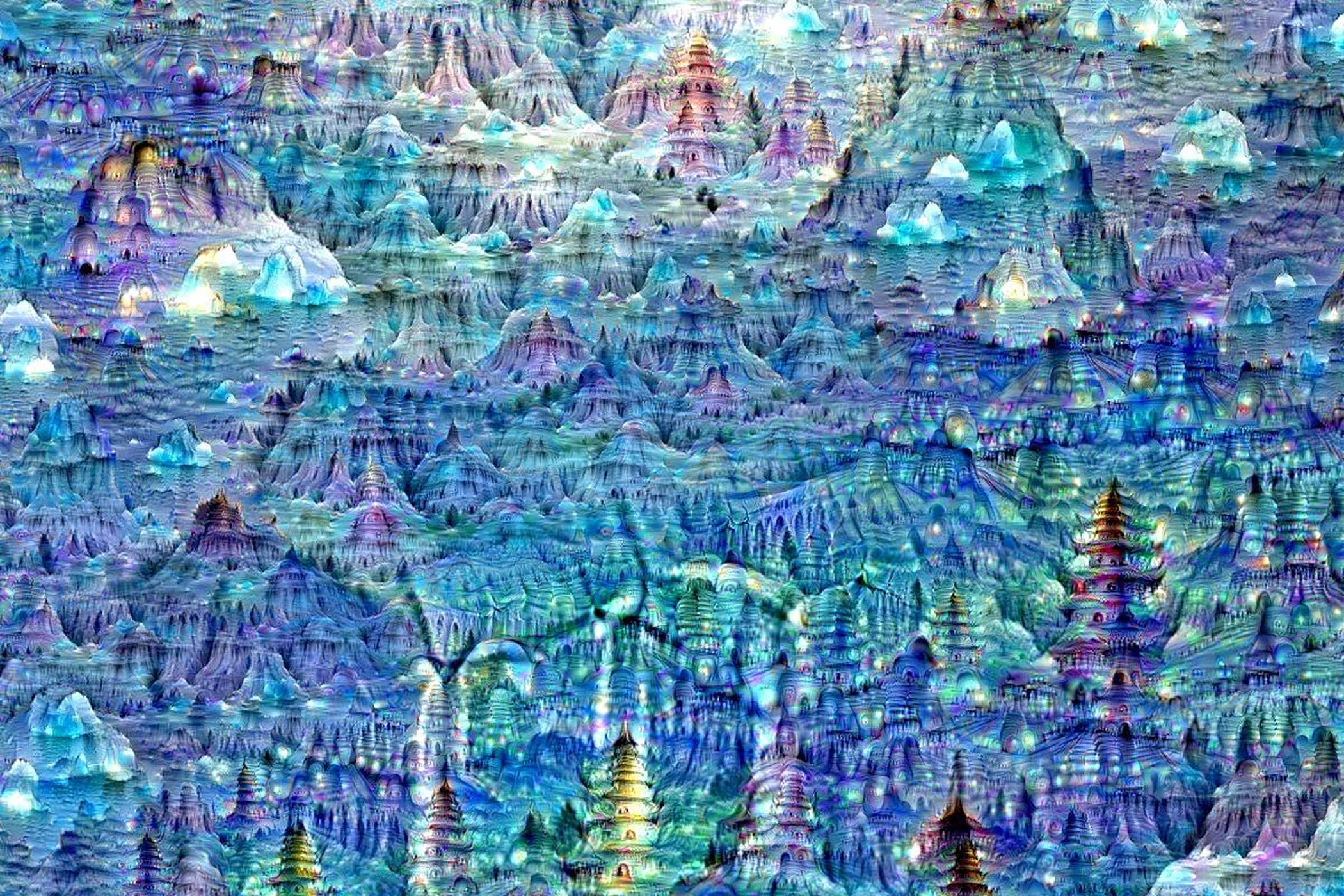

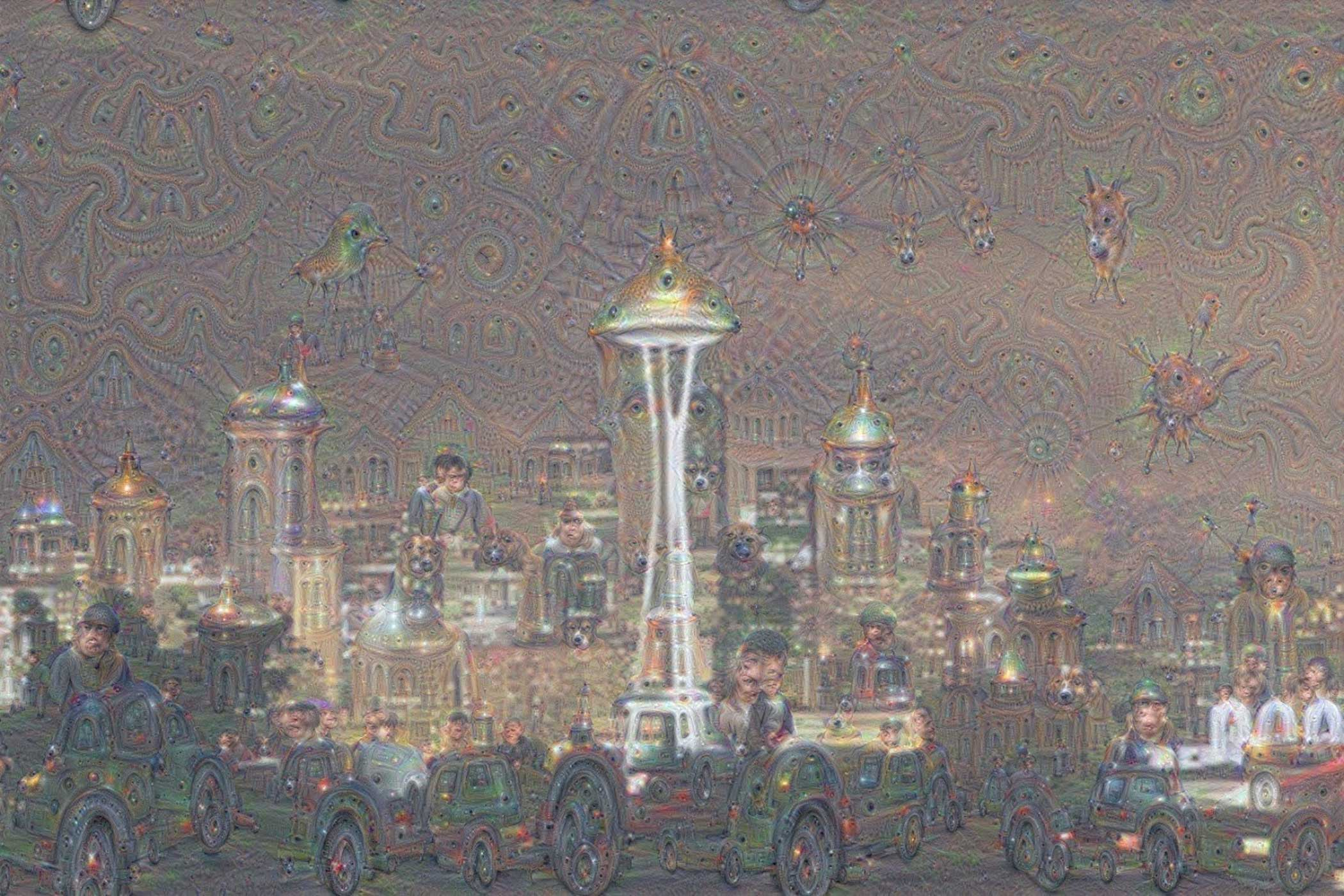

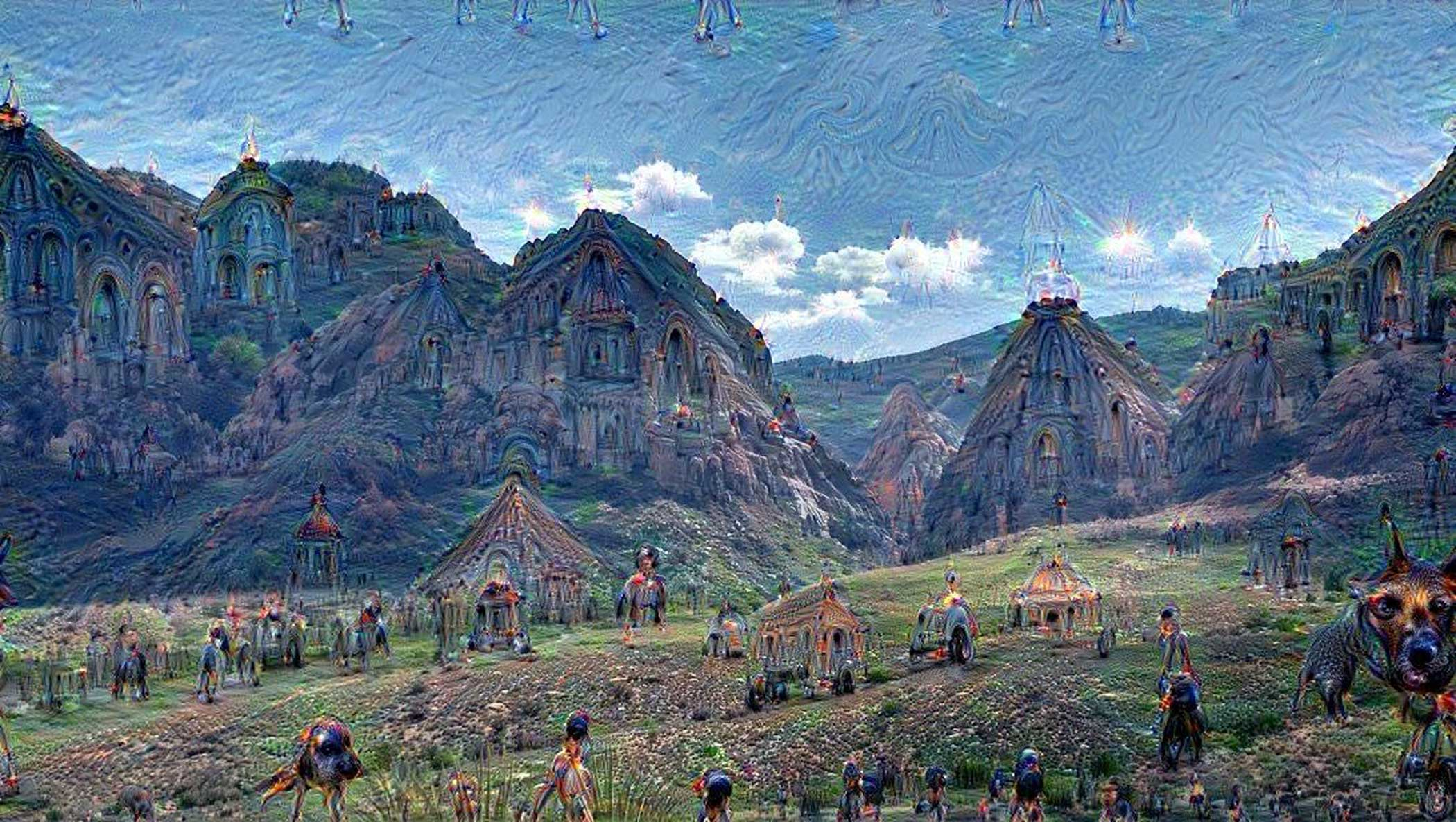

See the Fantastically Weird Images Google’s Self-Evolving Software Made
